I'm using Selenium in Python to do some web scraping in Chrome. The scraper loads a page, types a query into a search box, solves a basic captcha, scrapes the data, and repeats.

Problem

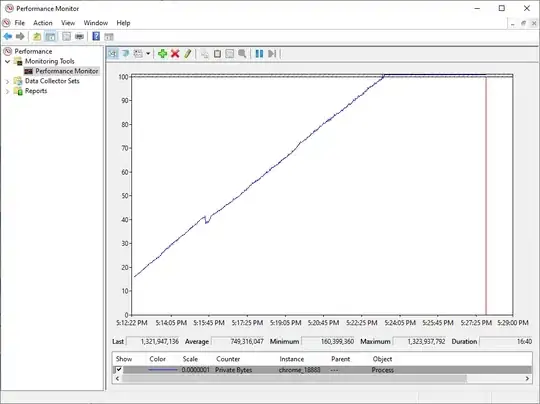

The chrome.exe process memory usage climbs continually, while the Python and chromedriver.exe processes have stable memory usage.

The memory leaked by Chrome grows in proportion to the number of pages loaded, so after around 600 scraped pages the Chrome window runs out of memory and shows the error "Google Chrome ran out of memory while trying to display this webpage."

My workarounds

Workaround 1: Keep a counter and, after every N pages, call driver.quit() followed by driver = webdriver.Chrome() to relaunch the browser. The memory quickly fills up again, though.
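For reference, a minimal sketch of what Workaround 1 looks like in code. The names `scrape_with_restarts`, `scrape_page`, `make_chrome_driver` and `pages_per_session` are placeholders I made up for this post, not part of Selenium, and `scrape_page` stands in for my real search/captcha/scrape logic.

```python
def make_chrome_driver():
    """Start a fresh Chrome session (Selenium is imported lazily)."""
    from selenium import webdriver
    return webdriver.Chrome()


def scrape_with_restarts(urls, scrape_page,
                         make_driver=make_chrome_driver,
                         pages_per_session=50):
    """Scrape every URL, relaunching the browser every N pages.

    scrape_page(driver, url) is a hypothetical callback that does the
    actual work for one page and returns the scraped data.
    """
    results = []
    driver = None
    pages_in_session = 0
    try:
        for url in urls:
            # Relaunch once the current session has handled N pages.
            if driver is None or pages_in_session >= pages_per_session:
                if driver is not None:
                    driver.quit()  # ends the session and closes the browser
                driver = make_driver()
                pages_in_session = 0
            results.append(scrape_page(driver, url))
            pages_in_session += 1
    finally:
        # Always shut the last browser down, even if a page raised.
        if driver is not None:
            driver.quit()
    return results
```

The driver factory is passed in as a parameter so the same loop can be pointed at a different browser without changing anything else.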

Workaround 2: Use Firefox with geckodriver instead. Its memory usage stays constant, with no leak.
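Switching browsers is a one-line change on the Selenium side. A rough sketch, assuming chromedriver or geckodriver is on the PATH; `launch_browser` is just a helper name I picked for illustration, not a Selenium function.

```python
def launch_browser(browser="firefox"):
    """Return a new WebDriver for the named browser.

    Hypothetical helper: only "chrome" and "firefox" are handled here.
    """
    name = browser.lower()
    if name not in ("chrome", "firefox"):
        raise ValueError("unsupported browser: %r" % browser)

    # Imported lazily so the name check above works without Selenium.
    from selenium import webdriver

    if name == "chrome":
        return webdriver.Chrome()
    return webdriver.Firefox()  # driven through geckodriver
```

The rest of the scraper is unchanged; only the driver object differs.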

Version Details

OS: Windows 10 Education 1909 18363.836

Python Version: 3.8.3

Selenium Version: 3.141.0

Chrome Version: 83.0.4103.61 (Official Build) (64-bit)

ChromeDriver: 83.0.4103.39