I've been trying to create a new Spark session through a Livy 0.7 server that runs on Ubuntu 18.04. On the same machine I have a running Spark cluster with 2 workers, and I'm able to create a normal Spark session on it.

My problem is that after I send the following request to the Livy server, the session stays stuck in the starting state:

import json, pprint, requests, textwrap

host = 'http://localhost:8998'

data = {'kind': 'spark'}

headers = {'Content-Type': 'application/json'}

r = requests.post(host + '/sessions', data=json.dumps(data), headers=headers)

r.json()
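To show how the stuck state can be observed, here is a minimal polling sketch. It assumes Livy's `GET /sessions/{id}/state` endpoint and the same host as above; the helper names `fetch_state` and `wait_until_started` are hypothetical, and it uses `urllib` from the standard library instead of `requests` so it has no extra dependencies.

```python
import json
import time
from urllib.request import urlopen

HOST = 'http://localhost:8998'  # assumed: same Livy endpoint as in the request above


def fetch_state(session_id, host=HOST):
    """Return the state string Livy reports for one session."""
    with urlopen(f'{host}/sessions/{session_id}/state') as resp:
        return json.load(resp)['state']


def wait_until_started(session_id, fetch=fetch_state, timeout=120,
                       interval=2, sleep=time.sleep):
    """Poll until the session leaves 'starting' or the timeout elapses.

    Returns the last state seen, so a result of 'starting' means the
    session was still stuck when the timeout was reached.
    """
    waited = 0
    state = fetch(session_id)
    while state == 'starting' and waited < timeout:
        sleep(interval)
        waited += interval
        state = fetch(session_id)
    return state
```

With the response from the POST above, this would be called as `wait_until_started(r.json()['id'])`. In my case it would keep returning the starting state until the timeout.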

From the session log I can see that the session starts and creates the Spark session:

20/06/03 13:52:31 INFO SparkEntries: Spark context finished initialization in 5197ms

20/06/03 13:52:31 INFO SparkEntries: Created Spark session.

20/06/03 13:52:46 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (xxx.xx.xx.xxx:1828) with ID 0

20/06/03 13:52:47 INFO BlockManagerMasterEndpoint: Registering block manager xxx.xx.xx.xxx:1830 with 434.4 MB RAM, BlockManagerId(0, xxx.xx.xx.xxx, 1830, None)

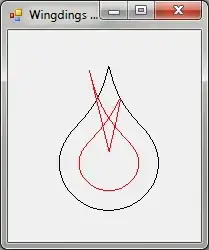

and also from the spark master UI:

and after the livy.rsc.server.idle-timeout is reached the session log then outputs:

20/06/03 14:28:04 WARN RSCDriver: Shutting down RSC due to idle timeout (10m).

20/06/03 14:28:04 INFO SparkUI: Stopped Spark web UI at http://172.17.52.209:4040

20/06/03 14:28:04 INFO StandaloneSchedulerBackend: Shutting down all executors

20/06/03 14:28:04 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

20/06/03 14:28:04 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

20/06/03 14:28:04 INFO MemoryStore: MemoryStore cleared

20/06/03 14:28:04 INFO BlockManager: BlockManager stopped

20/06/03 14:28:04 INFO BlockManagerMaster: BlockManagerMaster stopped

20/06/03 14:28:04 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

20/06/03 14:28:04 INFO SparkContext: Successfully stopped SparkContext

20/06/03 14:28:04 INFO SparkContext: SparkContext already stopped.

I already tried increasing the driver timeout, with no luck, and I couldn't find any known issue like this one. My guess is that it has something to do with the Spark driver's connectivity to the RSC, but I have no idea where to configure that.

Does anyone know the reason or a solution for this?