Unfortunately, I didn't quite get as fast as I wanted to be - so I will leave the question open in case someone knows a better answer.

Where did the problem originate.

I was looking into how to implement inclusive scan in-place on top of AVX2 SIMD extensions. My solution is entirely based on: @Zboson answer.

[a b c d ]

+ [0 a b c ]

= [a (a + b) (b + c) (c + d) ]

+ [0 0 a (a + b) ]

= [a (a + b) (a + b + c) (a + b + c + d) ]

Every one range algorithm that I implemented before worked well with the following iteration pattern (sudo code):

auto aligned_f = previous_aligned_address(f);

auto aligned_l = previous_aligned_address(l);

ignore_first_n ignore_first{f - aligned_f};

if (aligned_f != aligned_l) {

step(aligned_f, ignore_first); // Do a simd step, ignoring everything

// between aligned_f and f.

aligned_f += register_width;

ignore_first = ignore_first_n{0};

// Big unrolled loop.

main_loop(aligned_f, aligned_l);

if (aligned_f == aligned_l) return;

}

ignore_last_n ignore_last {aligned_l + register_width - l};

ignore_first_last ignore = combine(ignore_first, ignore_last);

// Do a simd step, ignoring everything between aligned_l and l.

// + handle the case when register is bigger than the array size.

step(aligned_l, ignore);

(If you do not know why it's OK to do this - see).

As both @PeterCordes and @PaulR mentioned, if you change the iteration pattern - mixin some of the other values and do a plain unaligned store and this is probably what I'll have to do. Then you can do at most one true masked store - only when register does not fit completely.

However, that is more assembly generated and I was not sure if I implemented store(address, register, ignore) in the most efficient way possible - hence was my question.

UPDATE: did try this, even without mixing anything in, you can just first load 2 overlapping registers and then store them back. Made things slightly worse. This does not seem to be a good idea, at least for inclusive scan.

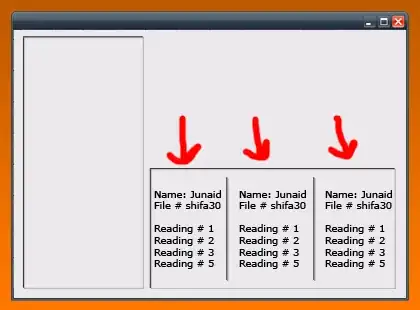

Measurements

The fast enough I defined as "beat the scalar version on 40 bytes of data" - 40 chars, 20 shorts and 10 integers. You might notice that 40 bytes > then the register size - so I would have to add an even smaller measurement for a more complicated iteration pattern.

I show the measurements for 2 cases <256, 1> - use 256 bit regestisters, no unrolling, <256, 2> - unroll the main loop twice.

NOTE: In benchmarks I account for possible code alignment issues by aligning benchmarking code in 64 different ways and picking minimum value.

_mm_maskmoveu_si128

Originally I went with _mm256_maskstore for sizeof(T) >= 4 and 2 _mm_maskmoveu_si128 for the rest.

This, as you can see - performed extremely poor - for char we loose to the scalar code about 10 times, about 20 times for short and 2 times for int.

Use memcpy for char and short

I tried a few different things: use _mm256_maskstore for short, memcpy for int, write my own inline memcpy for my this case. The best i got was: memcpy for char and short and maskstore for int.

It's a win for char, couple of nanoseconds difference between using no unrolling and unrolling twice, about a 30% loss for short and a 50% loss for int.

So, at the very least with my implementation of store(ptr, reg, ignore) I need to do a different iteration pattern if I don't want to peel loops.

Listing for store(addr, reg, ignore)

NOTE: I removed wrappers and adapters, might have added a few bugs.

// Only showing one ignore_broadcast, they are very similar and

// are actually generated with templates

template <register_256 Register, std::same<int> T>

inline __m256i ignore_broadcast(ignore_first_n ignore) {

__m256i idxs = _mm256_set_epi32(7, 6, 5, 4, 3, 2, 1, 0);

__m256i n_broadcasted = _mm256_set1_epi32(ignore.n - 1);

return _mm256_cmpgt_epi32(idxs, n_broadcasted);

}

template <template Register, typename T, typename Ignore>

void store(Register reg, T* ptr, Ignore ignore) {

if constexpr (sizeof(T) >= 4) {

const auto mask = ignore_broadcast<Register, T>(ignore);

_store::maskstore(ptr, mask, reg);

return;

}

std::size_t start = 0, n = sizeof(reg) / sizeof(T);

if constexpr (std::is_same_v<Ignore, ignore_first_n>) {

start += ignore.n;

n -= ignore.n;

} else if constexpr (std::is_same_v<Ignore, ignore_last_n>) {

n -= ignore.n;

} else {

static_assert(std::is_same_v<Ignore, ignore_first_last>);

start += ignore.first_n;

n -= ignore.first_n + ignore.last_n;

}

// This requires to store the register on the stack.

std::memcpy(raw_ptr + start, reinterpret_cast<T*>(®) + start, n * sizeof(T));

}

What does memcpy do

This is the memcpy that gets called.

It implements copy for under 32 bytes in the following way:

#if VEC_SIZE > 16

/* From 16 to 31. No branch when size == 16. */

L(between_16_31):

vmovdqu (%rsi), %xmm0

vmovdqu -16(%rsi,%rdx), %xmm1

vmovdqu %xmm0, (%rdi)

vmovdqu %xmm1, -16(%rdi,%rdx)

ret

#endif

L(between_8_15):

/* From 8 to 15. No branch when size == 8. */

movq -8(%rsi,%rdx), %rcx

movq (%rsi), %rsi

movq %rcx, -8(%rdi,%rdx)

movq %rsi, (%rdi)

ret

L(between_4_7):

/* From 4 to 7. No branch when size == 4. */

movl -4(%rsi,%rdx), %ecx

movl (%rsi), %esi

movl %ecx, -4(%rdi,%rdx)

movl %esi, (%rdi)

ret

L(between_2_3):

/* From 2 to 3. No branch when size == 2. */

movzwl -2(%rsi,%rdx), %ecx

movzwl (%rsi), %esi

movw %cx, -2(%rdi,%rdx)

movw %si, (%rdi)

ret

So basically - take the biggest register that fits and do two overlapping stores.

I tried to do that inline - calling memcpy was faster - maybe I didn't do right though.

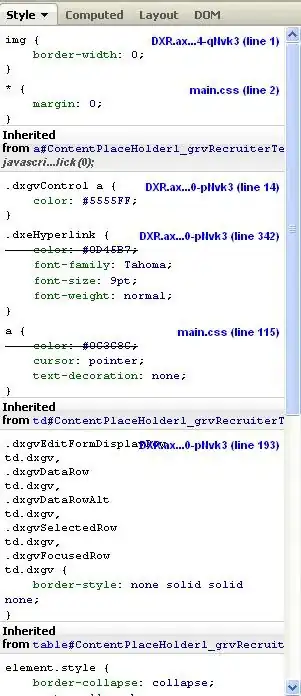

Assembly and code

Reading my code might be a bit tricky, especially because I'm relying on eve library that is not yet open-source.

So I compiled and published couple of assembly listings:

Complete assembly for int, no unrolling

Complete assembly for short, no unrolling

My code can be found here

PS: Measuring big size

If you are interested, on a big enough array doing this type of vectorisation is a good win. On 10'000 bytes for example.

About 5 times for chars, 3 times for shorts and 2 times for ints.

PS: On unrolling

I didn't come up with some clever unrolling. The very basic unrolling twice gives about 10% win for 10000 bytes of short. Unrolling more didn't help.

The reason why the win is this small, I suspect, is because the algorithm is quite complicated.