First I create some toy data:

n_samples=20

X=np.concatenate((np.random.normal(loc=2, scale=1.0, size=n_samples),np.random.normal(loc=20.0, scale=1.0, size=n_samples),[10])).reshape(-1,1)

y=np.concatenate((np.repeat(0,n_samples),np.repeat(1,n_samples+1)))

plt.scatter(X,y)

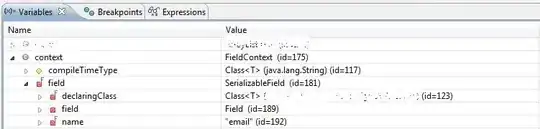

Below the graph to visualize the data:

Then I train a model with LinearSVC

from sklearn.svm import LinearSVC

svm_lin = LinearSVC(C=1)

svm_lin.fit(X,y)

My understand for C is that:

- If

Cis very big, then misclassifications will not be tolerated, because the penalty will be big. - If

Cis small, misclassifications will be tolerated to make the margin (soft margin) larger.

With C=1, I have the following graph (the orange line represent the predictions for given x values), and we can see the decision boundary is around 7, so C=1 is big enough to not let any misclassification.

X_test_svml=np.linspace(-1, 30, 300).reshape(-1,1)

plt.scatter(X,y)

plt.scatter(X_test_svml,svm_lin.predict(X_test_svml),marker="_")

plt.axhline(.5, color='.5')

With C=0.001 for example, I am expecting the decision boundary to go to right-hand side, around 11 for example, but I got this:

I tried with another module with the SVC function:

from sklearn.svm import SVC

svc_lin = SVC(kernel = 'linear', random_state = 0,C=0.01)

svc_lin.fit(X,y)

I successfully got the desired output:

And with my R code, I got something more understandable. (I used svm function from e1071 package)