I'm trying to stream bitmaps over WebRTC. My capturer class looks roughly like this:

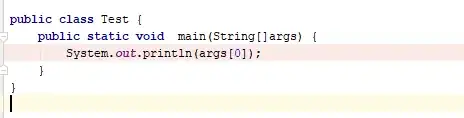

    public class BitmapCapturer implements VideoCapturer, VideoSink {

        private Capturer capturer;
        private int width;
        private int height;
        private SurfaceTextureHelper textureHelper;
        private Context appContext;
        @Nullable
        private CapturerObserver capturerObserver;

        @Override
        public void initialize(SurfaceTextureHelper surfaceTextureHelper,
                Context context, CapturerObserver capturerObserver) {
            if (capturerObserver == null) {
                throw new RuntimeException("capturerObserver not set.");
            } else {
                this.appContext = context;
                this.textureHelper = surfaceTextureHelper;
                this.capturerObserver = capturerObserver;
                this.capturer = new Capturer();
                this.textureHelper.startListening(this);
            }
        }

        @Override
        public void startCapture(int width, int height, int fps) {
            this.width = width;
            this.height = height;
            long start = System.nanoTime();
            textureHelper.setTextureSize(width, height);

            int[] textures = new int[1];
            GLES20.glGenTextures(1, textures, 0);
            GLES20.glBindTexture(GLES20.GL_TEXTURE_2D, textures[0]);

            Matrix matrix = new Matrix();
            matrix.preTranslate(0.5f, 0.5f);
            matrix.preScale(1f, -1f);
            matrix.preTranslate(-0.5f, -0.5f);

            YuvConverter yuvConverter = new YuvConverter();
            TextureBufferImpl buffer = new TextureBufferImpl(width, height,
                    VideoFrame.TextureBuffer.Type.RGB, textures[0], matrix,
                    textureHelper.getHandler(), yuvConverter, null);

            this.capturerObserver.onCapturerStarted(true);
            this.capturer.startCapture(new ScreenConfig(width, height),
                    new CapturerCallback() {
                        @Override
                        public void onFrame(Bitmap bitmap) {
                            textureHelper.getHandler().post(() -> {
                                GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MIN_FILTER, GLES20.GL_NEAREST);
                                GLES20.glTexParameteri(GLES20.GL_TEXTURE_2D, GLES20.GL_TEXTURE_MAG_FILTER, GLES20.GL_NEAREST);
                                GLUtils.texImage2D(GLES20.GL_TEXTURE_2D, 0, bitmap, 0);

                                long frameTime = System.nanoTime() - start;
                                VideoFrame videoFrame = new VideoFrame(buffer.toI420(), 0, frameTime);
                                capturerObserver.onFrameCaptured(videoFrame);
                                videoFrame.release();
                            });
                        }
                    });
        }

        @Override
        public void onFrame(VideoFrame videoFrame) {
            capturerObserver.onFrameCaptured(videoFrame);
        }

        @Override
        public void stopCapture() throws InterruptedException {
        }

        @Override
        public void changeCaptureFormat(int i, int i1, int i2) {
        }

        @Override
        public void dispose() {
        }

        @Override
        public boolean isScreencast() {
            return true;
        }
    }
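For clarity, this is what I believe the `Matrix` built in `startCapture` does: it flips the texture vertically around its centre, mapping `(x, y)` to `(x, 1 - y)` in normalized texture coordinates. Below is a minimal plain-Java sketch of the same three steps, assuming that each `pre*` call right-multiplies the current matrix. The class and method names are mine, for illustration only; they are not part of Android or WebRTC.

```java
/** Sketch: the affine transform from startCapture, written out with plain 3x3 matrices. */
final class FlipMatrixSketch {

    /** Multiplies two 3x3 row-major matrices: result = a * b. */
    static float[] multiply(float[] a, float[] b) {
        float[] r = new float[9];
        for (int row = 0; row < 3; row++) {
            for (int col = 0; col < 3; col++) {
                float sum = 0f;
                for (int k = 0; k < 3; k++) {
                    sum += a[row * 3 + k] * b[k * 3 + col];
                }
                r[row * 3 + col] = sum;
            }
        }
        return r;
    }

    static float[] translate(float dx, float dy) {
        return new float[] {1f, 0f, dx, 0f, 1f, dy, 0f, 0f, 1f};
    }

    static float[] scale(float sx, float sy) {
        return new float[] {sx, 0f, 0f, 0f, sy, 0f, 0f, 0f, 1f};
    }

    /** Same order as preTranslate(0.5, 0.5), preScale(1, -1), preTranslate(-0.5, -0.5). */
    static float[] verticalFlip() {
        float[] m = translate(0.5f, 0.5f);
        m = multiply(m, scale(1f, -1f));
        m = multiply(m, translate(-0.5f, -0.5f));
        return m;
    }

    /** Applies the matrix to the point (x, y) and returns {x', y'}. */
    static float[] apply(float[] m, float x, float y) {
        return new float[] {
            m[0] * x + m[1] * y + m[2],
            m[3] * x + m[4] * y + m[5]
        };
    }
}
```

So the matrix only mirrors rows; it does not swap or rescale the width and height, which is why I don't think it explains the distortion.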

The resulting stream looks like this:

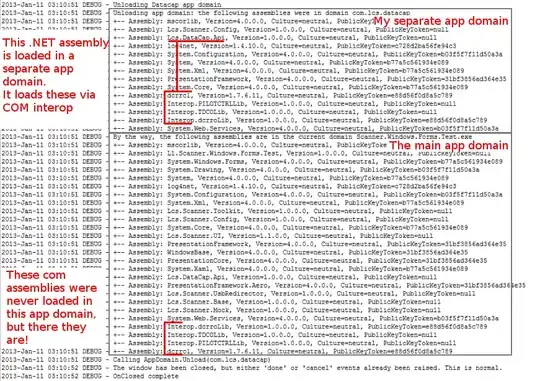

Below are the results of my experiments so far.

If the frame is rotated by 90 degrees, the stream looks normal:

    VideoFrame videoFrame = new VideoFrame(buffer.toI420(), 90, frameTime);
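As far as I understand, the rotation argument is only metadata: the buffer keeps its own width and height, and the receiver rotates it for display, so a rotation of 90 or 270 swaps the displayed dimensions. Here is a minimal sketch of that relationship. The helper names are hypothetical, and I'm assuming this matches what `VideoFrame.getRotatedWidth()` and `getRotatedHeight()` report.

```java
/** Sketch: displayed size of a frame once its rotation metadata is applied. */
final class RotatedSizeSketch {

    /** Width after rotation: unchanged for 0/180, swapped with height for 90/270. */
    static int rotatedWidth(int width, int height, int rotation) {
        return rotation % 180 == 0 ? width : height;
    }

    /** Height after rotation: unchanged for 0/180, swapped with width for 90/270. */
    static int rotatedHeight(int width, int height, int rotation) {
        return rotation % 180 == 0 ? height : width;
    }
}
```

For example, a 720x1280 portrait buffer sent with rotation 90 would be presented as 1280x720 on the receiving side, i.e. as a horizontal frame.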

I tried swapping the width and height of the TextureBuffer:

    TextureBufferImpl buffer = new TextureBufferImpl(height, width, VideoFrame.TextureBuffer.Type.RGB, textures[0], matrix, textureHelper.getHandler(), yuvConverter, null);

I also tried passing height as both the width and the height:

    TextureBufferImpl buffer = new TextureBufferImpl(height, height, VideoFrame.TextureBuffer.Type.RGB, textures[0], matrix, textureHelper.getHandler(), yuvConverter, null);

And here I'm confused. It seems that WebRTC somehow expects a horizontal frame even though all sizes are set to vertical. I logged the frame buffer sizes inside the WebRTC library all the way up to the video encoder, and they are correct. The issue doesn't seem to be in the conversion method either: besides the approach above, I also tried converting with ARGBToI420 from libyuv, and the results were the same.
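To make the sizes I expect explicit, here is a simplified plain-Java stand-in for the CPU conversion path. It is not libyuv's `ARGBToI420`: it assumes packed `0xAARRGGBB` ints (as I'd get from `Bitmap.getPixels`), uses a common integer BT.601 limited-range approximation, and takes chroma from the top-left pixel of each 2x2 block instead of averaging. All names are mine. The point is the plane layout: the Y stride equals the frame width and the chroma stride is half of it, so a mismatch between the row length used when writing and the stride used when reading would skew the picture.

```java
/** Sketch: ARGB to I420 with explicit strides (simplified, not libyuv). */
final class ArgbToI420Sketch {

    /** Three I420 planes; strides equal the plane widths (no row padding). */
    static final class I420 {
        final int width, height, strideY, strideUV;
        final byte[] y, u, v;

        I420(int width, int height) {
            this.width = width;
            this.height = height;
            this.strideY = width;
            this.strideUV = (width + 1) / 2;
            int chromaHeight = (height + 1) / 2;
            this.y = new byte[strideY * height];
            this.u = new byte[strideUV * chromaHeight];
            this.v = new byte[strideUV * chromaHeight];
        }
    }

    static int clamp(int value) {
        return Math.max(0, Math.min(255, value));
    }

    /** argb holds one 0xAARRGGBB pixel per entry, row-major, width pixels per row. */
    static I420 convert(int[] argb, int width, int height) {
        I420 out = new I420(width, height);
        for (int row = 0; row < height; row++) {
            for (int col = 0; col < width; col++) {
                int p = argb[row * width + col];
                int r = (p >> 16) & 0xFF;
                int g = (p >> 8) & 0xFF;
                int b = p & 0xFF;
                // Integer approximation of BT.601 limited-range luma.
                int luma = ((66 * r + 129 * g + 25 * b + 128) >> 8) + 16;
                out.y[row * out.strideY + col] = (byte) clamp(luma);
                // One chroma sample per 2x2 block, taken from its top-left pixel.
                if ((row & 1) == 0 && (col & 1) == 0) {
                    int cb = ((-38 * r - 74 * g + 112 * b + 128) >> 8) + 128;
                    int cr = ((112 * r - 94 * g - 18 * b + 128) >> 8) + 128;
                    int idx = (row / 2) * out.strideUV + (col / 2);
                    out.u[idx] = (byte) clamp(cb);
                    out.v[idx] = (byte) clamp(cr);
                }
            }
        }
        return out;
    }
}
```

With a vertical frame (say width 2, height 4), this gives a Y plane of 8 bytes with stride 2 and chroma planes of 2 bytes each with stride 1, which matches the sizes I see in my logs.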

I would be extremely grateful for any assistance.