I have tried a lot of solutions, both in R and in Python, and have given up.

I am trying to read a huge .csv file (1.6 GB).

I cannot even import it with pandas (I managed to import it with R).

I started with a simple import. Calling `pd.read_csv()` with just the file name gives the same result.

I receive this error:

`UnicodeDecodeError: 'utf-8' codec can't decode byte 0xb5 in position 0: invalid start byte`

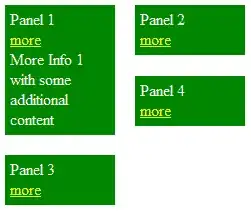
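Since the decoder fails at position 0, one way to narrow this down is to look at the raw bytes at the start of the file before any decoding happens. This is a minimal diagnostic sketch, not a fix: `peek` and `sniff_bom` are hypothetical helper names, and it only recognizes the standard UTF-8/16/32 byte-order marks (BOMs). Byte 0xb5 is not the first byte of any of these marks.

```python
import codecs

# Byte-order marks and the codec that decodes them (and strips the mark).
# The UTF-32-LE BOM begins with the UTF-16-LE BOM, so UTF-32 is checked first.
_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32"),
    (codecs.BOM_UTF32_BE, "utf-32"),
    (codecs.BOM_UTF8, "utf-8-sig"),
    (codecs.BOM_UTF16_LE, "utf-16"),
    (codecs.BOM_UTF16_BE, "utf-16"),
]


def peek(path, n=64):
    """Return the first `n` raw bytes of a file without decoding them."""
    with open(path, "rb") as f:
        return f.read(n)


def sniff_bom(head):
    """Return the codec implied by a BOM at the start of `head`, or None."""
    for bom, codec in _BOMS:
        if head.startswith(bom):
            return codec
    return None
```

For example, `print(peek("file_name.csv"))` shows the raw leading bytes; if `sniff_bom` returns `None`, the file has no BOM and the encoding has to be guessed some other way.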

I googled this error and got to this question.

From that question I tried solutions such as

`encoding = 'unicode_escape'`

It gives me this error:

`UnicodeDecodeError: 'unicodeescape' codec can't decode byte 0x5c in position 0: \ at end of string`
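This error is expected: `unicode_escape` is not a file encoding but the set of rules Python uses for backslash escapes in string literals. A lone backslash byte (0x5c) at the end of the input cannot be decoded, and backslash sequences inside the data would be silently rewritten. A small demonstration (`decode_escape` is just an illustrative wrapper):

```python
def decode_escape(data):
    """Decode bytes with Python's 'unicode_escape' codec."""
    return data.decode("unicode_escape")


# Backslash sequences are interpreted as escapes, not kept as data.
print(repr(decode_escape(b"caf\\xe9")))  # 'café'
print(repr(decode_escape(b"C:\\new")))   # 'C:\new' (the \n became a newline)

# A trailing lone backslash raises the same kind of error as in the question.
try:
    decode_escape(b"\\")
except UnicodeDecodeError as exc:
    print(exc)
```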

This solution

`pd.read_csv('file_name.csv', engine='python')`

gives me an error:

`ParserError: NULL byte detected. This byte cannot be processed in Python's native csv library at the moment, so please pass in engine='c' instead`
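The NULL-byte message may be a clue. One common cause (an assumption here, not confirmed for this file) is a file saved as UTF-16, where mostly-ASCII text has a 0x00 byte in every other position. The sketch below checks a raw sample for that pattern; `guess_utf16` and its 0.4 threshold are hypothetical choices.

```python
def guess_utf16(sample, threshold=0.4):
    """Guess whether `sample` (raw bytes) looks like BOM-less UTF-16 text.

    Returns "utf-16-le" if NUL bytes cluster at odd offsets,
    "utf-16-be" if they cluster at even offsets, otherwise None.
    """
    even = sample[0::2]
    odd = sample[1::2]
    if not even or not odd:
        return None
    even_nul = even.count(b"\x00") / len(even)
    odd_nul = odd.count(b"\x00") / len(odd)
    # Little-endian ASCII: the high byte (0x00) comes second in each pair.
    if odd_nul >= threshold and even_nul < threshold:
        return "utf-16-le"
    # Big-endian ASCII: the high byte (0x00) comes first in each pair.
    if even_nul >= threshold and odd_nul < threshold:
        return "utf-16-be"
    return None
```

If it returns a codec name, that name can be tried as the `encoding` argument of `pd.read_csv`.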

If I set the engine to `'c'`,

`taxi_2020 = pd.read_csv(path, engine = 'c')`

I get the same error again:

`UnicodeDecodeError: 'utf-8' codec can't decode byte 0xb5 in position 0: invalid start byte`
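Rather than trying encodings one by one in `read_csv`, a sample of the raw bytes can be test-decoded first. This is a sketch: the candidate list is an assumption, and success only means the bytes are *valid* in that encoding, not that the characters are right. In particular, `latin-1` maps every byte to a character, so it never fails and is only a last resort. Byte 0xb5 is `µ` in both `latin-1` and `cp1252`.

```python
import codecs


def first_decodable(sample, candidates=("utf-8", "cp1252", "latin-1")):
    """Return the first codec in `candidates` that decodes `sample` without errors.

    An incremental decoder with final=False is used so that a multi-byte
    character cut off at the end of the sample is not counted as an error.
    """
    for codec in candidates:
        decoder = codecs.getincrementaldecoder(codec)()
        try:
            decoder.decode(sample, final=False)
        except UnicodeDecodeError:
            continue
        return codec
    return None
```

A sample could be read with `open(path, "rb").read(1_000_000)` and the result passed as `pd.read_csv(path, encoding=...)`. A sample from the start of the file may not contain every problematic byte, so this can still guess wrong.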

I also tried this:

`import sys`

`reload(sys)`

`sys.setdefaultencoding("ISO-8859-1")`

but it does not apply to Python 3.6.
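In Python 3 there is no process-wide default encoding to change; the encoding is chosen for each file or stream when it is opened (`open(..., encoding=...)`, or the `encoding` argument of `pd.read_csv`). A minimal stdlib illustration, with `read_header` as a hypothetical helper and ISO-8859-1 (the encoding from the snippet above) as the assumed file encoding:

```python
import csv
import io
import sys

# sys.setdefaultencoding was removed in Python 3, so this prints False.
print(hasattr(sys, "setdefaultencoding"))


def read_header(raw, encoding):
    """Decode raw CSV bytes with an explicit encoding and return the first row."""
    text = io.TextIOWrapper(io.BytesIO(raw), encoding=encoding, newline="")
    return next(csv.reader(text))


# The encoding is passed per stream; no global setting is involved.
print(read_header("µ,b\n1,2\n".encode("ISO-8859-1"), "ISO-8859-1"))  # ['µ', 'b']
```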

The more I try, the bigger the decision tree of my errors gets. R does import this data, but I have issues there too when working with character columns: when I try to convert a character column to a date, it says `input string 1 is invalid UTF-8`.

I am new to Python, so I would greatly appreciate help with a simple and universal way to import such data. The dataset is huge, so I am not sure I can upload it anywhere.
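One approach that could help both R and pandas is to convert the file to UTF-8 once, streaming it so the 1.6 GB file never has to fit in memory. This sketch assumes the source encoding is already known (it is a parameter, not detected). `transcode_to_utf8` and the file names are hypothetical, and `drop_nul=True` is only appropriate if the NUL bytes are stray padding rather than real data.

```python
import codecs


def transcode_to_utf8(src_path, dst_path, src_encoding, drop_nul=False,
                      chunk_size=1 << 20):
    """Copy `src_path` to `dst_path`, re-encoding from `src_encoding` to UTF-8.

    The file is read in binary chunks; an incremental decoder keeps any
    multi-byte character that straddles a chunk boundary intact.
    """
    decoder = codecs.getincrementaldecoder(src_encoding)()
    with open(src_path, "rb") as src, \
            open(dst_path, "w", encoding="utf-8", newline="") as dst:
        while True:
            chunk = src.read(chunk_size)
            # final=True on the empty last read flushes buffered bytes
            # (and raises if the file ends mid-character).
            text = decoder.decode(chunk, final=not chunk)
            if drop_nul:
                text = text.replace("\x00", "")
            dst.write(text)
            if not chunk:
                break
```

For example, `transcode_to_utf8("file_name.csv", "file_name_utf8.csv", "cp1252")` followed by `pd.read_csv("file_name_utf8.csv")`, since pandas reads UTF-8 by default.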