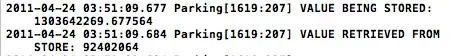

I'm building a many-to-many network in Keras using an LSTM. My sequences vary in length (the labels always have the same length as the sequence they describe). After searching other SO posts, padding + masking looks like the best way to handle the varying lengths.

So I have n (874) samples of padded sequences of length max_len (24), with 25 features each. But how do I handle my labels? Do I pad them too?

If I pad them the same way as my X (with the same special value), I get this:

X_train shape : (873, 24, 25)

y_train shape : (873, 24)
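To make the padding step concrete, here is a minimal sketch of what I mean, not my actual code. The data is random stand-in data, the labels are assumed to be binary (one per timestep), `PAD_VALUE = -1.0` is an assumed special value, and `pad_to` is a hypothetical helper written just for this example.

```python
import numpy as np

N_SAMPLES, MAX_LEN, N_FEATURES = 873, 24, 25
PAD_VALUE = -1.0  # assumption: stand-in for the "special value" used for padding


def pad_to(seq, max_len, pad_value):
    """Right-pad `seq` along its first axis up to `max_len` with `pad_value`."""
    seq = np.asarray(seq, dtype="float32")
    # Pad only the time axis; leave any feature axis untouched.
    pad_width = [(0, max_len - len(seq))] + [(0, 0)] * (seq.ndim - 1)
    return np.pad(seq, pad_width, mode="constant", constant_values=pad_value)


# Stand-in data: variable-length sequences with one binary label per timestep.
rng = np.random.default_rng(0)
lengths = rng.integers(1, MAX_LEN + 1, size=N_SAMPLES)
X_raw = [rng.random((n, N_FEATURES)) for n in lengths]
y_raw = [rng.integers(0, 2, size=n) for n in lengths]

# Pad features and labels the same way, then stack into dense arrays.
X_train = np.stack([pad_to(x, MAX_LEN, PAD_VALUE) for x in X_raw])
y_train = np.stack([pad_to(y, MAX_LEN, PAD_VALUE) for y in y_raw])

print("X_train shape :", X_train.shape)
print("y_train shape :", y_train.shape)
```

Padded this way, both arrays share the same first two dimensions (samples, timesteps), and the labels have no trailing feature axis.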

All is fine, except that I get the following error:

ValueError: Can not squeeze dim[1], expected a dimension of 1, got 24 for '{{node binary_crossentropy/weighted_loss/Squeeze}} = Squeeze[T=DT_FLOAT, squeeze_dims=[-1]](Cast_1)' with input shapes: [1,24].

Searching for this error leads to posts about removing return_sequences=True from my LSTM layer, but I don't want that since each of my timesteps is labelled...

And if I don't pad them, they can't be converted to a tensor to be used by tensorflow.

Edit:

Explanatory illustration of the architecture I want to achieve, courtesy of this answer: https://stackoverflow.com/a/52092176/7732923