I am training an object detection model with Azure Custom Vision (customvision.ai). The model is exported for TensorFlow, either as a saved model (.pb), .tf, or .tflite file.

The model output type is designated as float32[1,13,13,50]
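One possible reading of that shape, assuming the Custom Vision export uses a YOLOv2-style detection head (an assumption; the post does not state the architecture): a 13x13 grid (416 / 32 = 13 for the 416x416 input used in the conversion command) with 50 channels per cell, i.e. 5 anchors x (4 box offsets + 1 objectness + 5 class scores). The sketch below only splits the raw tensor along those axes. The anchor count, the per-anchor channel order, and the helper name `split_raw_output` are all hypothetical.

```python
import numpy as np

# Assumptions (not confirmed by the post): YOLOv2-style layout with
# NUM_ANCHORS anchors per grid cell, each laid out contiguously as
# [tx, ty, tw, th, objectness, class_0 .. class_{C-1}].
NUM_ANCHORS = 5


def split_raw_output(raw: np.ndarray, num_anchors: int = NUM_ANCHORS):
    """Split a [1, H, W, A * (5 + C)] tensor into box, objectness and class parts."""
    batch, grid_h, grid_w, channels = raw.shape
    if channels % num_anchors != 0:
        raise ValueError(f"{channels} channels not divisible by {num_anchors} anchors")
    per_anchor = channels // num_anchors          # 50 // 5 = 10
    num_classes = per_anchor - 5                  # 10 - 5 = 5 (assumed)
    cells = raw.reshape(batch, grid_h, grid_w, num_anchors, per_anchor)
    box_offsets = cells[..., 0:4]                 # tx, ty, tw, th
    objectness = cells[..., 4]                    # one value per anchor
    class_logits = cells[..., 5:]                 # num_classes values per anchor
    return box_offsets, objectness, class_logits, num_classes


if __name__ == "__main__":
    raw = np.zeros((1, 13, 13, 50), dtype=np.float32)  # stand-in for the model output
    boxes, obj, cls, n = split_raw_output(raw)
    print(boxes.shape, obj.shape, cls.shape, n)
```

If the assumed layout is right, this is still only raw grid data: turning it into final boxes additionally needs anchor decoding, thresholding, and non-maximum suppression.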

I then push the .tflite file onto a Google Coral Edge TPU device and attempt to run it. (Previous .tflite models trained with Google Cloud worked, but I'm now bound to corporate Azure and need to use customvision.ai.) I run the following commands:

```
$ mdt shell
$ export DEMO_FILES="/usr/lib/python3/dist*/edgetpu/demo"
$ export DISPLAY=:0 && edgetpu_detect \
    --source /dev/video1:YUY2:1280x720:20/1 \
    --model ${DEMO_FILES}/model.tflite
```

The model starts to load, but fails with a ValueError:

```
    'This model has a {}.'.format(output_tensors_sizes.size)))
ValueError: Detection model should have 4 output tensors! This model has 1.
```
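The message suggests the Edge TPU detection engine counts the model's output tensors and requires exactly four. The same kind of check can be run on the host before copying the file to the board. The sketch below uses `tf.lite.Interpreter` and its `get_output_details()` method; the helper names `describe_outputs`, `looks_like_ssd_postprocess`, and `inspect_model` are hypothetical, and TensorFlow is imported lazily so the helpers also work with any object that exposes `get_output_details()`.

```python
from typing import List, Tuple


def describe_outputs(interpreter) -> List[Tuple[str, tuple]]:
    """Return (name, shape) for every output tensor of a TFLite interpreter."""
    return [(d["name"], tuple(int(x) for x in d["shape"]))
            for d in interpreter.get_output_details()]


def looks_like_ssd_postprocess(outputs) -> bool:
    """True if there are exactly 4 outputs (boxes, classes, scores, count)."""
    return len(outputs) == 4


def inspect_model(path: str):
    # Imported here so the helpers above can be used without TensorFlow installed.
    import tensorflow as tf
    interpreter = tf.lite.Interpreter(model_path=path)
    interpreter.allocate_tensors()
    outputs = describe_outputs(interpreter)
    for name, shape in outputs:
        print(name, shape)
    print("has 4 outputs:", looks_like_ssd_postprocess(outputs))
    return outputs
```

Calling `inspect_model("model.tflite")` on the Custom Vision export would be expected to list a single output of shape (1, 13, 13, 50), which matches the "This model has 1" part of the error.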

What is happening here? How do I reshape my TensorFlow model to meet the device's requirement of 4 output tensors?
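For reference, the four outputs a detection model is expected to have are the ones produced by TensorFlow Lite's `TFLite_Detection_PostProcess` custom op: boxes of shape [1, N, 4] as normalized (ymin, xmin, ymax, xmax), class indices [1, N], scores [1, N], and the number of valid detections [1], all float32. A single [1,13,13,50] grid tensor has to be decoded and post-processed before it can match that layout. The sketch below shows only the final packing step, assuming detections have already been decoded; `pack_detections` and its `max_detections` parameter are hypothetical, and running this on the host does not make the exported .tflite file itself emit four tensors.

```python
import numpy as np


def pack_detections(boxes, class_ids, scores, max_detections=20):
    """Pack decoded detections into the 4-tensor layout of TFLite_Detection_PostProcess.

    boxes: (K, 4) normalized [ymin, xmin, ymax, xmax]
    class_ids: (K,) class indices
    scores: (K,) confidence scores
    """
    boxes = np.asarray(boxes, dtype=np.float32).reshape(-1, 4)
    class_ids = np.asarray(class_ids, dtype=np.float32).reshape(-1)
    scores = np.asarray(scores, dtype=np.float32).reshape(-1)

    order = np.argsort(-scores)[:max_detections]   # highest scores first
    n = len(order)

    # Fixed-size outputs padded with zeros, like the post-processing op.
    out_boxes = np.zeros((1, max_detections, 4), dtype=np.float32)
    out_classes = np.zeros((1, max_detections), dtype=np.float32)
    out_scores = np.zeros((1, max_detections), dtype=np.float32)
    out_boxes[0, :n] = boxes[order]
    out_classes[0, :n] = class_ids[order]
    out_scores[0, :n] = scores[order]
    num_detections = np.array([n], dtype=np.float32)
    return out_boxes, out_classes, out_scores, num_detections
```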

Edit: the following conversion produces a .tflite model, but it still has only one output:

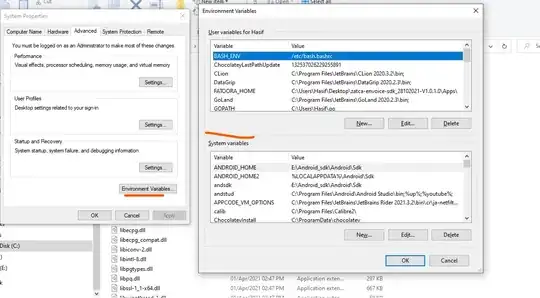

```
python tflite_convert.py \
  --output_file=model.tflite \
  --graph_def_file=saved_model.pb \
  --saved_model_dir="C:\Users\b0588718\AppData\Roaming\Python\Python37\site-packages\tensorflow\lite\python" \
  --inference_type=FLOAT \
  --input_shapes=1,416,416,3 \
  --input_arrays=Placeholder \
  --output_arrays='TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3' \
  --mean_values=128 \
  --std_dev_values=128 \
  --allow_custom_ops \
  --change_concat_input_ranges=false \
  --allow_nudging_weights_to_use_fast_gemm_kernel=true
```
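The `--output_arrays` names above refer to the outputs of a `TFLite_Detection_PostProcess` node, which the TensorFlow Object Detection API inserts into SSD graphs during its TFLite export step; the post does not show whether the Custom Vision graph contains such a node. One way to check is to list the nodes in the input graph. The sketch assumes `saved_model.pb` is a frozen GraphDef (as implied by passing it through `--graph_def_file`); the helper names `graph_nodes` and `postprocess_nodes` are hypothetical.

```python
from typing import Iterable, List, Tuple

POSTPROCESS_OP = "TFLite_Detection_PostProcess"


def postprocess_nodes(nodes: Iterable[Tuple[str, str]]) -> List[str]:
    """Return names of nodes whose op type or name is TFLite_Detection_PostProcess."""
    return [name for name, op in nodes if op == POSTPROCESS_OP or name == POSTPROCESS_OP]


def graph_nodes(pb_path: str) -> List[Tuple[str, str]]:
    """Read (name, op) pairs from a frozen GraphDef file (assumed file format)."""
    import tensorflow as tf  # lazy import; only needed when reading a real file
    graph_def = tf.compat.v1.GraphDef()
    with open(pb_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    return [(node.name, node.op) for node in graph_def.node]
```

Usage would be `postprocess_nodes(graph_nodes("saved_model.pb"))`; an empty list would indicate the graph has no post-processing node for those output names to refer to.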