I have built and tested two convolutional neural network models (VGG-16 and a 3-layer CNN) to classify lung CT scans for COVID-19.

Prior to classification, I performed image segmentation via k-means clustering on the images to try to improve classification performance.

The segmented images look like this:

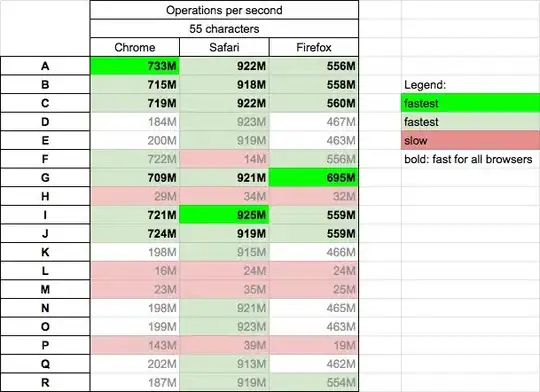
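For readers unfamiliar with the segmentation step, here is a minimal sketch of intensity-based k-means on a single grayscale image. It is an illustration under assumptions, not necessarily the exact pipeline used here: the function name `kmeans_segment`, the choice of `k=3`, the evenly spaced initial centers, and the toy image are all hypothetical.

```python
import numpy as np

def kmeans_segment(image, k=3, n_iter=20):
    """Cluster pixel intensities into k groups and replace each pixel
    with the mean intensity of its cluster (hypothetical helper)."""
    pixels = image.reshape(-1, 1).astype(np.float64)            # shape (N, 1)
    # Assumption: start with centers evenly spaced over the intensity range.
    centers = np.linspace(pixels.min(), pixels.max(), k).reshape(k, 1)
    for _ in range(n_iter):
        # Distance of every pixel to every center, shape (N, k).
        labels = np.abs(pixels - centers.T).argmin(axis=1)
        for j in range(k):
            members = pixels[labels == j]
            if len(members) > 0:                                 # skip empty clusters
                centers[j] = members.mean(axis=0)
    labels = np.abs(pixels - centers.T).argmin(axis=1)
    segmented = centers[labels].reshape(image.shape)
    return segmented, labels.reshape(image.shape)

# Toy 6x6 "scan" with three intensity bands (made-up values).
toy = np.zeros((6, 6))
toy[:, 2:4] = 100.0
toy[:, 4:] = 200.0
segmented, label_map = kmeans_segment(toy, k=3)
```

Note that a segmentation like this discards fine texture inside each cluster, which may matter if the classifier would otherwise rely on that texture.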

I trained and evaluated the VGG-16 model on the segmented images and on the raw images separately. Lastly, I trained and evaluated a 3-layer CNN on the segmented images only. Below are the results for their training/validation loss and accuracy.

For the simple 3-layer CNN, I can clearly see that the model trains well and that it starts to overfit after about 2 epochs. But I don't understand why the validation accuracy of the VGG model does not follow the usual rising, saturating curve; instead it looks like a flat horizontal line, or a line fluctuating around a constant value. Besides, the simple 3-layer CNN seems to perform better. Is this due to vanishing gradients in the VGG model? Or are the images so simple that a deep architecture brings no benefit? I'd appreciate it if you could share your knowledge of this kind of learning behaviour.
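One check that may help interpret the flat curve, as a sketch: if a model outputs the same class for every validation image, its accuracy is pinned at the share of that class in the validation set. The helper names `majority_baseline` and `predicts_single_class` are hypothetical, and the labels and probabilities below are made-up toy values, not my results.

```python
from collections import Counter

def majority_baseline(labels):
    """Accuracy obtained by always predicting the most frequent class."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)

def predicts_single_class(probs, threshold=0.5):
    """True if thresholding the sigmoid outputs yields one class only."""
    predicted = {int(p > threshold) for p in probs}
    return len(predicted) == 1

# Toy validation labels and sigmoid outputs (illustrative values only).
val_labels = [0, 1, 1, 0, 1, 1, 0, 1]
val_probs = [0.61, 0.60, 0.62, 0.61, 0.60, 0.63, 0.61, 0.62]

baseline = majority_baseline(val_labels)
collapsed = predicts_single_class(val_probs)
```

If the flat validation accuracy equals `baseline` and `collapsed` is true for the real predictions, the network has likely stopped discriminating between the classes rather than learned slowly.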

This is the code for the VGG-16 model:

    from tensorflow import keras
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Conv2D, MaxPool2D, Flatten, Dense
    from tensorflow.keras.optimizers import Adam

    # build model (VGG-16 layout, single-channel 256x256 input)
    img_height = 256
    img_width = 256

    model = Sequential()
    # block 1: 2 x conv(64)
    model.add(Conv2D(input_shape=(img_height,img_width,1),filters=64,kernel_size=(3,3),padding="same", activation="relu"))
    model.add(Conv2D(filters=64,kernel_size=(3,3),padding="same", activation="relu"))
    model.add(MaxPool2D(pool_size=(2,2),strides=(2,2)))
    # block 2: 2 x conv(128)
    model.add(Conv2D(filters=128, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(Conv2D(filters=128, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(MaxPool2D(pool_size=(2,2),strides=(2,2)))
    # block 3: 3 x conv(256)
    model.add(Conv2D(filters=256, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(Conv2D(filters=256, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(Conv2D(filters=256, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(MaxPool2D(pool_size=(2,2),strides=(2,2)))
    # block 4: 3 x conv(512)
    model.add(Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(MaxPool2D(pool_size=(2,2),strides=(2,2)))
    # block 5: 3 x conv(512)
    model.add(Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(Conv2D(filters=512, kernel_size=(3,3), padding="same", activation="relu"))
    model.add(MaxPool2D(pool_size=(2,2),strides=(2,2)))
    # classifier head: two 4096-unit dense layers, then a sigmoid output
    model.add(Flatten())
    model.add(Dense(units=4096,activation="relu"))
    model.add(Dense(units=4096,activation="relu"))
    model.add(Dense(units=1, activation="sigmoid"))

    # `learning_rate` replaces the deprecated `lr` argument
    opt = Adam(learning_rate=0.001)
    model.compile(optimizer=opt, loss=keras.losses.binary_crossentropy, metrics=['accuracy'])

And this is the code for the 3-layer CNN:

    # build model (reuses img_height and img_width defined for the VGG-16 model above)
    model2 = Sequential()
    model2.add(Conv2D(32, 3, padding='same', activation='relu',input_shape=(img_height, img_width, 1)))
    model2.add(MaxPool2D())
    model2.add(Conv2D(64, 5, padding='same', activation='relu'))
    model2.add(MaxPool2D())
    model2.add(Flatten())
    model2.add(Dense(128, activation='relu'))
    model2.add(Dense(1, activation='sigmoid'))

    # `learning_rate` replaces the deprecated `lr` argument
    opt = Adam(learning_rate=0.001)
    model2.compile(optimizer=opt, loss=keras.losses.binary_crossentropy, metrics=['accuracy'])
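To put the "too deep for simple images" hypothesis in numbers, here is a rough sketch that counts the trainable parameters of the two architectures above by hand. It assumes 256x256 single-channel input, "same" padding, and a 2x2 pool after each block, as in the code; the helper names (`conv_params`, `dense_params`, `count_params`) are hypothetical.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights plus biases of one Conv2D layer."""
    return (kernel * kernel * in_ch + 1) * out_ch

def dense_params(in_units, out_units):
    """Weights plus biases of one Dense layer."""
    return (in_units + 1) * out_units

def count_params(conv_blocks, dense_units, size=256, in_ch=1):
    """conv_blocks: list of blocks, each a list of (kernel, filters) pairs.
    Every block is assumed to end with one 2x2 max pool, which halves the
    spatial size; 'same' padding keeps the size unchanged inside a block."""
    total = 0
    for block in conv_blocks:
        for kernel, filters in block:
            total += conv_params(kernel, in_ch, filters)
            in_ch = filters
        size //= 2
    units = size * size * in_ch          # length of the Flatten output
    for out_units in dense_units:
        total += dense_params(units, out_units)
        units = out_units
    return total

vgg_blocks = [[(3, 64)] * 2, [(3, 128)] * 2, [(3, 256)] * 3,
              [(3, 512)] * 3, [(3, 512)] * 3]
small_blocks = [[(3, 32)], [(5, 64)]]

vgg_total = count_params(vgg_blocks, [4096, 4096, 1])
small_total = count_params(small_blocks, [128, 1])
print(f"VGG-16: {vgg_total:,} parameters")
print(f"3-layer CNN: {small_total:,} parameters")
```

In both models most of the weights sit in the first Dense layer after Flatten, and the VGG-16 variant has several times more parameters than the small CNN, which is worth keeping in mind when the dataset is small.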

Thank you!