As I understand it, Spark runs one job for each action.

But I often see more than one job triggered for a single action.

I tried to test this with a simple aggregation on a dataset, finding the maximum score in each category (here, the "subject" field).

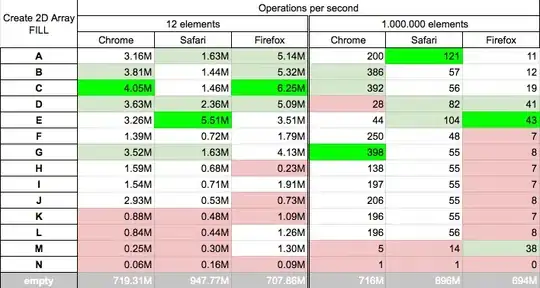

While examining the Spark UI, I can see there are 3 "jobs" executed for the groupBy operation, while I was expecting just one.

Can anyone help me understand why there are 3 jobs instead of just 1?

    students.show(5)

    +----------+--------------+----------+----+-------+-----+-----+
    |student_id|exam_center_id|   subject|year|quarter|score|grade|
    +----------+--------------+----------+----+-------+-----+-----+
    |         1|             1|      Math|2005|      1|   41|    D|
    |         1|             1|   Spanish|2005|      1|   51|    C|
    |         1|             1|    German|2005|      1|   39|    D|
    |         1|             1|   Physics|2005|      1|   35|    D|
    |         1|             1|   Biology|2005|      1|   53|    C|
    |         1|             1|Philosophy|2005|      1|   73|    B|

    // Task: find the highest score in each subject
    val highestScores = students.groupBy("subject").max("score")
    highestScores.show(10)

    +----------+----------+
    |   subject|max(score)|
    +----------+----------+
    |   Spanish|        98|
    |Modern Art|        98|
    |    French|        98|
    |   Physics|        98|
    | Geography|        98|
    |   History|        98|
    |   English|        98|
    |  Classics|        98|
    |      Math|        98|
    |Philosophy|        98|
    +----------+----------+
    only showing top 10 rows


    == Physical Plan ==
    *(2) HashAggregate(keys=[subject#12], functions=[max(score#15)])
    +- Exchange hashpartitioning(subject#12, 1)
       +- *(1) HashAggregate(keys=[subject#12], functions=[partial_max(score#15)])
          +- *(1) FileScan csv [subject#12,score#15] Batched: false, Format: CSV, Location: InMemoryFileIndex[file:/C:/lab/SparkLab/files/exams/students.csv], PartitionFilters: [], PushedFilters: [], ReadSchema: struct<subject:string,score:int>
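
In case it helps anyone reproduce this without the Spark UI, here is a minimal sketch of how the job count for the action could be checked in code. It tags the action with a job group and asks the status tracker for the job IDs in that group. The object name `JobCountCheck`, the method `countJobs`, and the group id `"highest-scores"` are my own placeholders, and the data is built in memory from the sample rows shown earlier rather than read from the CSV file, so the count it prints may well differ from the 3 jobs I see in the UI.

```scala
import org.apache.spark.sql.SparkSession

object JobCountCheck {
  // Runs the aggregation from the question under a job group and returns
  // how many jobs the status tracker reports for that group.
  def countJobs(spark: SparkSession): Int = {
    import spark.implicits._

    // Assumption: sample rows copied from the students.show output
    // (subject and score only), not the full students.csv file.
    val students = Seq(
      ("Math", 41), ("Spanish", 51), ("German", 39),
      ("Physics", 35), ("Biology", 53), ("Philosophy", 73)
    ).toDF("subject", "score")

    val group = "highest-scores" // hypothetical group id; any unique string works
    spark.sparkContext.setJobGroup(group, "groupBy(subject).max(score).show")

    students.groupBy("subject").max("score").show(10)

    // Job status is updated asynchronously, so this pause is only a crude way
    // to let the tracker catch up; the count may still lag on a busy driver.
    Thread.sleep(1000)
    val jobIds = spark.sparkContext.statusTracker.getJobIdsForGroup(group)
    spark.sparkContext.clearJobGroup()
    jobIds.length
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("job-count-check")
      .master("local[*]")
      .getOrCreate()
    try println(s"Jobs triggered by show: ${countJobs(spark)}")
    finally spark.stop()
  }
}
```

Tagging the action with a job group keeps jobs from earlier steps (such as reading the file) out of the count, which is why I chose it over simply counting every job in the application.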