I am using Laravel Horizon with Redis and am trying to throttle a queue. The jobs call an external API that has a rate limit of 100 requests per minute, and I need to make about 700 requests. I have it set up so that each job I add to the queue performs exactly one API call, so if I throttle the queue I should be able to stay within the limit. For some reason no throttling happens on my server: the workers blow through the queue, which of course triggers many API errors. The same throttle works locally.
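To make the numbers concrete, here is a small sketch of the arithmetic. It assumes a fixed-window limiter (a full batch is allowed at the start of each window) and one API call per job; `secondsUntilLastBatch` is a hypothetical helper written for illustration, not part of Laravel or any package.

```php
<?php

/**
 * Seconds that must pass before the final batch of jobs may start,
 * assuming at most $allows jobs per window of $every seconds.
 */
function secondsUntilLastBatch(int $jobs, int $allows, int $every): int
{
    // Number of windows needed to fit every job.
    $windows = (int) ceil($jobs / $allows);

    // The first window starts immediately, so only the later windows add delay.
    return max(0, $windows - 1) * $every;
}

echo secondsUntilLastBatch(700, 100, 60), "\n"; // at the API's own limit
echo secondsUntilLastBatch(700, 75, 60), "\n";  // at a more conservative 75 per minute
```

So a correctly throttled queue should take several minutes to drain, not the few seconds I see on the server.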

I originally tried to throttle as described in Laravel's queue documentation but could only get it to work locally, so I switched to the laravel-queue-rate-limit package on GitHub. As per the README, I added the following to my queue.php config file:

    'rateLimits' => [
        'default' => [ // queue name
            'allows' => 75, // 75 jobs
            'every' => 60 // per 60 seconds
        ]
    ],
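For context, the rate limiting described in Laravel's queue documentation is built on `Redis::throttle`. Below is a minimal sketch of a job that uses it, not my exact code: the class name `CallExternalApi`, the key `external-api`, and the 10-second release delay are assumptions chosen for illustration.

```php
<?php

namespace App\Jobs;

use Illuminate\Bus\Queueable;
use Illuminate\Contracts\Queue\ShouldQueue;
use Illuminate\Foundation\Bus\Dispatchable;
use Illuminate\Queue\InteractsWithQueue;
use Illuminate\Support\Facades\Redis;

// Hypothetical job: performs a single call to the rate-limited API.
class CallExternalApi implements ShouldQueue
{
    use Dispatchable, InteractsWithQueue, Queueable;

    public function handle()
    {
        // The key is shared by every worker that talks to the same Redis
        // instance, so the limit applies across all Horizon processes.
        Redis::throttle('external-api') // assumed key name
            ->allow(75)                 // at most 75 executions...
            ->every(60)                 // ...per 60 seconds
            ->then(function () {
                // Perform the one API call for this job here.
            }, function () {
                // Limit reached: put the job back on the queue and retry later.
                return $this->release(10); // assumed delay in seconds
            });
    }
}
```

Releasing a job this way still counts as an attempt, so the worker's `tries` value has to be high enough for a job to be released several times before it finally runs.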

For some reason the throttling works properly when I run it on my local Ubuntu environment, but it does not work on my server (also Ubuntu). On the server it just blows through the queue as if there is no throttle in place.

Is there something I am doing wrong, or is there a better way to handle a rate-limited external API?

Edit 1:

config/horizon.php

    'environments' => [
        'production' => [
            'supervisor-1' => [
                'connection' => 'redis',
                'queue' => ['default'],
                'balance' => 'simple',
                'processes' => 3,
                'tries' => 100,
            ],
        ],
    ],

One of the handle() methods that dispatches most of the jobs:

    public function handle()
    {
        $updatedPlayerIds = [];

        foreach ($this->players as $player) {
            $playerUpdate = Player::updateOrCreate(
                [
                    'id' => $player['id'],
                ],
                [
                    'faction_id' => $player['faction_id'],
                    'name' => $player['name'],
                ]
            );

            // Store the IDs of the records that were updated
            $updatedPlayerIds[] = $playerUpdate->id;

            // If it's a new player or the player was last updated a while ago, then get new data!
            if ($playerUpdate->wasRecentlyCreated ||
                $playerUpdate->last_complete_update_at == null ||
                Carbon::parse($playerUpdate->last_complete_update_at)->diffInHours(Carbon::now()) >= 6) {
                Log::info("Updating '{$playerUpdate->name}' with new data", ['playerUpdate' => $playerUpdate]);
                UpdatePlayer::dispatch($playerUpdate);
            } else {
                // Log::debug("Player data fresh, no update performed", ['playerUpdate' => $playerUpdate]);
            }
        }

        // Delete any players of this faction whose IDs were not updated via the API
        Player::where('faction_id', $this->faction->id)->whereNotIn('id', $updatedPlayerIds)->delete();
    }

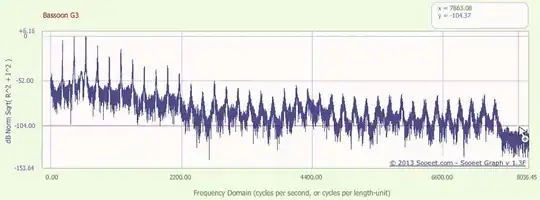

Also, here is a rough diagram I made to illustrate how several of my job classes end up being dispatched in a short amount of time, especially ones like UpdatePlayer, which is often dispatched 700 times.