I have a DataFrame of time series with second resolution, which I save into a CSV file:

import pandas as pd

dr = pd.date_range('01/01/2020 9:00', '01/01/2020 9:10', freq='1s')

df = pd.DataFrame(index=dr, data=range(len(dr)))

df.to_csv('some_data.csv', header=False)

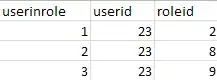

I can then open it in Excel and everything looks good:

If I then save the file in Excel (without changing anything), on reopening the seconds are rounded down to 0:

Looking at the Excel-saved file in notepad shows that the seconds have been lost:

1/1/2020 9:00,0

1/1/2020 9:00,1

1/1/2020 9:00,2

...

The change occurs when saving as a copy or overwriting. And oddly, if you keep the original document open after saving it, you will still see the seconds preserved, until you close and reopen.

In context, I am writing files for other (non-Python-using) users who will likely be using Excel to interact with their data. And even resizing a column prompts for a save, so I find it very likely that they could be inadvertently losing data.

While I used Python to create this example, I have seen the issue with timestamps written in other languages as well.

Is there a better way to be logging time series data that prevents this? Or is there a fix in Excel (preferably a permanent option or setting) that I could share with users who get data in this format?