I'm running a vanilla AWS Lambda function to count the number of messages in my RabbitMQ task queue and publish the total as a custom CloudWatch metric:

    import boto3
    from botocore.vendored import requests

    cloudwatch_client = boto3.client('cloudwatch')


    def get_queue_count(user="user", password="password", domain="<my domain>/api/queues"):
        url = f"https://{user}:{password}@{domain}"
        res = requests.get(url)
        message_count = 0
        for queue in res.json():
            message_count += queue["messages"]
        return message_count


    def lambda_handler(event, context):
        metric_data = [{'MetricName': 'RabbitMQQueueLength', "Unit": "None", 'Value': get_queue_count()}]
        print(metric_data)
        response = cloudwatch_client.put_metric_data(MetricData=metric_data, Namespace="RabbitMQ")
        print(response)

A test run returns the following output:

Response:

    {
      "errorMessage": "2020-06-30T19:50:50.175Z d3945a14-82e5-42e5-b03d-3fc07d5c5148 Task timed out after 15.02 seconds"
    }

Request ID:

    "d3945a14-82e5-42e5-b03d-3fc07d5c5148"

Function logs:

    START RequestId: d3945a14-82e5-42e5-b03d-3fc07d5c5148 Version: $LATEST
    /var/runtime/botocore/vendored/requests/api.py:72: DeprecationWarning: You are using the get() function from 'botocore.vendored.requests'. This dependency was removed from Botocore and will be removed from Lambda after 2021/01/30. https://aws.amazon.com/blogs/developer/removing-the-vendored-version-of-requests-from-botocore/. Install the requests package, 'import requests' directly, and use the requests.get() function instead.
      DeprecationWarning
    [{'MetricName': 'RabbitMQQueueLength', 'Value': 295}]
    END RequestId: d3945a14-82e5-42e5-b03d-3fc07d5c5148

The logs show that the request to the RabbitMQ API succeeds: the metric data is printed, but the response from put_metric_data never is. The function hangs when it tries to post the metric to CloudWatch.

The Lambda function uses the IAM role put-custom-metric, which has the policies recommended here attached, plus CloudWatchFullAccess for good measure.

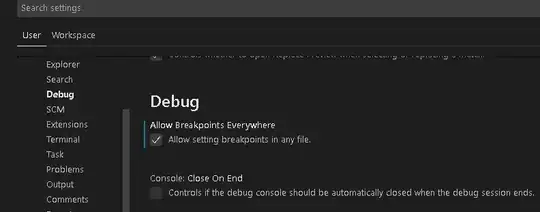

Resources on my internal load balancer, where my RabbitMQ server lives, are protected by a VPN, so I have to associate this function with the proper VPC and security group. Here's how it's set up right now (I know this is working, because otherwise the communication with RabbitMQ would fail):

I read this post where multiple contributors suggest increasing the function memory and timeout settings. I've done both of these, and the timeout persists.

Running the same code locally creates the metric in CloudWatch in under 5 seconds without any issue.
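One check that might help narrow this down is whether the CloudWatch endpoint is reachable at all from inside the VPC, independent of boto3 and IAM. The following is a minimal sketch using only the standard library. The region (us-east-1), the `can_reach` helper, and the `connectivity_check_handler` name are assumptions made for illustration and are not part of my function.

```python
import socket

# Assumption: the function runs in us-east-1; substitute the real region.
CLOUDWATCH_HOST = "monitoring.us-east-1.amazonaws.com"


def can_reach(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and connect timeouts.
        return False


def connectivity_check_handler(event, context):
    # Hypothetical handler: logs whether the endpoint can be reached,
    # failing fast instead of running into the Lambda timeout.
    reachable = can_reach(CLOUDWATCH_HOST)
    print(f"{CLOUDWATCH_HOST}:443 reachable: {reachable}")
    return {"reachable": reachable}
```

If this reports that the endpoint is unreachable from inside the Lambda while the same check succeeds locally, that would point toward network routing from the VPC rather than the IAM role, though it would not by itself prove the cause.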