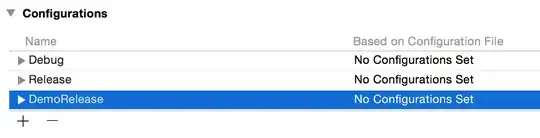

My question is related to this post, and I have also tried to implement the solutions from here and here. Below I also give my attempt at implementing the code according to these solutions (my implementation/output is not correct). My code is as follows, using the paper, rock, scissors data:

```python
!wget --no-check-certificate \
    https://storage.googleapis.com/laurencemoroney-blog.appspot.com/rps.zip \
    -O /tmp/rps.zip

!wget --no-check-certificate \
    https://storage.googleapis.com/laurencemoroney-blog.appspot.com/rps-test-set.zip \
    -O /tmp/rps-test-set.zip

import os
import zipfile

local_zip = '/tmp/rps.zip'
zip_ref = zipfile.ZipFile(local_zip, 'r')
zip_ref.extractall('/tmp/')
zip_ref.close()

local_zip = '/tmp/rps-test-set.zip'
zip_ref = zipfile.ZipFile(local_zip, 'r')
zip_ref.extractall('/tmp/')
zip_ref.close()

rock_dir = os.path.join('/tmp/rps/rock')
paper_dir = os.path.join('/tmp/rps/paper')
scissors_dir = os.path.join('/tmp/rps/scissors')

print('total training rock images:', len(os.listdir(rock_dir)))
print('total training paper images:', len(os.listdir(paper_dir)))
print('total training scissors images:', len(os.listdir(scissors_dir)))

rock_files = os.listdir(rock_dir)
print(rock_files[:10])
paper_files = os.listdir(paper_dir)
print(paper_files[:10])
scissors_files = os.listdir(scissors_dir)
print(scissors_files[:10])

import tensorflow as tf
import keras_preprocessing
from keras_preprocessing import image
from keras_preprocessing.image import ImageDataGenerator

TRAINING_DIR = "/tmp/rps/"
training_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,
    width_shift_range=0.2,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True,
    fill_mode='nearest')

VALIDATION_DIR = "/tmp/rps-test-set/"
validation_datagen = ImageDataGenerator(rescale=1./255)

train_generator = training_datagen.flow_from_directory(
    TRAINING_DIR,
    target_size=(150, 150),
    class_mode='categorical',
    batch_size=126
)

validation_generator = validation_datagen.flow_from_directory(
    VALIDATION_DIR,
    target_size=(150, 150),
    class_mode='categorical',
    batch_size=126
)

model = tf.keras.models.Sequential([
    # Note the input shape is the desired size of the image 150x150 with 3 bytes color
    # This is the first convolution
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu', input_shape=(150, 150, 3)),
    tf.keras.layers.MaxPooling2D(2, 2),
    # The second convolution
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # The third convolution
    tf.keras.layers.Conv2D(128, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # The fourth convolution
    tf.keras.layers.Conv2D(128, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # Flatten the results to feed into a DNN
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dropout(0.5),
    # 512 neuron hidden layer
    tf.keras.layers.Dense(512, activation='relu'),
    tf.keras.layers.Dense(3, activation='softmax')
])

model.summary()

model.compile(loss='categorical_crossentropy', optimizer='rmsprop',
              metrics=[tf.keras.metrics.Recall()])

history = model.fit(train_generator, epochs=25, steps_per_epoch=20,
                    validation_data=validation_generator, verbose=1,
                    validation_steps=3)

model.save("rps.h5")
```

The output shows that the model fits quite well. I now want to test it against completely new data (note that, unfortunately, this set is named "validation" data):

```python
import shutil
import glob
import numpy as np
import os

!wget --no-check-certificate \
    https://storage.googleapis.com/laurencemoroney-blog.appspot.com/rps-validation.zip \
    -O /tmp/rps-validation-set.zip

local_zip = '/tmp/rps-validation-set.zip'
zip_ref = zipfile.ZipFile(local_zip, 'r')
zip_ref.extractall('/tmp/rps-validation-set')
zip_ref.close()

os.mkdir("/tmp/rps-validation-set/paper/")
os.mkdir("/tmp/rps-validation-set/rock/")
os.mkdir("/tmp/rps-validation-set/scissors/")

dest_dir = "/tmp/rps-validation-set/paper"
for file in glob.glob(r'/tmp/rps-validation-set/paper*.png'):
    print(file)
    shutil.move(file, dest_dir)

dest_dir = "/tmp/rps-validation-set/rock"
for file in glob.glob(r'/tmp/rps-validation-set/rock*.png'):
    print(file)
    shutil.move(file, dest_dir)

dest_dir = "/tmp/rps-validation-set/scissors"
for file in glob.glob(r'/tmp/rps-validation-set/scissors*.png'):
    print(file)
    shutil.move(file, dest_dir)

!rm -r /tmp/rps-validation-set/.ipynb_checkpoints

new_DIR = "/tmp/rps-validation-set/"
new_datagen = ImageDataGenerator(rescale=1./255)
new_generator = new_datagen.flow_from_directory(
    new_DIR,
    target_size=(150, 150),
    class_mode='categorical',
    batch_size=126
)

print(new_generator.class_indices)
print(new_generator.classes)
print(new_generator.num_classes)
print(train_generator.class_indices)
print(train_generator.classes)
print(train_generator.num_classes)

model.evaluate(new_generator)
classes = model.predict(new_generator)
model.predict(new_generator)
np.argmax(model.predict(new_generator), axis=-1)
print(classes)

# output from here on:
print("model evaluate output ", model.evaluate(new_generator))
print("train_generator classes: ", train_generator.classes)
print("new_generator classes: ", new_generator.classes)
print("train_generator class indices: ", train_generator.class_indices)
print("new_generator class indices: ", new_generator.class_indices)
print("model prediction ", model.predict_classes(new_generator))
print("actual values/labels ", new_generator.labels)
print("filenames ", new_generator.filenames)

print("\n manually predict first 4 single paper images: \n ")
for path in ["/tmp/rps-validation-set/paper/paper-hires1.png",
             "/tmp/rps-validation-set/paper/paper-hires2.png",
             "/tmp/rps-validation-set/paper/paper1.png",
             "/tmp/rps-validation-set/paper/paper2.png"]:
    img = image.load_img(path, target_size=(150, 150))
    x = image.img_to_array(img)
    x = np.expand_dims(x, axis=0)
    images = np.vstack([x])
    classes = model.predict(images, batch_size=10)
    print(path)
    print(classes)

print("\n trying different solution \n")
predictions = model.predict(new_generator)
predictions = np.argmax(predictions, axis=-1)  # multiple categories
label_map = (train_generator.class_indices)
label_map = dict((v, k) for k, v in label_map.items())  # flip k,v
predictions = [label_map[k] for k in predictions]
print("predictions adjusted ", predictions)
print("actual values ", new_generator.labels)
```

The relevant output for my problem is as follows (everything from `# output from here on:` onwards):

First I check the performance with `model.evaluate`. The high recall and low loss show me that the predictions are (almost) perfect, so I would expect no difference between the original values/labels and the predicted classes.

I now want to check this, and visualize it, by showing the predictions against the actual values/labels of the input.

There are several things I do not understand:

1.)

Each time I run `print("model prediction ", model.predict_classes(new_generator))`, i.e. `model.predict_classes`, the output is different.

Why? I have a fixed model and I feed in the same values, so I would expect the predictions to be stable. The same holds for `print(model.predict(new_generator))`: every time I run it, I get different output.
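One way to see how the output order can change while the model does not: `flow_from_directory` defaults to `shuffle=True`, and the iterator draws a new random permutation of the files each time a new pass starts, so each call to `predict` sees the images in a different order. The sketch below uses plain NumPy, a stand-in deterministic "model" and hypothetical random features (not the real generator or network), to show that two shuffled passes give identical per-image results once their rows are put back into file order.

```python
import numpy as np

rng = np.random.default_rng()

# Hypothetical stand-in for 6 images in file order: one random feature row each.
features = np.random.default_rng(0).normal(size=(6, 3))


def fake_model(batch):
    """Deterministic stand-in for model.predict: row-wise softmax of the inputs."""
    exp = np.exp(batch - batch.max(axis=1, keepdims=True))
    return exp / exp.sum(axis=1, keepdims=True)


def one_pass(shuffle):
    """Mimic one pass over a generator: optionally permute the file order,
    then return the order used and the predictions in that order."""
    order = rng.permutation(len(features)) if shuffle else np.arange(len(features))
    return order, fake_model(features[order])


def to_file_order(order, preds):
    """Undo the permutation so that row j belongs to file j again."""
    restored = np.empty_like(preds)
    restored[order] = preds
    return restored


order_a, preds_a = one_pass(shuffle=True)
order_b, preds_b = one_pass(shuffle=True)

# The raw rows of two shuffled passes generally come back in different orders...
print(np.allclose(preds_a, preds_b))
# ...but every image gets the same prediction once the order is undone.
print(np.allclose(to_file_order(order_a, preds_a), to_file_order(order_b, preds_b)))  # True

# Without shuffling, the rows already line up with the file order (and the labels).
order_c, preds_c = one_pass(shuffle=False)
print(np.allclose(preds_c, fake_model(features)))  # True
```

In the question's code, the analogous change would be passing `shuffle=False` to `new_datagen.flow_from_directory(...)`; the rows returned by `model.predict(new_generator)` then follow the order of `new_generator.filenames` and `new_generator.classes`. `model.evaluate` is not affected by shuffling, because each batch keeps its images and labels together.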

2.) The predictions do not match the actual values. I do not understand why, or how I can achieve what I want: to match the predictions with the corresponding values and check where the differences are. I thought that maybe the order is different, and indeed this post mentions it and provides two solutions. The solution of removing the rescaling in the image generator is not a good one in my eyes. I tried to adapt the following proposed solution:

```python
import numpy as np

predictions = model.predict_generator(self.test_generator)
predictions = np.argmax(predictions, axis=-1)  # multiple categories
label_map = (train_generator.class_indices)
label_map = dict((v, k) for k, v in label_map.items())  # flip k,v
predictions = [label_map[k] for k in predictions]
```

to my code (see the "trying different solution" code block):

Output:

But this is wrong, or my implementation of it is wrong.
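The label-mapping step itself (inverting `class_indices` and indexing it with the `argmax`) is fine on its own; a comparison against `new_generator.labels` is only meaningful when both are in the same order. A minimal NumPy sketch, assuming the probabilities were produced with `shuffle=False` so that row *i* belongs to `filenames[i]`; all probabilities, file names and labels below are made up for illustration.

```python
import numpy as np

# Hypothetical values standing in for new_generator.class_indices,
# new_generator.filenames, new_generator.classes and model.predict(...).
class_indices = {'paper': 0, 'rock': 1, 'scissors': 2}
filenames = ['paper/paper1.png', 'rock/rock1.png',
             'scissors/scissors1.png', 'scissors/scissors2.png']
true_labels = np.array([0, 1, 2, 2])
probs = np.array([
    [0.98, 0.01, 0.01],
    [0.02, 0.95, 0.03],
    [0.10, 0.05, 0.85],
    [0.60, 0.30, 0.10],  # deliberately wrong prediction
])

# Invert {name: index} into {index: name}.
index_to_name = {v: k for k, v in class_indices.items()}

pred_labels = np.argmax(probs, axis=-1)
pred_names = [index_to_name[i] for i in pred_labels]

# Only valid because probs, filenames and true_labels share one order.
accuracy = np.mean(pred_labels == true_labels)
mismatches = [(f, index_to_name[t], p)
              for f, t, p in zip(filenames, true_labels, pred_names)
              if index_to_name[t] != p]

print(pred_names)  # ['paper', 'rock', 'scissors', 'paper']
print(accuracy)    # 0.75
print(mismatches)  # [('scissors/scissors2.png', 'scissors', 'paper')]
```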

I also tried the following from this thread:

```python
from glob import glob

class_names = glob("/tmp/rps-validation-set/*")  # Reads all the folders in which images are present
class_names = sorted(class_names)  # Sorting them
name_id_map = dict(zip(class_names, range(len(class_names))))
```

But this does not help.
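The snippet above keys its map by full paths such as `/tmp/rps-validation-set/paper`, whereas Keras' `class_indices` is keyed by bare subdirectory names. The Keras documentation for `flow_from_directory` states that, when `classes` is not given, the classes are inferred from the subdirectories and indexed in alphanumeric order, so a mapping like this can only reproduce what `class_indices` already contains; it does not change the row order of the predictions. A stdlib-only sketch of that inference, in a temporary directory; `infer_class_indices` is a hypothetical helper that, as far as I know, mirrors the directory scan in `keras_preprocessing`, which also counts hidden folders such as `.ipynb_checkpoints` as classes.

```python
import os
import tempfile


def infer_class_indices(root):
    """Hypothetical helper: map each subdirectory name under `root` to an index
    in sorted (alphanumeric) order, ignoring plain files."""
    names = sorted(
        entry for entry in os.listdir(root)
        if os.path.isdir(os.path.join(root, entry))
    )
    return {name: idx for idx, name in enumerate(names)}


with tempfile.TemporaryDirectory() as root:
    # Create the class folders in a non-sorted order on purpose.
    for name in ["scissors", "paper", "rock"]:
        os.mkdir(os.path.join(root, name))
    # A stray file at the top level does not become a class.
    open(os.path.join(root, "notes.txt"), "w").close()
    indices = infer_class_indices(root)

print(indices)  # {'paper': 0, 'rock': 1, 'scissors': 2}
```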

When I manually predict the first 4 images, the output of `model.predict` is different, and it is correct: `[1. 0. 0.]` is the correct output for all 4 images. So when I run `model.predict` / `model.predict_classes` on a single image, given by its filename, the result is correct. However, when I run it on an image data generator, the output seems to be shuffled somehow?
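One difference between the two code paths that is worth keeping consistent: the generators apply `rescale=1./255`, while the manual single-image code passes the raw 0 to 255 values from `img_to_array` to `model.predict`. `ImageDataGenerator` applies `rescale` by multiplying the data by that factor. A minimal NumPy sketch of preparing one image the same way; a random array stands in for the loaded image, and `preprocess_single` is a hypothetical helper, not part of Keras.

```python
import numpy as np


def preprocess_single(img_array, scale=1.0 / 255):
    """Hypothetical helper: turn one HxWxC image array (values 0..255) into a
    batch of one, multiplied by the same factor as ImageDataGenerator(rescale=1./255)."""
    x = np.asarray(img_array, dtype="float32") * scale
    return np.expand_dims(x, axis=0)  # shape (1, H, W, C)


# Stand-in for image.img_to_array(image.load_img(path, target_size=(150, 150)))
fake_img = np.random.default_rng(0).integers(0, 256, size=(150, 150, 3))

batch = preprocess_single(fake_img)
print(batch.shape)                              # (1, 150, 150, 3)
print(batch.min() >= 0.0, batch.max() <= 1.0)   # True True
```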

3.) What I do not understand is the difference between `model.predict_classes` and `model.predict`, i.e. these two:

```python
print(model.predict_classes(new_generator))
print(model.predict(new_generator))
```

The predicted probabilities do not match the predicted classes. For example, already for the first entry the largest probability is 9.99e-01, yet the predicted class is 1. For the second entry it does match: again the largest probability is 9.99e-01, it corresponds to the last class, and the predicted class is indeed 2. So it seems everything is completely shuffled. Since the first images belong to the first class, so `[1 0 0]` is correct, I would expect the highest probability to be in the first class (corresponding to the value 0) (it is not) and the predicted class to be the first class (corresponding to the value 0) (it is not).
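For a model with a softmax output layer, `predict_classes` amounts to the `argmax` of `predict`: the deprecation warning TensorFlow prints for `predict_classes` recommends `np.argmax(model.predict(x), axis=-1)` for such multi-class models, and the method was removed in later TensorFlow 2.x releases. In the two lines above, however, each call makes its own pass over a shuffled generator, so row *i* of one output need not be the same image as row *i* of the other. A NumPy sketch with made-up probabilities showing that deriving both the classes and their probabilities from a single `predict` result keeps them consistent:

```python
import numpy as np

# Made-up output of ONE call to model.predict(...): one row per image.
probs = np.array([
    [9.99e-01, 5.0e-04, 5.0e-04],
    [1.0e-04, 2.0e-04, 9.997e-01],
    [3.0e-03, 9.96e-01, 1.0e-03],
])

# Equivalent of predict_classes for a softmax output: index of the largest probability.
classes = np.argmax(probs, axis=-1)

# Probability of the chosen class, read from the same rows.
confidence = probs[np.arange(len(probs)), classes]

for row, (c, p) in enumerate(zip(classes, confidence)):
    print(row, c, round(float(p), 4))
# 0 0 0.999
# 1 2 0.9997
# 2 1 0.996
```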

4.) When I run

```python
images = np.vstack([x])
classes = model.predict(images, batch_size=10)
classes2 = model.predict_classes(images, batch_size=10)
print(path)
print(classes)
print(classes2)
```

I get

How do I get the probabilities? So something like
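For a single image, the array that `model.predict` returns from a softmax output layer is already the vector of per-class probabilities, in `class_indices` order; `predict_classes` only reduces it to the index of the largest entry. A small sketch with a hypothetical probability row (not real model output) that labels each probability with its class name and sets NumPy to fixed-point printing:

```python
import numpy as np

class_indices = {'paper': 0, 'rock': 1, 'scissors': 2}  # as in train_generator.class_indices
# Hypothetical result of model.predict(images) for one image: shape (1, 3).
probs = np.array([[0.91, 0.06, 0.03]], dtype="float32")

index_to_name = {v: k for k, v in class_indices.items()}
labelled = {index_to_name[i]: round(float(p), 4) for i, p in enumerate(probs[0])}
predicted = index_to_name[int(np.argmax(probs[0]))]

np.set_printoptions(precision=4, suppress=True)  # fixed-point, 4 decimals (global setting)
print(probs)
print(labelled)   # {'paper': 0.91, 'rock': 0.06, 'scissors': 0.03}
print(predicted)  # paper
```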