I'm also a new contributor, so please forgive any misleading or incorrect information.

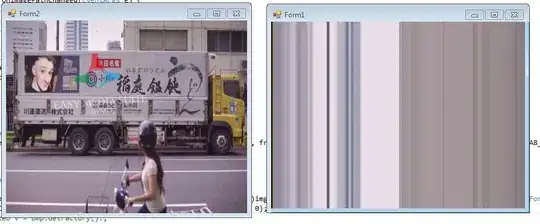

I have tried to extract the text from your image and the results were pretty good.here is the output image with bounding boxes

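I used the `image_to_data` function instead of `image_to_string` because it also returns a bounding box and a confidence value for each recognized word. Since the rows of your grid contain no spaces, each "word" here is one full row of letters.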
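If your rows did contain spaces, you could still get one confidence value per line by grouping the words on the `block_num`, `par_num` and `line_num` columns that `image_to_data` returns. Below is a minimal sketch, assuming the dict layout produced with `output_type=Output.DICT`; the helper name `lines_with_confidence` and the sample values are made up for illustration, so it runs without Tesseract installed.

```python
from collections import defaultdict


def lines_with_confidence(data):
    """Group the word entries of an image_to_data-style dict into text lines.

    Returns a list of (line_text, mean_confidence) tuples in reading order.
    """
    lines = defaultdict(list)
    for i, text in enumerate(data["text"]):
        conf = float(data["conf"][i])  # conf may arrive as str, int or float
        # Page/block/paragraph/line entries carry conf == -1 and no text
        if conf == -1 or not text.strip():
            continue
        key = (data["block_num"][i], data["par_num"][i], data["line_num"][i])
        lines[key].append((text, conf))

    result = []
    for key in sorted(lines):  # sort by (block, paragraph, line)
        words = lines[key]
        line_text = " ".join(word for word, _ in words)
        mean_conf = sum(c for _, c in words) / len(words)
        result.append((line_text, mean_conf))
    return result


# Made-up sample in the shape of image_to_data(..., output_type=Output.DICT);
# with a real image you would pass the `ocr` dict instead.
sample = {
    "text":      ["", "", "HOLA", "MUNDO", "", "X"],
    "conf":      [-1, -1, 95.0, 85.0, -1, 30.0],
    "block_num": [1, 1, 1, 1, 1, 1],
    "par_num":   [1, 1, 1, 1, 1, 1],
    "line_num":  [0, 1, 1, 1, 2, 2],
}
print(lines_with_confidence(sample))  # [('HOLA MUNDO', 90.0), ('X', 30.0)]
```

A low mean confidence on a line is a quick hint that it is worth checking by hand.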

Output:

```
QCCOVARDECRATOBHÍv
CHIBOVZINREVÁVRWTOI   # recognized an extra O at the end
VULTOOGCONVOIBORGO
RVMARABILLARRBAGAZ
EARAVARGOQONGUESUSA
V
BSVKOZNAVARAGVÚCTL
AQCOOLLEMREVVCEGAO
ZUVAGOLEBONNABALXL
REODORMOBILEJAHABACQ
EIBBTAODORVICAAOSVR
VRBAONVTVFORÑEBIER
OO0ODEGREELOVCAVRDLA
GBCBTOTBLEOOATXMIAQ
SVALAVANELVOILOVNJ
EOLREBELAROSBTLVAS
VASTORETAVALEARTYW
ADOVNGRAVATAMJREÓ
Í
```

Still, there are a few misrecognitions, such as the Spanish U in the 5th line of the image, and Tesseract even added a few extra characters.
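One way to spot rows where characters were added or dropped is to compare row lengths, assuming every row of the letter grid has the same width. The sketch below uses a hypothetical helper, `check_grid` (not part of pytesseract): it drops one-character stray rows such as the lone `V` and `Í`, takes the most common row length as the expected width, and reports the rows that deviate from it.

```python
import unicodedata
from collections import Counter


def check_grid(rows, min_len=2):
    """Heuristic check for OCR'd word-search rows.

    Assumes every row of the grid should have the same width. Rows shorter
    than `min_len` are treated as stray characters and dropped; the most
    common remaining length is taken as the expected width.
    Returns (kept_rows, expected_width, indices of kept rows with another width).
    """
    # NFC so that accented letters like "Á" count as a single character
    rows = [unicodedata.normalize("NFC", row) for row in rows]
    kept = [row for row in rows if len(row) >= min_len]
    if not kept:
        return [], 0, []
    expected = Counter(len(row) for row in kept).most_common(1)[0][0]
    suspicious = [i for i, row in enumerate(kept) if len(row) != expected]
    return kept, expected, suspicious


rows = ["VULTOOGCONVOIBORGO", "REODORMOBILEJAHABACQ", "RVMARABILLARRBAGAZ",
        "V", "SVALAVANELVOILOVNJ"]
kept, width, suspicious = check_grid(rows)
print(width, suspicious)  # 18 [1]
```

This only tells you which rows to re-check; it cannot tell which character in a flagged row is the extra one.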

Here is the Python code:

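```python
import cv2
import pytesseract
from pytesseract import Output

# Assumed preprocessing: load the image and binarize it with Otsu's threshold
# ("image.png" is a placeholder path)
img = cv2.imread("image.png")
img_copy = img.copy()
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
_, otsu = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)

# OEM 3 = default OCR engine, PSM 6 = assume a single uniform block of text
custom_oem_psm_config = r'--oem 3 --psm 6'

ocr = pytesseract.image_to_data(otsu, output_type=Output.DICT,
                                config=custom_oem_psm_config, lang='spa')

texts = []
for i in range(len(ocr['text'])):
    # conf == -1 marks page/block/paragraph/line entries, not words
    if float(ocr['conf'][i]) != -1:
        x, y, w, h = ocr['left'][i], ocr['top'][i], ocr['width'][i], ocr['height'][i]
        # Draw a blue box (BGR) around each recognized word
        cv2.rectangle(img_copy, (x, y), (x + w, y + h), (255, 0, 0), 2)
        texts.append(ocr['text'][i])

cv2.imwrite("boxes.png", img_copy)  # placeholder output path

result = "\n".join(texts)
print("String:", result)
```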

Thank you