I am doing experiments on bert architecture and found out that most of the fine-tuning task takes the final hidden layer as text representation and later they pass it to other models for the further downstream task.

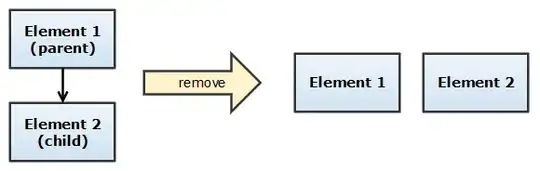

Bert's last layer looks like this :

Where we take the [CLS] token of each sentence :

I went through many discussion on this huggingface issue, datascience forum question, github issue Most of the data scientist gives this explanation :

BERT is bidirectional, the [CLS] is encoded including all representative information of all tokens through the multi-layer encoding procedure. The representation of [CLS] is individual in different sentences.

My question is, Why the author ignored the other information ( each token's vector ) and taking the average, max_pool or other methods to make use of all information rather than using [CLS] token for classification?

How does this [CLS] token help compare to the average of all token vectors?