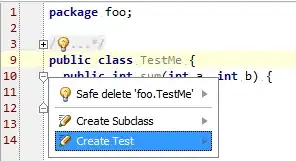

I have a dataframe where each column represents a geographic point and each row represents a minute of the day. The value of each cell is the flow of water at that point in cfs. Above is a graph of one of these time-flow series.

Basically, I need the maximum absolute flow at each of these locations during the day, which in this case would be that hump of 187 cfs. However, there are instabilities in the data, so `DF.abs().max()` returns 1197 cfs. I need to somehow exclude the outliers from the calculation. As you can see, there is no pattern to the outliers, but judging from the graph, no two consecutive points in time should differ in flow by more than some x%. I should mention that there are 15K of these points, so the fastest solution is best.
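To make this concrete, here is a minimal sketch on synthetic data of the kind of filtering I have in mind. It uses a centered rolling median to suppress isolated spikes before taking the maximum. The column names (`pt0`, `pt1`, `pt2`), the spike positions, the helper `robust_abs_max`, and the window size of 5 are all made up for illustration, and I am not sure this is the right or the fastest approach.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the real data: 1440 minutes, 3 hypothetical points.
minutes = np.arange(1440)
hump = 187 * np.exp(-((minutes - 700) / 120) ** 2)  # smooth peak of 187 cfs
df = pd.DataFrame({f"pt{i}": hump * (1 + 0.1 * i) for i in range(3)})

# Inject isolated one-minute instabilities (positions chosen arbitrarily).
df.iloc[300, 0] = 1197
df.iloc[900, 1] = -800


def robust_abs_max(frame: pd.DataFrame, window: int = 5) -> pd.Series:
    """Max absolute flow per column after a centered rolling median.

    The median over `window` samples ignores spikes that last fewer
    than roughly half the window, at the cost of slightly flattening
    sharp genuine peaks. `window` is an assumed tuning parameter.
    """
    smoothed = frame.rolling(window, center=True, min_periods=1).median()
    return smoothed.abs().max()


raw = df.abs().max()         # dominated by the injected spikes
robust = robust_abs_max(df)  # close to the true hump heights

print(raw)
print(robust)
```

On this toy data the naive maximum for `pt0` is the injected 1197, while the filtered maximum stays close to the 187 cfs hump. The rolling call is vectorized across all columns, so I would expect it to scale to 15K columns, but I have not benchmarked it.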

Does anyone know how I can accomplish this in Python, or at least know the statistical term for what I want to do? Thanks!