Anaconda/conda as package management tool:

This assumes that you have Anaconda/conda installed on your machine. If not, follow the Windows installation guide first: https://docs.anaconda.com/anaconda/install/windows/

conda create --name tensorflow_optimized python=3.7

conda activate tensorflow_optimized

# you need Intel's TensorFlow build, which is optimized to use SSE4.1 SSE4.2 AVX AVX2 FMA

conda install tensorflow-mkl -c anaconda

# run this to trigger TensorFlow's startup log, which shows whether the installed build is using MKL,

# which in turn uses the optimized instructions that your CPU provides.

python -c "import tensorflow as tf; tf.test.is_gpu_available(cuda_only=False, min_cuda_compute_capability=None)"

# you should see something like this as the output.

2020-07-14 19:19:43.059486: I tensorflow/core/platform/cpu_feature_guard.cc:145] This TensorFlow binary is optimized with Intel(R) MKL-DNN to use the following CPU instructions in performance critical operations: SSE4.1 SSE4.2 AVX AVX2 FMA

To enable them in non-MKL-DNN operations, rebuild TensorFlow with the appropriate compiler flags.
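
If you would rather check that message programmatically than read it by eye, here is a minimal sketch. `parse_cpu_feature_log` is a hypothetical helper written for this answer, not part of TensorFlow, and it assumes the log line has the same wording as the sample above (the wording may differ between TensorFlow versions).

```python
import re


# Hypothetical helper (not a TensorFlow API): inspects one captured log line.
def parse_cpu_feature_log(line):
    """Return (mentions_mkl_dnn, instructions) for a cpu_feature_guard log line."""
    mentions_mkl_dnn = "MKL-DNN" in line
    # The instruction names follow the phrase "performance critical operations:".
    match = re.search(r"performance critical operations:\s*(.*)$", line)
    instructions = match.group(1).split() if match else []
    return mentions_mkl_dnn, instructions


# Sample line copied from the output shown above.
sample = (
    "2020-07-14 19:19:43.059486: I tensorflow/core/platform/cpu_feature_guard.cc:145] "
    "This TensorFlow binary is optimized with Intel(R) MKL-DNN to use the following "
    "CPU instructions in performance critical operations: SSE4.1 SSE4.2 AVX AVX2 FMA"
)
uses_mkl, instructions = parse_cpu_feature_log(sample)
print(uses_mkl, instructions)
```

If the first value is False for your own log line, the installed build is probably the stock TensorFlow package rather than the MKL one.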

pip3 as package management tool:

py -m venv tensorflow_optimized

.\tensorflow_optimized\Scripts\activate

# once the env is activated, you need Intel's TensorFlow build,

# which is optimized to use SSE4.1 SSE4.2 AVX AVX2 FMA

pip install intel-tensorflow

# run this to trigger TensorFlow's startup log, which shows whether the installed build is using MKL,

# which in turn uses the optimized instructions that your CPU provides.

py -c "import tensorflow as tf; tf.test.is_gpu_available(cuda_only=False, min_cuda_compute_capability=None)"

# you should see something like this as the output.

2020-07-14 19:19:43.059486: I tensorflow/core/platform/cpu_feature_guard.cc:145] This TensorFlow binary is optimized with Intel(R) MKL-DNN to use the following CPU instructions in performance critical operations: SSE4.1 SSE4.2 AVX AVX2 FMA

To enable them in non-MKL-DNN operations, rebuild TensorFlow with the appropriate compiler flags.
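
The message scrolls by quickly among other startup logs, so it can help to capture it from a script. The sketch below is only an illustration: `startup_log_lines` is a hypothetical helper, and it assumes TensorFlow writes this log to stderr (its default) and that the line contains the text `cpu_feature_guard`, as in the sample above.

```python
import subprocess
import sys


# Hypothetical helper: runs a one-liner in a child interpreter and filters its stderr.
def startup_log_lines(code, marker="cpu_feature_guard", python_exe=sys.executable):
    """Run `code` with `python_exe -c` and return the stderr lines containing `marker`."""
    result = subprocess.run(
        [python_exe, "-c", code],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,  # decode output as text
    )
    return [line for line in result.stderr.splitlines() if marker in line]
```

With the env activated, calling `startup_log_lines("import tensorflow as tf; tf.test.is_gpu_available(cuda_only=False, min_cuda_compute_capability=None)")` should return the line shown above. An empty list suggests the message was not printed.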

Once you have this, you can use this env in PyCharm.

Before that, with the env activated, run

where python on Windows, or which python on Linux and Mac. This gives you the path of the env's interpreter. Then, in PyCharm,

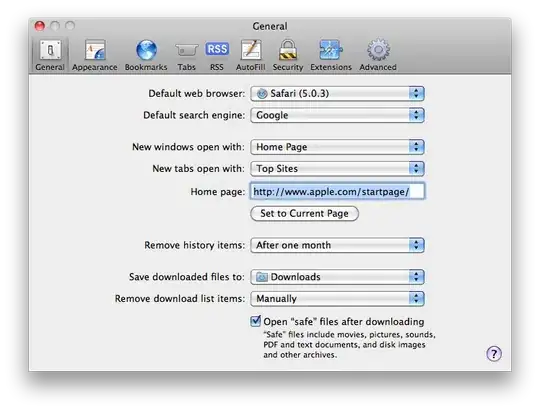

Go to Preferences (Settings on Windows and Linux) -> Project: your project name -> Project Interpreter -> click on the settings symbol -> click on Add.

Select System Interpreter -> click on ... -> this opens a popup window that asks for the location of the Python interpreter.

In the location path, paste the path printed by where python -> click OK.

Now you should see all the packages installed in that env.
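
To double-check that PyCharm is really running the env's interpreter, you can run a small script from inside the project and compare its output with the path printed by where python. This is a minimal sketch using only the standard library. `interpreter_info` is a name made up for this example.

```python
import sys


# Hypothetical helper: reports which interpreter is executing this script.
def interpreter_info():
    """Return details of the running interpreter for comparison with `where python`."""
    # Inside a venv, sys.prefix points at the env and sys.base_prefix at the base
    # installation. In a conda env the two are normally equal, so in_venv is False there.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return {
        "executable": sys.executable,
        "prefix": sys.prefix,
        "in_venv": sys.prefix != base_prefix,
    }


info = interpreter_info()
for key, value in info.items():
    print(key, "=", value)
```

The `executable` value should match the path you pasted into PyCharm. If it does not, the project is still using a different interpreter.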

Next time, if you want to select that interpreter for a project, click on the lower right corner where it says python3/python2 (your interpreter name) and select the one you need.

I'd suggest installing Anaconda as your default package manager, as it makes Python development on a Windows machine easier, but you can make do with pip as well.