Hierarchy in RealityKit / Reality Composer

I think this is more of a "theoretical" question than a practical one. First, I should say that editing the Experience file that contains scenes with anchors and entities isn't a good idea.

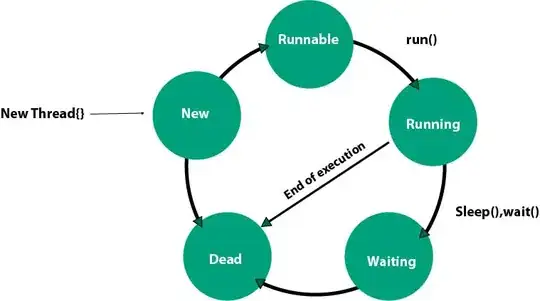

In RealityKit and Reality Composer there's a well-defined hierarchy when you create a single object in the default scene:

    Scene -> AnchorEntity -> ModelEntity
                                  |
                               Physics
                                  |
                              Animation
                                  |
                                Audio
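
For illustration, the same chain can also be assembled in code rather than in Reality Composer. This is only a minimal sketch, not what Reality Composer generates: the box mesh, the gray material, the entity names and the `printHierarchy` helper are all assumptions made up for this example.

```swift
import RealityKit

// Anchor that waits for a horizontal plane (bounds chosen arbitrarily).
let anchor = AnchorEntity(.plane(.horizontal, classification: .any, minimumBounds: [0.2, 0.2]))
anchor.name = "anchor"

// A placeholder model; Reality Composer would supply its own mesh and materials.
let model = ModelEntity(mesh: .generateBox(size: 0.1),
                        materials: [SimpleMaterial(color: .gray, isMetallic: false)])
model.name = "box"
anchor.addChild(model)

// Hypothetical helper: print an entity and its descendants, indented by depth.
func printHierarchy(_ entity: Entity, depth: Int = 0) {
    let indent = String(repeating: "  ", count: depth)
    let label = entity.name.isEmpty ? "<unnamed>" : entity.name
    print("\(indent)\(label) [\(type(of: entity))]")
    for child in entity.children {
        printHierarchy(child, depth: depth + 1)
    }
}

printHierarchy(anchor)

// In an app, the anchor then becomes a child of the scene:
// arView.scene.anchors.append(anchor)
```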

If you place two 3D models in a scene, they share the same anchor:

    Scene -> AnchorEntity - - - -> - - - - - - - - ->
                                 |                  |
                           ModelEntity01      ModelEntity02
                                 |                  |
                              Physics            Physics
                                 |                  |
                             Animation          Animation
                                 |                  |
                               Audio              Audio

An AnchorEntity in RealityKit defines which features of the World Tracking configuration run in the current ARSession: horizontal and/or vertical plane detection, image detection, body detection, and so on.

Let's look at those parameters:

    AnchorEntity(.plane(.horizontal, classification: .floor, minimumBounds: [1, 1]))
    AnchorEntity(.plane(.vertical, classification: .wall, minimumBounds: [0.5, 0.5]))
    AnchorEntity(.image(group: "Group", name: "model"))
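
To see which target an anchor was created with, you can switch over its `anchoring.target`. The sketch below is only an illustration: `describeTarget(of:)` is a hypothetical helper, it handles just the plane and image cases used above, and everything else falls into `default`.

```swift
import RealityKit

// Hypothetical helper: describe what an AnchorEntity is set up to detect.
func describeTarget(of anchor: AnchorEntity) -> String {
    switch anchor.anchoring.target {
    case .plane(let alignment, _, let minimumBounds):
        let kind: String
        if alignment.contains(.horizontal) && alignment.contains(.vertical) {
            kind = "horizontal or vertical"
        } else if alignment.contains(.horizontal) {
            kind = "horizontal"
        } else {
            kind = "vertical"
        }
        return "\(kind) plane, at least \(minimumBounds.x) x \(minimumBounds.y) m"
    case .image(let group, let name):
        return "image '\(name)' from group '\(group)'"
    default:
        // World, camera, object, face, body and other targets are not covered here.
        return "another target type"
    }
}

let floor = AnchorEntity(.plane(.horizontal, classification: .floor, minimumBounds: [1, 1]))
let picture = AnchorEntity(.image(group: "Group", name: "model"))
print(describeTarget(of: floor))
print(describeTarget(of: picture))
```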

Here you can read about the Entity-Component-System paradigm.

Combining two scenes coming from Reality Composer

For this post I've prepared two scenes in Reality Composer: the first scene (ConeAndBox) uses horizontal plane detection and the second scene (Sphere) uses vertical plane detection. If you combine these scenes in RealityKit into one bigger scene, you'll get both types of plane detection, horizontal and vertical.

Two models, a cone and a box, are pinned to one anchor in this scene.

In RealityKit I can combine these scenes into one scene.

    // Plane detection with a horizontal anchor
    let coneAndBoxAnchor = try! Experience.loadConeAndBox()
    coneAndBoxAnchor.children[0].anchor?.scale = [7, 7, 7]
    coneAndBoxAnchor.goldenCone!.position.y = -0.1  //.children[0].children[0].children[0]
    arView.scene.anchors.append(coneAndBoxAnchor)

    // Name each level of the hierarchy so it shows up in the printed output
    coneAndBoxAnchor.name = "mySCENE"
    coneAndBoxAnchor.children[0].name = "myANCHOR"
    coneAndBoxAnchor.children[0].children[0].name = "myENTITIES"
    print(coneAndBoxAnchor)

    // Plane detection with a vertical anchor
    let sphereAnchor = try! Experience.loadSphere()
    sphereAnchor.steelSphere!.scale = [7, 7, 7]
    arView.scene.anchors.append(sphereAnchor)
    print(sphereAnchor)

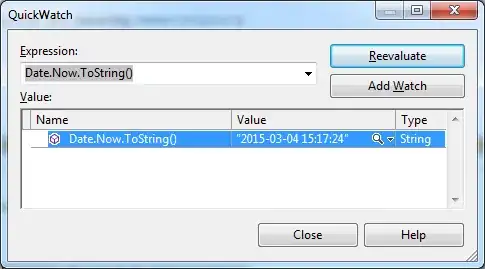

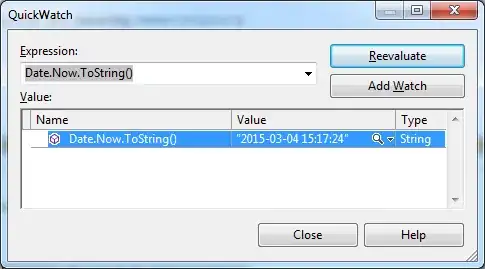

In Xcode's console you can see the ConeAndBox scene hierarchy with the names assigned in RealityKit:

And you can see the Sphere scene hierarchy, with no names assigned:

It's important to note that our combined scene now holds both Reality Composer scenes in its anchors array. Use the following command to print this array:

print(arView.scene.anchors)

It prints:

[ 'mySCENE' : ConeAndBox, '' : Sphere ]
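
If you'd rather inspect the array programmatically than read the printed dump, something along these lines could work. It's a sketch under assumptions: `summarize(_:)` is a hypothetical helper, and plain `AnchorEntity` objects stand in for the two loaded scenes so the snippet doesn't depend on the generated `Experience` code.

```swift
import RealityKit

// Hypothetical helper: one summary line per root entity.
func summarize(_ roots: [Entity]) -> [String] {
    roots.map { root in
        let name = root.name.isEmpty ? "<unnamed>" : root.name
        return "\(name): \(root.children.count) direct child(ren)"
    }
}

// Stand-ins for the two loaded Reality Composer scenes.
let first = AnchorEntity()
first.name = "mySCENE"
let child = Entity()
child.name = "myANCHOR"
first.addChild(child)
let second = AnchorEntity()

summarize([first, second]).forEach { print($0) }

// Named entities can be looked up instead of chaining children[0]:
let found = first.findEntity(named: "myANCHOR")
print(found === child)

// In the app: summarize(arView.scene.anchors.map { $0 as Entity })
```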

You can reassign the type of tracking via AnchoringComponent (for example, assign image detection instead of plane detection):

    coneAndBoxAnchor.children[0].anchor!.anchoring = AnchoringComponent(.image(group: "AR Resources",
                                                                                name: "planets"))
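
The force-unwrap above crashes if the entity has no anchoring ancestor. A more defensive variant might look like the sketch below; `retarget(_:to:)` is a hypothetical helper of mine, not a RealityKit API, and it only wraps the same `anchor` / `anchoring` properties used above.

```swift
import RealityKit

// Hypothetical helper: swap the anchoring target of the anchor that `entity`
// belongs to. Returns false (and changes nothing) if there is no such anchor.
@discardableResult
func retarget(_ entity: Entity, to target: AnchoringComponent.Target) -> Bool {
    guard let anchored = entity.anchor else { return false }
    anchored.anchoring = AnchoringComponent(target)
    return true
}

// Stand-in hierarchy: a plane anchor with one child entity.
let planeAnchor = AnchorEntity(.plane(.horizontal, classification: .any, minimumBounds: [0.2, 0.2]))
let content = Entity()
planeAnchor.addChild(content)

let changed = retarget(content, to: .image(group: "AR Resources", name: "planets"))
print(changed ? "anchoring target replaced" : "no anchor found")
```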

Retrieving entities and connecting them to new AnchorEntity

To decompose and reassemble the hierarchical structure of your scene, you need to retrieve all the entities and pin them to a single anchor. Keep in mind that tracking one anchor is a less intensive task than tracking several. And one anchor is much more stable, in terms of the relative positions of the scene's models, than, for instance, 20 anchors.

    let coneEntity = coneAndBoxAnchor.goldenCone!
    coneEntity.position.x = -0.2

    let boxEntity = coneAndBoxAnchor.plasticBox!
    boxEntity.position.x = 0.01

    let sphereEntity = sphereAnchor.steelSphere!
    sphereEntity.position.x = 0.2

    // A single image anchor that will hold all three models
    let anchor = AnchorEntity(.image(group: "AR Resources", name: "planets"))
    anchor.addChild(coneEntity)
    anchor.addChild(boxEntity)
    anchor.addChild(sphereEntity)
    arView.scene.anchors.append(anchor)
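
One detail worth knowing here: an entity has only one parent, so `addChild` moves it out of its previous anchor rather than copying it. The sketch below shows that with stand-in objects; the world anchors, their positions and the entity name are assumptions for illustration only.

```swift
import RealityKit

// Stand-ins for an original Reality Composer anchor and one of its models.
let oldAnchor = AnchorEntity(world: SIMD3<Float>(0, 0, -1))
let cone = Entity()
cone.name = "cone"
oldAnchor.addChild(cone)

// Re-parenting: the cone leaves oldAnchor and joins newAnchor.
let newAnchor = AnchorEntity(world: SIMD3<Float>(0, 0, 0))
newAnchor.addChild(cone)
// addChild(_:preservingWorldTransform:) can be passed `true` instead
// if the model should keep its world-space placement.

print("old anchor children:", oldAnchor.children.count)
print("cone is under new anchor:", cone.parent === newAnchor)
```

If you need the model under both anchors, clone it first (for example with `clone(recursive:)`) and add the copy.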

Useful links

Now you have a deeper understanding of how to construct scenes and retrieve entities from them. If you need other examples, look at THIS POST and THIS POST.

P.S.

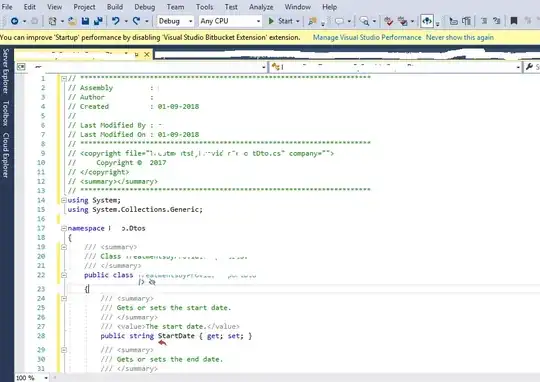

Additional code showing how to load scenes from ExperienceX.rcproject:

    import ARKit
    import RealityKit

    class ViewController: UIViewController {

        @IBOutlet var arView: ARView!

        override func viewDidLoad() {
            super.viewDidLoad()

            // RC generated "loadGround()" method automatically
            let groundArrowAnchor = try! ExperienceX.loadGround()
            groundArrowAnchor.arrowFloor!.scale = [2, 2, 2]
            arView.scene.anchors.append(groundArrowAnchor)
            print(groundArrowAnchor)
        }
    }
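
The `try!` above crashes if the scene fails to load. If you prefer to handle that case, a small wrapper like the following could be used. It's a sketch: `loadAnchor(_:using:)` is a hypothetical helper, and the usage comment assumes the same generated `ExperienceX.loadGround()` method as above.

```swift
import RealityKit

// Hypothetical helper: run a throwing scene loader and report failures
// instead of crashing. `label` is only used in the log message.
func loadAnchor<T: HasAnchoring>(_ label: String, using loader: () throws -> T) -> T? {
    do {
        return try loader()
    } catch {
        print("Could not load scene '\(label)': \(error)")
        return nil
    }
}

// Usage inside viewDidLoad(), assuming the generated ExperienceX code:
// if let ground = loadAnchor("Ground", using: ExperienceX.loadGround) {
//     ground.arrowFloor?.scale = [2, 2, 2]
//     arView.scene.anchors.append(ground)
// }
```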