I am running a cross-validated binary elastic net regression (`family = "binomial"`) on 620 000 observations and 21 variables using `cv.glmnet` in R. The data look like this:

```
# A tibble: 62,905 x 13
     V1 V2       V3    V4    V5    V6    V7    V8    V9   V10   V11   V12 V13
  <dbl> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <fct>
1 -37.8 0     165.   269.  21.9 0.607  84.0   0    65.1  290. 4.36    8   0
2 -68.1 0     303.   168.  44.5 1.41   89.9   0    46.6  296. 0.692  34.7 0
3 -54.3 0     332.   168.  44.5 1.41   89.9   0    46.6  296. 0.692  35.8 1
4 -108. 0     338.   168.  44.5 1.41   89.9   0    46.6  296. 0.692  30.3 0
5 -60.3 0     374.   171.  35.7 2.30   88.9   0.3  51.7  295. 4.01   29.6 1
6 -82.8 0      48.2  133.  18.4 0.210  84.9   0    65.1  289. 1.35   18.7 0
7 -99.6 0     299.   219.  42.6 2.09   90.8   0    34.2  297. 1.42    7   1
8 -98.1 0     116.   153.  44.7 0.988  89.0   0    41.3  298. 0.235  32.6 0
```

Once `cv.glmnet` has finished, I use the model to predict y for the test set. The predicted values are about 10 times higher than my actual observations. Why are my predictions roughly 10 times too high?
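For context, here is a minimal worked sketch of the two scales a binomial model works on (standard logistic-regression notation, not taken from the post): the linear predictor $\hat\eta$ (log-odds), which is unbounded, and the probability $\hat p$, which always lies in $(0, 1)$.

$$
\hat\eta(x) = \hat\beta_0 + x^\top \hat\beta, \qquad
\hat p(x) = \Pr(y = 1 \mid x) = \frac{1}{1 + e^{-\hat\eta(x)}}
$$

$$
\hat\eta = 0 \Rightarrow \hat p = 0.5, \qquad
\hat\eta = 2 \Rightarrow \hat p \approx 0.881, \qquad
\hat\eta = -4 \Rightarrow \hat p \approx 0.018
$$

Values on the $\hat\eta$ scale therefore cannot be compared directly with 0/1 outcomes.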

Here is my code:

```r
set.seed(123)

library(caret)
library(tidyverse)
library(glmnet)
library(ROCR)

# 80/20 train/test split (createDataPartition stratifies on the vector it is given)
training.samples <- data$V1 %>% createDataPartition(p = 0.8, list = FALSE)
train <- data[training.samples, ]
test  <- data[-training.samples, ]

# Predictor matrices: all columns except V1
x.train <- data.frame(train[, names(train) != "V1"])
x.train <- data.matrix(x.train)
y.train <- train$fire

x.test <- data.frame(test[, names(test) != "V1"])
x.test <- data.matrix(x.test)
y.test <- test$fire

# `i` comes from an enclosing loop over alpha values (not shown)
model <- cv.glmnet(x.train, y.train, type.measure = "auc", alpha = i / 10,
                   family = "binomial", parallel = TRUE)

predicted1 <- predict(model, s = "lambda.min", newx = x.test)
```
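The code above calls `cv.glmnet` with `alpha = i / 10` inside a loop and with `parallel = TRUE`. A minimal sketch of such a loop follows, assuming simulated data and the doParallel package as the foreach backend (neither is from the original post). `cv.glmnet` only runs its folds in parallel when a foreach backend is registered, and passing the same `foldid` to every fit keeps the cross-validated AUC comparable across alpha values.

```r
library(glmnet)
library(doParallel)

# Hypothetical simulated data standing in for the real x.train / y.train
set.seed(123)
n <- 500
p <- 12
x.train <- matrix(rnorm(n * p), n, p)
y.train <- rbinom(n, 1, plogis(-2 + x.train[, 1] - x.train[, 2]))

# parallel = TRUE needs a registered foreach backend; otherwise it runs sequentially
cl <- makeCluster(2)
registerDoParallel(cl)

# One fixed fold assignment shared by every alpha value
foldid <- sample(rep(1:10, length.out = n))

alphas <- (0:10) / 10
fits <- lapply(alphas, function(a) {
  cv.glmnet(x.train, y.train, family = "binomial", type.measure = "auc",
            alpha = a, foldid = foldid, parallel = TRUE)
})

stopCluster(cl)

# For type.measure = "auc", cvm holds the CV AUC and lambda.min maximizes it
best_auc <- sapply(fits, function(f) max(f$cvm))
best_alpha <- alphas[which.max(best_auc)]
print(best_alpha)
```

Choosing alpha this way still leaves `predict()` on its default scale, so the output type question is separate from the alpha search.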

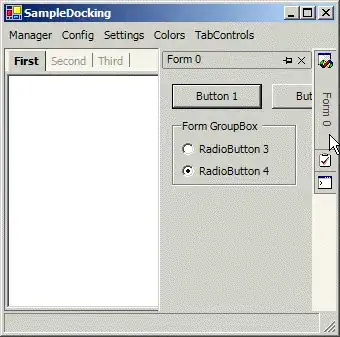

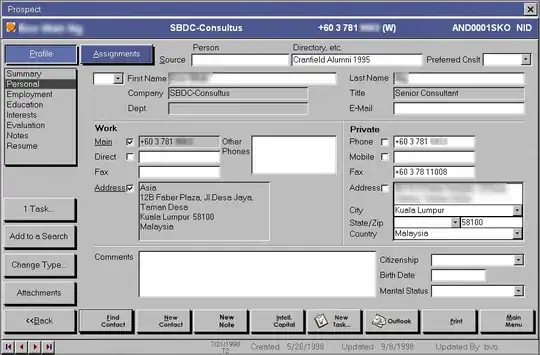

```r
View(predicted1)
```

Above are two histograms of my `y.test` outcome. Side note: `y.test` contains about 100 ones, but many more zeros.

What is going wrong in the cross-validation?
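On the cross-validation question: AUC depends only on how the predictions rank the observations, and the logistic function is strictly increasing, so AUC is the same whether it is computed on log-odds or on probabilities. That suggests the cross-validation itself is not what produces the oversized predictions. A minimal sketch, assuming simulated imbalanced data (a stand-in, not the poster's data) and using ROCR, which the original code already loads:

```r
library(ROCR)

# Hypothetical imbalanced data: positives are a small minority
set.seed(42)
n <- 1000
x <- rnorm(n)
y <- rbinom(n, 1, plogis(-2.5 + 1.5 * x))

# Stand-ins for predict(..., type = "link") and predict(..., type = "response")
link <- -2.5 + 1.5 * x
prob <- plogis(link)

# AUC via ROCR: build a prediction object, then ask for the "auc" measure
auc <- function(score, label) {
  performance(prediction(score, label), measure = "auc")@y.values[[1]]
}

auc_link <- auc(link, y)
auc_prob <- auc(prob, y)
print(c(link = auc_link, response = auc_prob))
```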

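EDIT: Following @smiling4ever's comment, I solved it by adding `type = "response"`. By default, `predict()` on a binomial glmnet model returns the linear predictor (log-odds, `type = "link"`), not probabilities:

```r
predicted1 <- predict(model, s = "lambda.min", newx = x.test, type = "response")
```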
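A minimal sketch comparing the `type` options of `predict()` for a binomial `cv.glmnet` fit, on simulated data standing in for the real `x.train`, `y.train` and `x.test` (hypothetical; the real data are not available). It checks that `type = "response"` equals `plogis()` applied to the default link output, and that `type = "class"` labels an observation `"1"` exactly when its log-odds are positive (probability above 0.5).

```r
library(glmnet)

# Hypothetical simulated data in place of the real training/test sets
set.seed(123)
n <- 600
p <- 12
x <- matrix(rnorm(n * p), n, p)
y <- rbinom(n, 1, plogis(-2 + x[, 1] - x[, 2]))
train <- 1:500
x.train <- x[train, ]
y.train <- y[train]
x.test <- x[-train, ]

model <- cv.glmnet(x.train, y.train, family = "binomial",
                   type.measure = "auc", alpha = 0.5)

# Default type = "link": unbounded log-odds
eta <- predict(model, s = "lambda.min", newx = x.test)
# type = "response": probabilities P(y = 1 | x) in (0, 1)
prob <- predict(model, s = "lambda.min", newx = x.test, type = "response")
# type = "class": labels "0"/"1" from thresholding the log-odds at 0
cls <- predict(model, s = "lambda.min", newx = x.test, type = "class")

print(summary(as.vector(eta)))
print(summary(as.vector(prob)))
```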