I'm trying to make my water texture translucent, so I enabled blending with the following settings:

```cpp
void Application::initialiseOpenGL() {
    printf("Initialising OpenGL context\n");

    context = SDL_GL_CreateContext(window);
    assertFatal(context != NULL, "%s\n", SDL_GetError());

    SDL_GL_SetSwapInterval(0);

    glClearColor(0.0, 0.0, 0.0, 1.0);

    glEnable(GL_BLEND);
    glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

    glEnable(GL_DEPTH_TEST);

    // glEnable(GL_CULL_FACE);
    // glCullFace(GL_BACK);
}
```
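To make the symptom easier to reason about, here is a toy CPU-side model of a single pixel under the state configured above: `GL_SRC_ALPHA` / `GL_ONE_MINUS_SRC_ALPHA` blending plus a depth test. It assumes the default `GL_LESS` depth function, a cleared depth of 1.0 and depth writes left on (`glDepthMask(GL_TRUE)` is the default). `Rgb`, `Pixel` and `drawFragment` are hypothetical names for illustration; this is a sketch of the per-fragment arithmetic, not how a driver implements it.

```cpp
#include <cassert>

// Hypothetical CPU model of one framebuffer pixel, assuming the state set in
// initialiseOpenGL(): GL_BLEND with glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA),
// GL_DEPTH_TEST with the default GL_LESS comparison, and depth writes enabled.
struct Rgb { float r, g, b; };

struct Pixel {
    Rgb color{0.0f, 0.0f, 0.0f};  // glClearColor(0.0, 0.0, 0.0, 1.0)
    float depth = 1.0f;           // default glClearDepth value
};

// Blend one incoming fragment into the pixel, mimicking the fixed-function stages.
void drawFragment(Pixel& px, Rgb src, float srcAlpha, float depth) {
    assert(srcAlpha >= 0.0f && srcAlpha <= 1.0f);
    if (!(depth < px.depth)) return;       // GL_LESS: fragment is rejected
    const float a = srcAlpha;              // GL_SRC_ALPHA
    const float oneMinusA = 1.0f - a;      // GL_ONE_MINUS_SRC_ALPHA
    px.color = { src.r * a + px.color.r * oneMinusA,
                 src.g * a + px.color.g * oneMinusA,
                 src.b * a + px.color.b * oneMinusA };
    px.depth = depth;                      // depth is written even for translucent fragments
}
```

In this model, drawing an opaque green fragment at depth 0.6 and then a half-transparent blue one at depth 0.3 leaves (0, 0.5, 0.5). Drawing the same two fragments in the reverse order leaves (0, 0, 0.5): the water is blended only against the black clear color, and the terrain behind it is then rejected because the water already wrote a nearer depth. Under these assumptions, the final image depends on the order in which translucent and opaque quads are submitted relative to the camera.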

My water looks fine from one side:

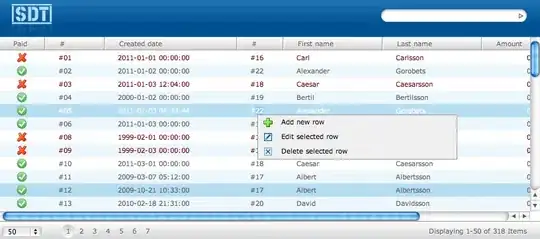

However, from the other side, it seems to overwrite already rendered quads:

This is what it looks like underwater:

What could be the issue here?

These are my vertex and fragment shaders:

```glsl
#version 300 es

layout(location = 0) in vec3 position;
layout(location = 1) in float vertexFaceIndex;
layout(location = 2) in vec2 vertexUV;

out float fragmentFaceIndex;
out vec2 fragmentUV;
out float visibility;

uniform mat4 view;
uniform mat4 viewProjection;
uniform float currentTime;

const float fogDensity = 0.005;
const float fogGradient = 5.0;

void main() {
    fragmentFaceIndex = vertexFaceIndex;
    fragmentUV = vertexUV;

    float distance = length(vec3(view * vec4(position, 1.0)));
    visibility = exp(-1.0f * pow(distance * fogDensity, fogGradient));
    visibility = clamp(visibility, 0.0, 1.0);

    if(vertexFaceIndex == 6.0f) {
        float yVal = position.y - 0.4 +
            min(0.12 * sin(position.x + currentTime / 1.8f) + 0.12 * sin(position.z + currentTime / 1.3f), 0.12);
        gl_Position = viewProjection * vec4(vec3(position.x, yVal, position.z), 1.0);
    }
    else
        gl_Position = viewProjection * vec4(position, 1.0);
}
```
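The two numeric expressions in the vertex shader (the fog `visibility` and the water height for `vertexFaceIndex == 6`) can be checked on the CPU. The sketch below is a straight C++ port of those lines; `fogVisibility` and `waterHeight` are hypothetical helper names, and the constants are copied from the shader.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Constants copied from the vertex shader.
constexpr float fogDensity = 0.005f;
constexpr float fogGradient = 5.0f;

// Port of: visibility = clamp(exp(-1.0 * pow(distance * fogDensity, fogGradient)), 0.0, 1.0)
// where distance is the view-space distance of the vertex.
float fogVisibility(float distance) {
    assert(distance >= 0.0f);  // length() in the shader is never negative
    float v = std::exp(-1.0f * std::pow(distance * fogDensity, fogGradient));
    return std::max(0.0f, std::min(v, 1.0f));
}

// Port of the water branch: the base height is lowered by 0.4, then two sine
// waves are added, with their sum capped at +0.12 by min(). The resulting
// height therefore stays within [y - 0.64, y - 0.28].
float waterHeight(float x, float y, float z, float currentTime) {
    float wave = 0.12f * std::sin(x + currentTime / 1.8f) +
                 0.12f * std::sin(z + currentTime / 1.3f);
    return y - 0.4f + std::min(wave, 0.12f);
}
```

For example, `fogVisibility(200.0f)` gives roughly `exp(-1)`, about 0.368, because `200 * 0.005` is 1. With a gradient of 5 the fog stays close to 1 up to about 100 units and then falls off quickly.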

```glsl
#version 300 es

precision mediump float;

uniform sampler2D atlas;

out vec4 color;

in float fragmentFaceIndex;
in vec2 fragmentUV;
in float visibility;

const float ambientStrength = 1.1f;
const float diffuseStrength = 0.3f;
const vec3 lightDirection = vec3(0.2f, -1.0f, 0.2f);
const vec4 skyColor = vec4(0.612, 0.753, 0.98, 1.0);
const vec3 lightColor = vec3(1.0f, 0.996f, 0.937f);
const vec3 ambientColor = lightColor * ambientStrength;

// Face normals have been manually verified...
const vec3 faceNormals[7] = vec3[7](
    vec3(0.0f, 0.0f, 1.0f),
    vec3(0.0f, 0.0f, -1.0f),
    vec3(0.0f, 1.0f, 0.0f),
    vec3(0.0f, -1.0f, 0.0f),
    vec3(-1.0f, 0.0f, 0.0f),
    vec3(1.0f, 0.0f, 0.0f),
    vec3(0.0f, 1.0f, 0.0f)
);

void main() {
    vec4 textureFragment = texture(atlas, fragmentUV).rgba;
    if(textureFragment.a < 0.5) discard;

    float diffuseFactor = max(dot(faceNormals[int(fragmentFaceIndex)], normalize(-1.0f * lightDirection)), 0.0) * diffuseStrength;
    vec3 diffuseColor = diffuseFactor * lightColor;

    color = vec4((ambientColor + diffuseColor) * textureFragment.rgb, textureFragment.a);
    color = mix(skyColor, color, visibility);
}
```
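The fragment shader's lighting and final alpha can be ported the same way. Two details of the shader as written are worth keeping in mind: fragments whose texture alpha is below 0.5 are discarded, so only alpha values in [0.5, 1] ever reach blending; and because `mix(skyColor, color, visibility)` also mixes the alpha channel with `skyColor.a = 1.0`, fogged water tends toward fully opaque. The sketch below is a hypothetical C++ port (`Vec3`, `diffuseFactor`, `outputAlpha` are illustrative names, not part of the original code).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Minimal vector type standing in for GLSL's vec3.
struct Vec3 { float x, y, z; };

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Constants copied from the fragment shader.
constexpr float diffuseStrength = 0.3f;
constexpr Vec3 lightDirection{0.2f, -1.0f, 0.2f};

// Port of: max(dot(normal, normalize(-1.0 * lightDirection)), 0.0) * diffuseStrength
float diffuseFactor(Vec3 faceNormal) {
    Vec3 toLight = normalize({-lightDirection.x, -lightDirection.y, -lightDirection.z});
    return std::max(dot(faceNormal, toLight), 0.0f) * diffuseStrength;
}

// Alpha channel of mix(skyColor, color, visibility), i.e.
// skyColor.a * (1 - visibility) + textureAlpha * visibility, with skyColor.a = 1.0.
float outputAlpha(float textureAlpha, float visibility) {
    const float skyAlpha = 1.0f;
    return skyAlpha * (1.0f - visibility) + textureAlpha * visibility;
}
```

For the top face (and the water face, index 6, which uses the same normal), `diffuseFactor({0, 1, 0})` works out to `0.3 / sqrt(1.08)`, about 0.289. Downward-facing normals get 0. `outputAlpha(a, 1.0f)` returns the texture alpha unchanged, while `outputAlpha(a, 0.0f)` returns 1.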