I want to extract the text content of this PDF: https://www.welivesecurity.com/wp-content/uploads/2019/07/ESET_Okrum_and_Ketrican.pdf

Here is my code:

    import os
    import re
    from io import StringIO

    from pdfminer.converter import TextConverter
    from pdfminer.pdfinterp import PDFResourceManager, PDFPageInterpreter
    from pdfminer.pdfpage import PDFPage


    def get_pdf_text(path):
        rsrcmgr = PDFResourceManager()
        with StringIO() as outfp, open(path, 'rb') as fp:
            device = TextConverter(rsrcmgr, outfp)
            interpreter = PDFPageInterpreter(rsrcmgr, device)
            for page in PDFPage.get_pages(fp, check_extractable=True):
                interpreter.process_page(page)
            device.close()
            # Collapse every run of whitespace into a single space
            text = re.sub('\\s+', ' ', outfp.getvalue())
        return text


    if __name__ == '__main__':
        path = './ESET_Okrum_and_Ketrican.pdf'
        print(get_pdf_text(path))

But in the extracted text, some period characters are missing:

is a threat group believed to be operating out of China Its attacks were first reported in 2012, when the group used a remote access trojan (RAT) known as Mirage to attack high-profile targets around the world However, the group’s activities were traced back to at least 2010 in FireEye’s 2013 report on operation Ke3chang – a cyberespionage campaign directed at diplomatic organizations and missions in Europe The attackers resurfaced

It really annoys me, because I'm doing natural language processing on the extracted text, and without the periods the whole document is treated as one big sentence.

I strongly suspect that the cause is bad data in the PDF's /ToUnicode map, because I had the same problem with PDF.js. I have read this answer, which says that whenever the /ToUnicode map of a PDF is bad, there is no way to extract its text correctly without doing OCR.
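One way to check this suspicion would be to dump each font's /ToUnicode CMap and look at what the character codes map to. The following is only a minimal sketch, assuming pdfminer.six is installed. `parse_bfchar` and `dump_tounicode` are hypothetical helper names, not part of PDFMiner, and the parser only handles simple `bfchar` entries (it ignores `bfrange`), so a font could still have mappings that this does not show.

```python
import re


def parse_bfchar(cmap_text):
    """Return {source_code_hex: unicode_string} for simple bfchar entries.

    Hypothetical helper: bfrange sections are deliberately ignored.
    """
    mapping = {}
    for block in re.findall(r'beginbfchar(.*?)endbfchar', cmap_text, re.S):
        for src, dst in re.findall(r'<([0-9A-Fa-f]+)>\s*<([0-9A-Fa-f]*)>', block):
            if len(dst) % 4:
                continue  # not whole UTF-16BE code units, skip it
            text = bytes.fromhex(dst).decode('utf-16-be', errors='replace')
            mapping[src.upper()] = text
    return mapping


def dump_tounicode(path):
    """Return {font_resource_name: mapping, or None if no /ToUnicode stream}.

    Fonts are keyed by resource name, so two pages reusing a name for
    different fonts would collide; acceptable for a quick diagnostic.
    """
    # Imported here so that parse_bfchar stays usable without pdfminer.
    from pdfminer.pdfpage import PDFPage
    from pdfminer.pdftypes import PDFStream, resolve1

    result = {}
    with open(path, 'rb') as fp:
        for page in PDFPage.get_pages(fp):
            fonts = resolve1((page.resources or {}).get('Font')) or {}
            for name, ref in fonts.items():
                if name in result:
                    continue
                spec = resolve1(ref)
                stream = resolve1(spec.get('ToUnicode'))
                if isinstance(stream, PDFStream):
                    data = stream.get_data().decode('latin-1')
                    result[name] = parse_bfchar(data)
                else:
                    result[name] = None
    return result
```

Calling `dump_tounicode('./ESET_Okrum_and_Ketrican.pdf')` and searching the returned mappings for `'.'` should show whether any font maps a code to the period at all, or maps it to something unexpected.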

But I have also been using pdf2htmlEX and PDFium (the PDF renderer of Chrome), and both of them extract every character correctly (at least for this PDF).

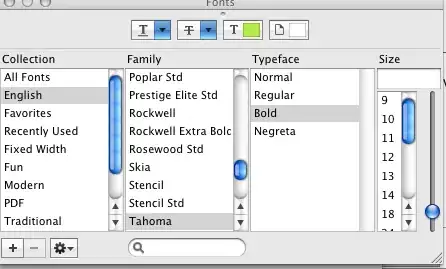

For instance, when I give this PDF to pdf2htmlEX, it detects that the /ToUnicode data is bad and it drops the font for a new one:

So my question is: is it possible for PDFMiner to do the same thing as pdf2htmlEX and PDFium, so that all the characters of a PDF are extracted correctly even when the /ToUnicode data is bad?

Thank you for your help.