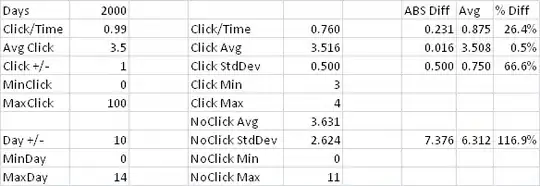

I am trying to build a model that recognises human emotions. My code runs and RAM usage is fine at the start:

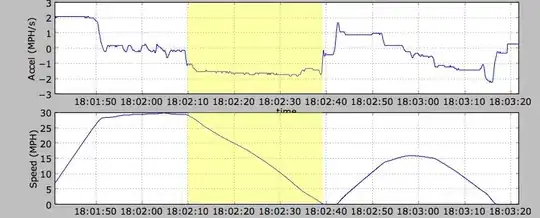

But when I try to normalise my images, RAM usage jumps drastically and then Colab crashes.

This is the code block that causes Colab to crash:

import os

import matplotlib.pyplot as plt

import cv2

data = []

for emot in os.listdir('./data/'):

for file_ in os.listdir(f'./data/{emot}'):

img = cv2.imread(f'./data/{emot}/{file_}', 0)

img = cv2.bitwise_not(img)

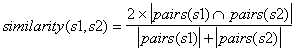

img /= 255.0 # <--- This is the line that causes colab to crash

data.append([img, emotions.index(emot)])

If I remove the `img /= 255.0` line, it doesn't crash, but then my images are not normalised.

I even tried normalising it in another block:

    for i in range(len(data)):
        data[i][0] = np.array(data[i][0]) / 255.0

But that doesn't work either: Colab still crashes.
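For context, here is a minimal sketch of what I suspect is happening with memory. It does not use my real data: the 48x48 image size, the batch of 100 images, and the helper name `normalise_batch` are all assumptions made up for illustration. It compares the size of a `uint8` image with the result of dividing it by `255.0`, and shows one possible way to keep the normalised images in a single preallocated `float32` array instead of a Python list of `float64` arrays.

```python
import numpy as np

# Hypothetical stand-in for one grayscale image as returned by cv2.imread(path, 0).
# The 48x48 size is an assumption, not taken from my dataset.
img = np.zeros((48, 48), dtype=np.uint8)

as_float64 = img / 255.0                     # NumPy promotes uint8 to float64 here
as_float32 = img.astype(np.float32) / 255.0  # result stays float32

# Bytes per image: uint8, float32, float64
print(img.nbytes, as_float32.nbytes, as_float64.nbytes)  # 2304 9216 18432


def normalise_batch(images):
    """Copy same-sized uint8 images into one float32 array scaled to [0, 1].

    Hypothetical helper: assumes every image has the same shape.
    """
    out = np.empty((len(images),) + images[0].shape, dtype=np.float32)
    for i, im in enumerate(images):
        out[i] = im   # cast uint8 -> float32 into the preallocated slot
    out /= 255.0      # in-place division, no second full-size copy
    return out


# Made-up batch of 100 all-white images, just to exercise the helper.
batch = normalise_batch([np.full((48, 48), 255, dtype=np.uint8) for _ in range(100)])
print(batch.dtype, batch.shape)
```

If this is right, the normalised data takes 8 times the memory of the raw `uint8` images with `float64`, and 4 times with `float32`, which might explain the jump.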