I am trying to understand tensorflow.keras.layers.SimpleRNN by building a simple digit classifier. The digits in the MNIST dataset are 28x28 images, so the main idea is to present one row of the image at each time step t. I have seen this idea in some blogs, for instance this one, which presents this image:

So my RNN is like this:

from tensorflow.keras import Sequential, layers

units=128

self.model = Sequential()

self.model.add(layers.SimpleRNN(units, input_shape=(28,28)))

self.model.add(layers.Dense(self.output_size, activation='softmax'))

I know that an RNN is defined by the following equations:

Parameters:

W={w_{hh},w_{xh}} and V={v}.

input vector: x_t.

Update equations:

h_t=f(w_{hh} h_{t-1}+w_{xh} x_t).

y = v h_t.
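To make the update equations concrete, here is a minimal pure-Python sketch of them applied to one 28x28 image, one row per time step. It is only an illustration under stated assumptions: f is taken to be tanh, h_0 is taken to be all zeros, the weights are random, and the names `rnn_forward` and `random_matrix` are hypothetical helpers, not part of Keras. The number of units is kept at 4 so the shapes are easy to inspect.

```python
import math
import random


def rnn_forward(sequence, w_xh, w_hh, v):
    """Apply h_t = tanh(w_hh h_{t-1} + w_xh x_t) over a sequence, then y = v h_T."""
    units = len(w_hh)
    h = [0.0] * units  # assumption: the initial state h_0 is all zeros
    for x_t in sequence:  # one time step per row of the image
        h = [
            math.tanh(
                sum(w_hh[i][j] * h[j] for j in range(units))
                + sum(w_xh[i][k] * x_t[k] for k in range(len(x_t)))
            )
            for i in range(units)
        ]
    # Output computed from the last hidden state only
    y = [sum(v[o][i] * h[i] for i in range(units)) for o in range(len(v))]
    return h, y


def random_matrix(rows, cols, rng):
    """Hypothetical helper: a rows x cols matrix of small random weights."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
timesteps, features, units, outputs = 28, 28, 4, 10

w_xh = random_matrix(units, features, rng)  # input-to-hidden weights
w_hh = random_matrix(units, units, rng)     # hidden-to-hidden weights
v = random_matrix(outputs, units, rng)      # hidden-to-output weights

# A fake "image": 28 rows (time steps) of 28 pixel values (features)
image = [[rng.random() for _ in range(features)] for _ in range(timesteps)]

h_final, y = rnn_forward(image, w_xh, w_hh, v)
print(len(h_final), len(y))  # prints: 4 10
```

In this sketch the hidden state h_t has one entry per unit, and the shapes of w_xh and w_hh depend on the number of features and units but not on the number of time steps.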

Questions:

What exactly does "units=128" define? Is it the number of neurons in w_{hh} and w_{xh}? Is there any place where I can find this information?

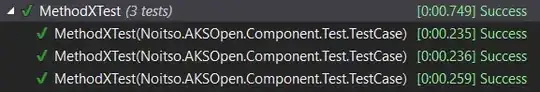

If I run

self.model.summary()

I get

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

simple_rnn (SimpleRNN) (None, 128) 20096

_________________________________________________________________

dense_35 (Dense) (None, 10) 1290

=================================================================

Total params: 21,386

Trainable params: 21,386

Non-trainable params: 0

_________________________________________________________________

How do I go from the number of units to the parameter counts "20096" and "1290"?
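As a sanity check on the summary, the arithmetic below reproduces both counts. It is a sketch under one assumption that the equations above do not show: each layer also has a bias vector, which is the Keras default (use_bias=True) for both SimpleRNN and Dense. The function names `simple_rnn_params` and `dense_params` are hypothetical helpers written for this example.

```python
def simple_rnn_params(units, features, use_bias=True):
    """Count weights of a simple RNN layer with the given sizes."""
    input_kernel = features * units   # w_xh: maps each input row to the hidden state
    recurrent_kernel = units * units  # w_hh: maps the previous hidden state to the new one
    bias = units if use_bias else 0   # assumption: one bias per unit (Keras default)
    return input_kernel + recurrent_kernel + bias


def dense_params(inputs, outputs, use_bias=True):
    """Count weights of a fully connected layer."""
    return inputs * outputs + (outputs if use_bias else 0)


rnn = simple_rnn_params(units=128, features=28)  # 28*128 + 128*128 + 128
dense = dense_params(inputs=128, outputs=10)     # 128*10 + 10
print(rnn, dense, rnn + dense)  # prints: 20096 1290 21386
```

Note that the number of time steps (the first 28 in the input shape) does not appear anywhere in this count; only the number of features per step and the number of units do.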

In this example the sequences always have the same length. However, if I am dealing with text, the sequences have variable length. So what exactly does input_shape=(28,28) mean? I could not find this information anywhere.