I know there are many questions that are answered on this topic. But I am not getting what they are saying.

Specifically, my question is why the worst case happens when the bottom level is exactly half full and why not in full?

I have browsed through this questions :

Worst case in Max-Heapify - How do you get 2n/3?

worst case in MAX-HEAPIFY: “the worst case occurs when the bottom level of the tree is exactly half full”

But my question is why we use term 'half full' when we can have worst-case when nodes in the tree are at its maximum?

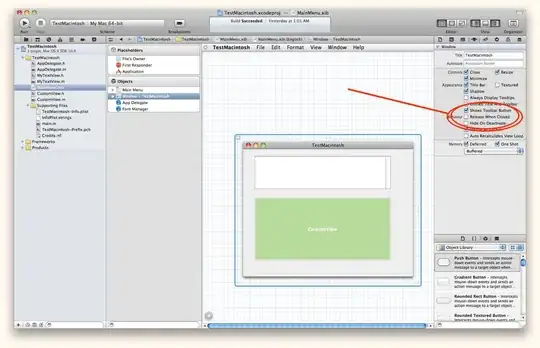

To support my point I have attached an image. Now height of A is 3 and the height of B is also 3. But number of times we call heapify will increase because now A will call heapify for n/2 which is 11/2 ~ 5 and in B we call heapify for 15/2 ~ 7 inside the main loop. Should this be a worst-case?

I am sure that I am somewhere wrong in this intuition but don't know where.