~500M documents That is quite a solid figure, good job if that's true. So here is how I see the solution of the problem:

- If you want to re-write/re-factor and rebuild the DB of an app. You could use versioning pattern.

How does it looks like?

Imagine you have a two collections (or even two databases, if you are using micro service architecture)

Relevant docs / Irrelevant docs.

Basically you could use find only on relevant docs collection (which store 5% of your useful docs) and if there is nothing, then use Irrelevant.find(). This pattern will allows you to store old/historical data. And manage it via TTL index or capped collection.

You could also add some Redis magic to it. (Which uses precisely the same logic), take a look:

This article can also be helpful (as many others, like this SO question)

But don't try to replace Mongo with Redis, team them up instead.

- Using

Indexes and .explain()

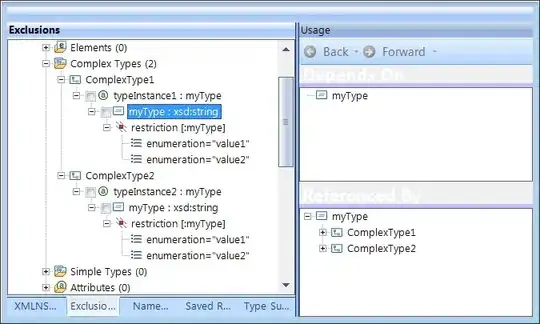

If - for example - I will add another boolean field for each document named "consumed" and index this field. Can I improve the query execution time somehow?

Yes, it will deal with your problem. To take a look, download MongoDB Compass, create this boolean field in your schema, (don't forget to add default value), index the field and then use Explain module with some query. But don't forget about compound indexes! If you create field on one index, measure the performance by queering only this one field.

The result should been looks like this:

If your index have usage (and actually speed-up) Compass will shows you it.

To measure the performance of the queries (with and without indexing), use Explain tab.

Actually, all this part can be done without Compass itself, via .explain and .index queries. But Compass got better visuals of this process, so it's better to use it. Especially since he becomes absolutely free for all.