I'm using Python with PIL (Pillow) and want to run code on image files that each contain two pixels of a given intensity, with the RGB value (0,0,255).

The pixels may also be close to (0,0,255) but slightly off, e.g. (0,1,255). I'd like to overwrite the two pixels closest to (0,0,255) with exactly (0,0,255).
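One way to express "close to (0,0,255)" is a per-channel tolerance checked over the whole image at once with NumPy, instead of pixel by pixel. This is a minimal sketch, assuming the image is already loaded as an (H, W, 3) RGB `uint8` array; the tolerance of 2 and the function name `snap_near_blue` are hypothetical choices for illustration.

```python
import numpy as np

BLUE = np.array([0, 0, 255], dtype=np.int16)

def snap_near_blue(rgb: np.ndarray, tol: int = 2) -> np.ndarray:
    """Return a copy of an (H, W, 3) uint8 array in which every pixel whose
    channels are each within `tol` of (0,0,255) is set to exactly (0,0,255).

    `tol` is a hypothetical threshold; tune it for your images.
    """
    # Use a signed type so the subtraction cannot wrap around in uint8.
    diff = np.abs(rgb.astype(np.int16) - BLUE)
    # True where all three channels are within the tolerance.
    mask = np.all(diff <= tol, axis=-1)
    out = rgb.copy()
    out[mask] = (0, 0, 255)
    return out

# Tiny 2x2 example: one exact blue, one near-blue, two other colours.
img = np.array([[[0, 0, 255], [0, 1, 255]],
                [[255, 255, 255], [10, 10, 200]]], dtype=np.uint8)
fixed = snap_near_blue(img)
```

Note that this changes every pixel within the tolerance, however many there are, rather than exactly two.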

Is this possible? If so, how?

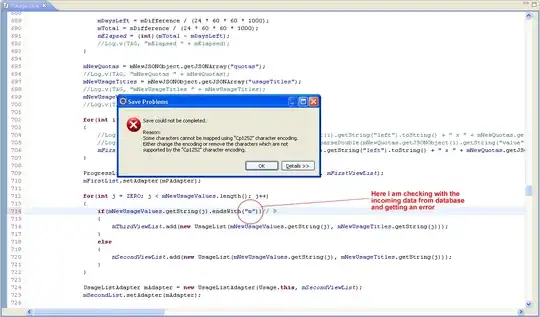

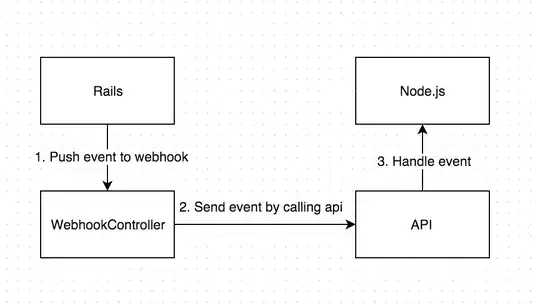

Above is an example image (image 1), and a zoomed-in view of the pixels I want to make "more blue" (image 2).

The code I'm trying to adapt comes from here:

```python
# import the necessary packages
import numpy as np
import scipy.spatial as sp
import matplotlib.pyplot as plt
import cv2
from PIL import Image, ImageDraw, ImageFont

# Stored all RGB values of main colors in an array
# main_colors = [(0,0,0),
#                (255,255,255),
#                (255,0,0),
#                (0,255,0),
#                (0,0,255),
#                (255,255,0),
#                (0,255,255),
#                (255,0,255),
#                ]
main_colors = [(0, 0, 0),
               (0, 0, 255),
               (255, 255, 255),
               ]

background = Image.open("test-small.tiff").convert('RGBA')
background.save("test-small.png")

retina = cv2.imread("test-small.png")
# convert BGR to RGB image
retina = cv2.cvtColor(retina, cv2.COLOR_BGR2RGB)
h, w, bpp = np.shape(retina)

# Build the KD-tree once: the palette is the same for every pixel
tree = sp.KDTree(main_colors)

# Change colors of each pixel
# reference: https://stackoverflow.com/a/48884514/9799700
for py in range(0, h):
    for px in range(0, w):
        ########################
        # Used this part to find nearest color
        # reference: https://stackoverflow.com/a/22478139/9799700
        input_color = (retina[py][px][0], retina[py][px][1], retina[py][px][2])
        distance, result = tree.query(input_color)
        nearest_color = main_colors[result]
        ###################
        retina[py][px][0] = nearest_color[0]
        retina[py][px][1] = nearest_color[1]
        retina[py][px][2] = nearest_color[2]
        print(str(px), str(py))

# show image
plt.figure()
plt.axis("off")
plt.imshow(retina)
plt.savefig('color_adjusted.png')
```

My logic is to reduce the list of candidate colours (`main_colors`) to just (0,0,255), my desired blue, and perhaps (255,255,255) for white (plus (0,0,0) for black, as in the code above), so that only black, white, or blue pixels come through.
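The loop above calls `tree.query` once per pixel, which for a 4000 x 4000 image is 16 million Python-level calls. SciPy's `KDTree.query` also accepts a whole array of points, so the same nearest-palette-colour logic can run in a single call. This is a minimal sketch, assuming the image is already an (H, W, 3) RGB `uint8` array (e.g. `retina` after `cvtColor`); `snap_to_palette` is a hypothetical helper name.

```python
import numpy as np
from scipy.spatial import KDTree

def snap_to_palette(rgb: np.ndarray, palette) -> np.ndarray:
    """Replace every pixel of an (H, W, 3) uint8 image with its nearest
    palette colour (Euclidean distance in RGB) using one vectorized query."""
    palette = np.asarray(palette, dtype=np.float64)
    tree = KDTree(palette)                      # built once for all pixels
    pixels = rgb.reshape(-1, 3).astype(np.float64)
    _, idx = tree.query(pixels)                 # idx[i] = nearest palette row
    return palette[idx].astype(np.uint8).reshape(rgb.shape)

main_colors = [(0, 0, 0), (0, 0, 255), (255, 255, 255)]
img = np.array([[[0, 1, 250], [20, 20, 20]],
                [[240, 240, 240], [5, 5, 255]]], dtype=np.uint8)
snapped = snap_to_palette(img, main_colors)
```

At 4000 x 4000 the float copy of the pixels takes a few hundred MB; processing the image in row chunks would reduce that if memory is tight.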

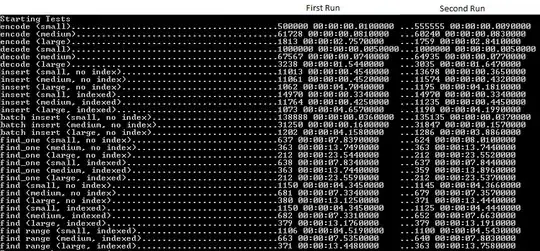

I've run the code on a smaller image, and it converts it as desired.

However, the code loops over every pixel, which is slow for larger images (I'm using images of 4000 x 4000 pixels). I would also like to save the output images at the same dimensions as the original file (which I expect is an option with `plt.savefig`).
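`plt.savefig` renders a Matplotlib figure, so the output size depends on the figure size and DPI rather than on the array. Writing the array directly with Pillow keeps it pixel-for-pixel. This is a minimal sketch, assuming the processed image is an (H, W, 3) RGB `uint8` array such as `retina`; `save_same_size` is a hypothetical helper name.

```python
import os
import tempfile

import numpy as np
from PIL import Image

def save_same_size(rgb: np.ndarray, path: str) -> None:
    # Pillow infers mode "RGB" from an (H, W, 3) uint8 array and writes it
    # pixel-for-pixel, so the file is exactly W x H with no figure margins.
    Image.fromarray(np.ascontiguousarray(rgb, dtype=np.uint8)).save(path)

# Example round trip with a small synthetic 3 x 5 (H x W) image.
arr = np.zeros((3, 5, 3), dtype=np.uint8)
arr[1, 2] = (0, 0, 255)
out_path = os.path.join(tempfile.mkdtemp(), "color_adjusted.png")
save_same_size(arr, out_path)

with Image.open(out_path) as im:
    size = im.size                       # (width, height)
    reloaded = np.asarray(im.convert("RGB"))
```

In the script above, this would replace the `plt.figure()` ... `plt.savefig(...)` lines. PNG is lossless, so the reloaded pixels match the array exactly.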

If this could be optimized, that would be ideal. Alternatively, picking the two "most blue" pixels (i.e. those closest to (0,0,255)) and rewriting them with (0,0,255) should be quicker and just as effective for me.
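For the "two most blue pixels" alternative in the last paragraph, NumPy can compute every pixel's distance to (0,0,255) at once and pick the two smallest with `np.argpartition`, which avoids a full sort. This is a minimal sketch, assuming an (H, W, 3) RGB `uint8` array and squared Euclidean distance in RGB as the measure of "closest" (the question doesn't fix a metric); `snap_two_bluest` is a hypothetical name.

```python
import numpy as np

def snap_two_bluest(rgb: np.ndarray, k: int = 2):
    """Set the k pixels closest to (0,0,255) (squared Euclidean distance in
    RGB) to exactly (0,0,255). Returns the modified copy and their
    (row, col) coordinates. Ties are broken arbitrarily."""
    # int32 avoids uint8 wrap-around; max squared distance 3*255**2 fits.
    diff = rgb.astype(np.int32) - np.array([0, 0, 255], dtype=np.int32)
    dist2 = (diff * diff).sum(axis=-1)          # (H, W) squared distances
    # After partitioning at k-1, the first k entries are the k smallest.
    nearest = np.argpartition(dist2.ravel(), k - 1)[:k]
    rows, cols = np.unravel_index(nearest, dist2.shape)
    out = rgb.copy()
    out[rows, cols] = (0, 0, 255)
    return out, list(zip(rows.tolist(), cols.tolist()))

img = np.full((3, 3, 3), 255, dtype=np.uint8)   # white background
img[0, 2] = (0, 1, 255)                           # near-blue
img[2, 1] = (2, 0, 253)                           # near-blue
img[1, 1] = (0, 0, 0)                             # black, not blue
fixed, coords = snap_two_bluest(img)
```

The order of the returned coordinates is not guaranteed, since `argpartition` only guarantees which elements land in the first k slots.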