I have an headerless & unstructured csv file where the first two records conform to a fixed layout but from the third row on wards the column numbers vary.

100,NEM12,202006011242,TCAUSTM,PROGREEN

200,VCCCCB0100,E1K1Q1,001,E1,N1,006668,KWH,15,

300,20200406,122.000,120.000,120.000,122.000,120.000,120.000,120.000,122.000,120.000,120.000,120.000,122.000,120.000,120.000,122.000,120.000,120.000,122.000,120.000,120.000,122.000,120.000,120.000,120.000,122.000,120.000,120.000,120.000,122.000,120.000,120.000,120.000,120.000,122.000,120.000,120.000,122.000,120.000,120.000,122.000,120.000,120.000,122.000,120.000,120.000,120.000,120.000,122.000,120.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,F16,28,,20200601113727,

300,20200407,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,4.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,4.000,0.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,2.000,0.000,F16,28,,20200601113727,

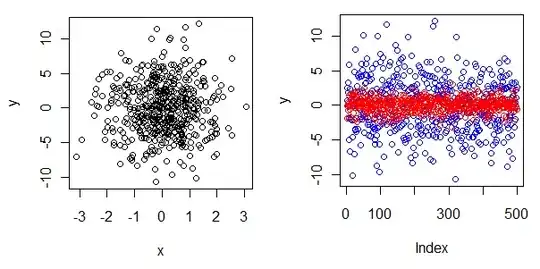

I have tried transposing the rows into columns as suggested here which produces an output as below

But ideally I would process the first two records as two columnar dataframes but transpose the 300 record as above, except that I would like to append VCCCCB0100, E1, 006668 & KWH added from the dataframe of 200 such that the transposed dataframe appears as below for every 300 record. The 300 dataframe borrows a lot of columns from the 200 record.