Do a simple timing:

    In [233]: x = np.random.random(100000)

    In [234]: timeit np.sort(x)
    6.79 ms ± 21 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

    In [235]: timeit x[np.argsort(x)]
    8.42 ms ± 220 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

    In [236]: %%timeit b = np.argsort(x)
         ...: x[b]
         ...:
    235 µs ± 694 ns per loop (mean ± std. dev. of 7 runs, 1000 loops each)

    In [237]: timeit np.argsort(x)
    8.08 ms ± 15.3 µs per loop (mean ± std. dev. of 7 runs, 100 loops each)

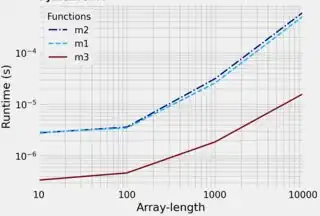

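Timing a single array size doesn't tell you the big-O complexity, but it does show the relative cost of each step: the argsort (about 8 ms) dominates, while indexing with an already-computed b takes only about 235 µs.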
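To get a rough feel for how the costs scale, the same three steps can be timed at several sizes. This is only a sketch: the helper name `time_steps`, the chosen sizes, and the repeat counts are my own assumptions, and the numbers will differ from machine to machine.

```python
import timeit
import numpy as np

def time_steps(n, repeats=5, number=10):
    """Best per-call time in seconds for each step on an array of size n.

    Hypothetical helper for illustration; not part of NumPy.
    """
    rng = np.random.default_rng(0)
    x = rng.random(n)
    b = np.argsort(x)  # precomputed index, excluded from the "index" timing

    def best(fn):
        # Take the minimum over several repeats to reduce timing noise
        return min(timeit.repeat(fn, repeat=repeats, number=number)) / number

    return {
        "sort": best(lambda: np.sort(x)),
        "argsort": best(lambda: np.argsort(x)),
        "index": best(lambda: x[b]),
    }

for n in (1_000, 10_000, 100_000):
    t = time_steps(n)
    print(n, {name: f"{sec * 1e6:.1f} µs" for name, sec in t.items()})
```

Comparing how each column grows as n increases by a factor of 10 gives an empirical hint at the scaling, though it is still not a proof of complexity.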

If you don't need the argsort, sort directly with np.sort. If you already have b, use it (x[b]) rather than sorting again.
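A small check of that advice, assuming a companion array `y` (my own addition, not in the timings above) that needs to be put in the same order as `x`:

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.random(100_000)
y = rng.random(x.size)  # hypothetical second array aligned with x

# Compute the sort order once
b = np.argsort(x)

# Reuse it: indexing is cheap compared with sorting again
x_sorted = x[b]
y_by_x = y[b]  # y reordered to follow x's sort order

# Indexing with the argsort result gives the same values as np.sort
assert np.array_equal(x_sorted, np.sort(x))
```

This is the case where the argsort earns its cost: one sort, then as many cheap reorderings as needed.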