The aim is to convert a dataframe with a list column as the data column (and thus with just one timestamp and duration per row) into a time series in long format with a datetimeindex for each single item.

In the result, there is no sequence/list per row for the data anymore, but just one value column.

df_test = pd.DataFrame({'timestamp': [1462352000000000000, 1462352100000000000, 1462352200000000000, 1462352300000000000],

'list': [[1,2,1,9], [2,2,3,0], [1,3,3,0], [1,1,3,9]],

'duration_sec': [3.0, 3.0, 3.0, 3.0]})

tdi = pd.DatetimeIndex(df_test.timestamp)

df_test.set_index(tdi, inplace=True)

df_test.drop(columns='timestamp', inplace=True)

df_test.index.name = 'datetimeindex'

Out:

list duration_sec

datetimeindex

2016-05-04 08:53:20 [1, 2, 1, 9] 3.0

2016-05-04 08:55:00 [2, 2, 3, 0] 3.0

2016-05-04 08:56:40 [1, 3, 3, 0] 3.0

2016-05-04 08:58:20 [1, 1, 3, 9] 3.0

The aim is:

value

datetimeindex

2016-05-04 08:53:20 1

2016-05-04 08:53:21 2

2016-05-04 08:53:22 1

2016-05-04 08:53:23 9

2016-05-04 08:55:00 2

2016-05-04 08:55:01 2

2016-05-04 08:55:02 3

2016-05-04 08:55:03 0

2016-05-04 08:56:40 1

2016-05-04 08:56:41 3

2016-05-04 08:56:42 3

2016-05-04 08:56:43 0

2016-05-04 08:58:20 1

2016-05-04 08:58:21 1

2016-05-04 08:58:22 3

2016-05-04 08:58:23 9

Mind that this means not just to take 1 second for each item; this was just taken to simplify the example. Instead, it is about 4 items in a sequence that has a given duration of, for example, 3.0 seconds (which may also vary from row to row), and where the first item of each sequence always starts at "time 0", meaning that the seconds per item should be calculated like

[3.0 sec / (4-1) items] = 1 sec.

Context:

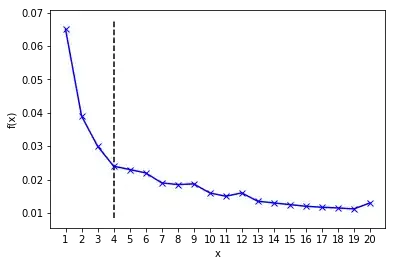

The example shows conversion to Datetimeindex since this makes it suitable for seasonal_decompose(), see this the first search hit.

There, the resulting df looks like this:

df_test2 = pd.read_csv('https://raw.githubusercontent.com/selva86/datasets/master/a10.csv', parse_dates=['date'], index_col='date')

Out:

value

date

1991-07-01 3.526591

1991-08-01 3.180891

1991-09-01 3.252221

1991-10-01 3.611003

1991-11-01 3.565869

...

2008-02-01 21.654285

2008-03-01 18.264945

2008-04-01 23.107677

2008-05-01 22.912510

2008-06-01 19.431740

[204 rows x 1 columns]

And then it is easy to apply a seasonal_decompose() via additive decomposition model:

result_add = seasonal_decompose(df_test2['value'], model='additive', extrapolate_trend='freq')

# Plot

plt.rcParams.update({'figure.figsize': (5,5)})

result_add.plot().suptitle('Additive Decompose', fontsize=22)

plt.show()

Now the same is needed for the df_test above.