We have a database created on SQL Server 2017 (Express edition) on one of our servers, and we are trying to move it to another server that has SQL Server 2014 (licensed version) installed. We have tried restoring a backup, detaching and attaching the database files, and generating scripts and running them on the new server.

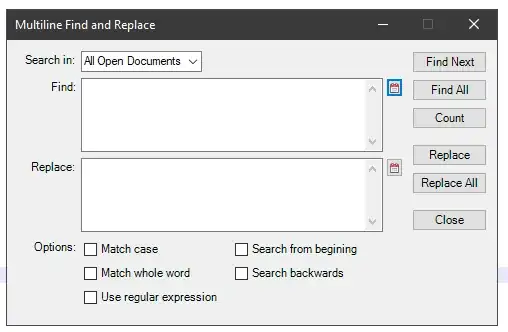

But unfortunately we are not able to restore the database. For backup/restore, we are getting following error message.

When generating scripts and running them on the new server, the problem is that the script file is around 3.88 GB, which is too large for us to open and edit before executing it. We also tried generating the scripts without data first and then with data only, but the data-only script still comes to around 3.88 GB (selecting only data makes very little difference).

What options do we have?