I am looking to optimize a piece of code that takes each row of one dataframe and checks whether any of its values appear, in the same column, in any row of a second dataframe. For each row of the first dataframe, I append that row to a list, followed by every row of the second dataframe that matches it. Rows of the second dataframe that match nothing are not appended to the list, as demonstrated by the code below.
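To make the matching rule concrete, here is a small sketch that lists, for each row of the first dataframe, the index labels of the second-dataframe rows it matches. It assumes the rule is "equal value in at least one of the same-named columns A, B or C" (the same `|` mask the loop uses) and reuses the sample data from the question; `matches` is just an illustrative name.

```python
import pandas as pd

# Sample data copied from the question.
df1 = pd.DataFrame({"A": ["a", "b", "c", "d"], "B": ["e", "f", "g", "h"],
                    "C": ["z", "l", "r", "s"], "index": [1, 2, 3, 4]})
df2 = pd.DataFrame({"A": ["q", "r", "c", "b"], "B": ["a", "b", "c", "d"],
                    "C": ["g", "g", "g", "g"]})

# For each df1 row (keyed by its "index" column), collect the df2 index labels
# whose A, B or C value equals that row's value in the same column.
matches = {}
for label, a, b, c in zip(df1["index"], df1["A"], df1["B"], df1["C"]):
    mask = (df2["A"] == a) | (df2["B"] == b) | (df2["C"] == c)
    matches[int(label)] = df2.index[mask].tolist()

print(matches)  # {1: [], 2: [3], 3: [2], 4: []}
```

Rows 1 and 4 have no partners, so in the final dataframe nothing from the second dataframe appears beneath them.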

I have written code to do this, but the problem is that it doesn't run fast enough with larger dataframes with millions of rows each. Is there any way to speed this up? I have tried incorporating itertuples and other methods to speed up the search but it still runs slow.

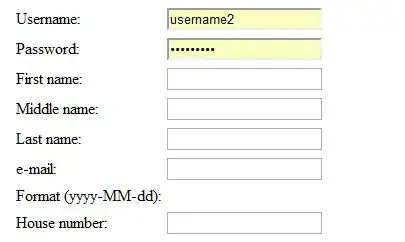

This is what the first dataframe looks like:

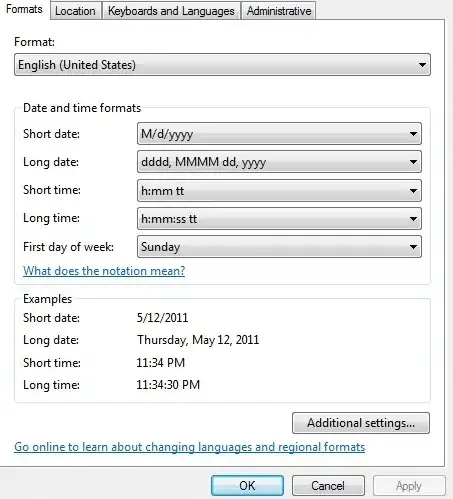

And this is what the second dataframe looks like:

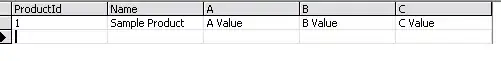

The code below produces the final dataframe following the logic described above. As you can see, the first-dataframe row with 'index' 1 (the column named `index`) shares no values with the second dataframe, so no rows from the second dataframe appear beneath it, whereas the row with 'index' 2 matches the last row of the second dataframe:

```python
import pandas as pd
import numpy as np

df1 = pd.DataFrame({"A": ["a", "b", "c", "d"], "B": ["e", "f", "g", "h"], "C": ["z", "l", "r", "s"], "index": [1, 2, 3, 4]})
df2 = pd.DataFrame({"A": ["q", "r", "c", "b"], "B": ["a", "b", "c", "d"], "C": ["g", "g", "g", "g"]})

g = []
for row in df1.itertuples():
    # Append the current df1 row (as a one-row DataFrame)...
    g.append(df1.loc[[row.Index]])
    # ...followed by every df2 row that shares a value with it in column A, B or C.
    g.append(df2.loc[
        (df2.A.values == row.A) |
        (df2.B.values == row.B) |
        (df2.C.values == row.C)
    ])

pd.concat(g)
```
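One possible way to remove the per-row Python loop is to replace the three column comparisons with three hash joins (`DataFrame.merge`), collect the matching (df1 position, df2 position) pairs, and then rebuild the interleaved order with a sort key. This is a sketch under assumptions, not a benchmarked solution: it assumes the compared columns are exactly A, B and C and that `==` equality is the intended rule, and `interleave_matches` plus the helper columns `i1`, `i2`, `_i1`, `_part`, `_i2` are hypothetical names. One behavioral difference: `merge` matches missing keys (NaN/None) to each other while `==` does not, so drop nulls from the key columns first if that matters.

```python
import numpy as np
import pandas as pd

df1 = pd.DataFrame({"A": ["a", "b", "c", "d"], "B": ["e", "f", "g", "h"],
                    "C": ["z", "l", "r", "s"], "index": [1, 2, 3, 4]})
df2 = pd.DataFrame({"A": ["q", "r", "c", "b"], "B": ["a", "b", "c", "d"],
                    "C": ["g", "g", "g", "g"]})


def interleave_matches(df1, df2, cols=("A", "B", "C")):
    """Hypothetical vectorized replacement for the itertuples loop."""
    # Positional row numbers, so duplicate index labels cannot confuse the join.
    left = df1[list(cols)].assign(i1=np.arange(len(df1)))
    right = df2[list(cols)].assign(i2=np.arange(len(df2)))

    # One join per column; each yields (i1, i2) pairs that are equal on that column.
    pairs = pd.concat(
        [left[["i1", c]].merge(right[["i2", c]], on=c)[["i1", "i2"]] for c in cols],
        ignore_index=True,
    ).drop_duplicates()  # a df2 row matching on several columns is kept once, like the | mask

    # Sort key: (df1 position, 0 for the df1 row / 1 for a df2 match, df2 position).
    top = df1.assign(_i1=np.arange(len(df1)), _part=0, _i2=-1)
    bottom = df2.iloc[pairs["i2"].to_numpy()].assign(
        _i1=pairs["i1"].to_numpy(), _part=1, _i2=pairs["i2"].to_numpy()
    )
    out = pd.concat([top, bottom]).sort_values(["_i1", "_part", "_i2"])
    return out.drop(columns=["_i1", "_part", "_i2"])


result = interleave_matches(df1, df2)
print(result)
```

Each df1 row is followed by its df2 matches in df2 order, and the original index labels are kept, mirroring what `pd.concat(g)` returns. Note that the output size is inherent to the problem: if a value is very common, the number of matched pairs (and rows in the result) can be large regardless of how the search is implemented.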