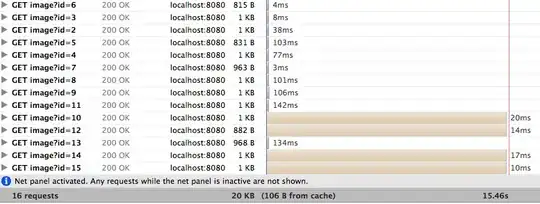

The serial version takes less time than the parallel one.

```c
/* Serial Version */
double start = omp_get_wtime();
for (i = 0; i < 1100; i++) {
    for (j = i; j < i + 4; j++) {
        fprintf(new_file, "%f ", S[j]);
    }
    fprintf(new_file, "\n");
    m = compute_m(S + i, 4);
    find_min_max(S + i, 4, &min, &max);
    S_i = inf(m, min, b);
    S_s = sup(m, max, b);
    if (S[i + 2] < S_i)
        Res[i] = S_i;
    else if (S[i + 2] > S_s)
        Res[i] = S_s;
    else
        Res[i] = ECG[i + 2];
    fprintf(output_f, "%f\n", Res[i]);
}
double end = omp_get_wtime();
printf("\n ------------- TIMING :: Serial Version -------------- ");
printf("\nStart = %.16g\nend = %.16g\nDiff_time = %.16g\n", start, end, end - start);
```

```c
/* Parallel Version */
double start = omp_get_wtime();
#pragma omp parallel for
for (i = 0; i < 1100; i++) {
    #pragma omp parallel for
    for (j = i; j < i + 4; j++) {
        /* serial code ... (inner-loop body from the serial version) */
    }
    /* serial code ... (rest of the loop body from the serial version) */
}
double end = omp_get_wtime();
printf("\n ------------- TIMING :: Parallel Version -------------- ");
printf("\nStart = %.16g\nend = %.16g\nDiff_time = %.16g\n", start, end, end - start);
```
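A likely contributor is the shape of the parallel loop itself. If `m`, `min`, `max`, `S_i` and `S_s` are declared before the loop (the snippet suggests so), they are shared by all threads and every iteration races on them. The inner `#pragma omp parallel for` over a 4-element window tries to open a new team per outer iteration; nested parallelism is usually disabled by default, so it mostly adds overhead. The `fprintf` calls serialize on the `FILE` lock and scramble the row order. The sketch below, assuming placeholder helpers, keeps one parallel loop, makes every temporary local to the iteration, and leaves file output out of the loop. `compute_m`, `find_min_max`, `inf` and `sup` are hypothetical stand-ins (the question does not show them), and `filter_row`, `filter_serial` and `filter_parallel` are illustrative names.

```c
#include <assert.h>

/* Hypothetical stand-ins: the question does not show these helpers,
   so a window mean, a min/max scan and simple band limits are assumed. */
static double compute_m(const double *w, int len) {
    double sum = 0.0;
    for (int k = 0; k < len; k++)
        sum += w[k];
    return sum / len;
}

static void find_min_max(const double *w, int len, double *min, double *max) {
    *min = *max = w[0];
    for (int k = 1; k < len; k++) {
        if (w[k] < *min) *min = w[k];
        if (w[k] > *max) *max = w[k];
    }
}

static double inf(double m, double min, double b) { return m - b * (m - min); }
static double sup(double m, double max, double b) { return m + b * (max - m); }

/* One iteration of the question's loop without the fprintf calls.
   Every temporary is a local variable, so each thread has its own copy. */
static void filter_row(const double *S, const double *ECG, double *Res,
                       int i, double b) {
    double min, max;
    double m = compute_m(S + i, 4);
    find_min_max(S + i, 4, &min, &max);
    double S_i = inf(m, min, b);
    double S_s = sup(m, max, b);
    if (S[i + 2] < S_i)
        Res[i] = S_i;
    else if (S[i + 2] > S_s)
        Res[i] = S_s;
    else
        Res[i] = ECG[i + 2];
}

/* S needs n + 3 elements, ECG n + 2, Res n. */
void filter_serial(const double *S, const double *ECG, double *Res,
                   int n, double b) {
    assert(n >= 0);
    for (int i = 0; i < n; i++)
        filter_row(S, ECG, Res, i, b);
}

void filter_parallel(const double *S, const double *ECG, double *Res,
                     int n, double b) {
    assert(n >= 0);
    /* A single parallel loop over i: no nested pragma on the 4-element
       window, and each iteration writes only its own Res[i]. */
    #pragma omp parallel for
    for (int i = 0; i < n; i++)
        filter_row(S, ECG, Res, i, b);
}
```

Both variants can then be timed around the compute call alone, with `Res` written to `output_f` in a plain serial loop afterwards. Even so, 1100 iterations of such a small body may be too little work to outweigh the cost of starting and synchronizing threads.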

I have tried this multiple times, and the serial execution is always faster. Why is the serial version faster here? Am I calculating the execution time the right way?
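On the timing question: `omp_get_wtime()` returns wall-clock seconds, so measuring `end - start` with it is a reasonable approach. A single measurement can still mislead, though. The first parallel region typically pays for starting the OpenMP thread team, and the timed region here also includes file I/O. A minimal sketch of a fairer measurement, assuming a warm-up call followed by the best of several repetitions: `now_seconds`, `best_time`, `Work` and `scale_parallel` are hypothetical names, and the `timespec_get` fallback (C11) is only used when the code is compiled without OpenMP.

```c
#include <assert.h>
#include <time.h>
#ifdef _OPENMP
#include <omp.h>
#endif

/* Wall-clock time in seconds: omp_get_wtime() when compiled with OpenMP,
   otherwise the C11 timespec_get() as a fallback. */
static double now_seconds(void) {
#ifdef _OPENMP
    return omp_get_wtime();
#else
    struct timespec ts;
    timespec_get(&ts, TIME_UTC);
    return (double)ts.tv_sec + (double)ts.tv_nsec * 1e-9;
#endif
}

/* Calls fn once untimed (warm-up, so thread-team startup on the first
   parallel region is not measured), then returns the fastest of
   `reps` timed calls. */
double best_time(void (*fn)(void *), void *arg, int reps) {
    assert(reps > 0);
    fn(arg); /* warm-up, not timed */
    double best = 0.0;
    for (int r = 0; r < reps; r++) {
        double t0 = now_seconds();
        fn(arg);
        double dt = now_seconds() - t0;
        if (r == 0 || dt < best)
            best = dt;
    }
    return best;
}

/* Hypothetical workload for illustration: scale an array in parallel. */
typedef struct {
    double *x;
    int n;
} Work;

void scale_parallel(void *arg) {
    Work *w = arg;
    #pragma omp parallel for
    for (int i = 0; i < w->n; i++)
        w->x[i] *= 1.0000001;
}
```

The same `best_time` wrapper can time the serial and the parallel loop, each wrapped in a function with the `fprintf` calls moved outside it, so both numbers measure only computation.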