On my Windows machine, I am trying to use Spark 2.4.6 without Hadoop, using spark-2.4.6-bin-without-hadoop-scala-2.12.tgz.

After setting SPARK_HOME, HADOOP_HOME, and SPARK_DIST_CLASSPATH using the information from the post linked here, I get this error when I try to start spark-shell:

    Error: A JNI error has occurred, please check your installation and try again
    Exception in thread "main" java.lang.NoClassDefFoundError: org/slf4j/Logger
        at java.lang.Class.getDeclaredMethods0(Native Method)
        at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)
        at java.lang.Class.privateGetMethodRecursive(Class.java:3048)
        at java.lang.Class.getMethod0(Class.java:3018)
        at java.lang.Class.getMethod(Class.java:1784)
        at sun.launcher.LauncherHelper.validateMainClass(LauncherHelper.java:544)
        at sun.launcher.LauncherHelper.checkAndLoadMain(LauncherHelper.java:526)
    Caused by: java.lang.ClassNotFoundException: org.slf4j.Logger
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 7 more

The post linked above, and many others, point to SPARK_DIST_CLASSPATH, but I already have it set in my system variables as:

$HADOOP_HOME;$HADOOP_HOME\etc\hadoop*;$HADOOP_HOME\share\hadoop\common\lib*;$HADOOP_HOME\share\hadoop\common*;$HADOOP_HOME\share\hadoop\hdfs*;$HADOOP_HOME\share\hadoop\hdfs\lib*;$HADOOP_HOME\share\hadoop\hdfs*;$HADOOP_HOME\share\hadoop\yarn\lib*;$HADOOP_HOME\share\hadoop\yarn*;$HADOOP_HOME\share\hadoop\mapreduce\lib*;$HADOOP_HOME\share\hadoop\mapreduce*;$HADOOP_HOME\share\hadoop\tools\lib*;

I also have this line in Spark's spark-env.sh:

export SPARK_DIST_CLASSPATH=$(C:\opt\spark\hadoop-2.7.3\bin\hadoop classpath)

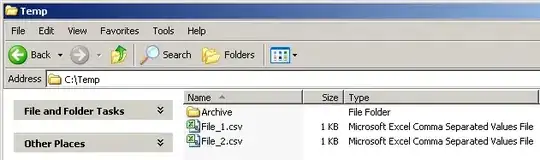

HADOOP_HOME = C:\opt\spark\hadoop-2.7.3

SPARK_HOME = C:\opt\spark\spark-2.4.6-bin-without-hadoop-scala-2.12
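One way to check whether any entry in a SPARK_DIST_CLASSPATH-style value actually provides org.slf4j.Logger is a small script like the one below. This is only a rough sketch and not part of my setup: the helper names `expand_entry` and `locate_class` are made up for illustration. It assumes the JVM rule that only an entry whose last path component is exactly `*` is expanded to the jars in that directory, and it deliberately does not expand `$HADOOP_HOME`-style references, so unexpanded entries simply match nothing.

```python
import os
import zipfile


def expand_entry(entry):
    """Expand one classpath entry roughly the way the JVM does.

    Only an entry whose last path component is exactly '*' is treated as a
    wildcard; it stands for every .jar/.JAR file directly in that directory.
    """
    entry = entry.strip()
    if not entry:
        return []
    if entry == "*" or entry.endswith(("/*", "\\*")):
        directory = entry[:-1] or "."
        if not os.path.isdir(directory):
            return []
        return sorted(
            os.path.join(directory, name)
            for name in os.listdir(directory)
            if name.endswith((".jar", ".JAR"))
        )
    return [entry]


def locate_class(classpath, class_name, sep=os.pathsep):
    """Return the classpath locations (jars or directories) containing class_name."""
    resource = class_name.replace(".", "/") + ".class"
    hits = []
    for entry in classpath.split(sep):
        for path in expand_entry(entry):
            if os.path.isdir(path):
                # Directory entry: look for the .class file on disk.
                if os.path.isfile(os.path.join(path, *resource.split("/"))):
                    hits.append(path)
            elif os.path.isfile(path) and zipfile.is_zipfile(path):
                # Jar entry: jars are zip archives with '/'-separated member names.
                with zipfile.ZipFile(path) as jar:
                    if resource in jar.namelist():
                        hits.append(path)
    return hits


if __name__ == "__main__":
    # Reads the variable exactly as the environment reports it.
    value = os.environ.get("SPARK_DIST_CLASSPATH", "")
    print(locate_class(value, "org.slf4j.Logger"))
```

An empty list would suggest that no entry, as written, exposes the slf4j classes to the launcher.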

When I tried the Spark 2.4.5 build that comes bundled with Hadoop, it worked just fine. This tells me there is something wrong with the way I have Hadoop set up. What am I missing here? Thanks!