Horrible Workaround

I've reproduced your issue here, and so far the only way I've got it to work is to replace the ü with a JSON Unicode escape sequence (\u00FC):

```powershell
# $tmpfile, $subscriptionId, $resourceGroupName, $serviceName and $username
# are defined earlier, as in the question
$data = @{
    properties = @{
        confirmation = "invite"
        firstName    = "Max"
        lastName     = "Müstermann"
        email        = "max.mustermann@example.org"
        appType      = "developerPortal"
    }
}

$json = $data | ConvertTo-Json -Compress;

# vvvvvvvvvvvvvvvvvvvvvv
$json = $json.Replace("ü", "\u00FC"); # <----- replace ü with escape sequence
# ^^^^^^^^^^^^^^^^^^^^^^

Set-Content -Path $tmpfile -Value $json;

$requestUri = "https://management.azure.com/" +
    "subscriptions/$subscriptionId/" +
    "resourceGroups/$resourceGroupName/" +
    "providers/Microsoft.ApiManagement/" +
    "service/$serviceName/" +
    "users/$username" +
    "?api-version=2019-12-01";

# quote the @-prefixed path so PowerShell passes it to az as a literal argument
az rest --method put --uri $requestUri --body "@$tmpfile"
```

which gives:

I know that's not a very satisfying solution, though, because it doesn't solve the general case of all non-ASCII characters, and hacking around with the converted JSON text might break things in weird edge cases.
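If you do stick with post-processing, the single Replace call can at least be generalised. The sketch below uses a hypothetical helper, ConvertTo-AsciiJson (not a built-in cmdlet), and relies on the fact that in valid JSON non-ASCII characters can only appear inside string literals, where a \uXXXX escape is an equivalent spelling. It assumes the input is already valid JSON, e.g. the output of ConvertTo-Json.

```powershell
# Hypothetical helper (not a built-in cmdlet): escapes every non-ASCII
# UTF-16 code unit in an already-serialized JSON string as \uXXXX.
function ConvertTo-AsciiJson {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyString()]
        [string] $Json
    )
    # .NET strings are UTF-16, so a character outside the BMP (e.g. an emoji)
    # is matched as two surrogate code units and comes out as a JSON
    # surrogate-pair escape such as \uD83D\uDE00.
    [regex]::Replace($Json, '[^\x00-\x7F]', {
        param($m)
        '\u{0:X4}' -f [int][char]$m.Value
    })
}

# usage, in place of the .Replace("ü", "\u00FC") line:
# $json = ConvertTo-AsciiJson -Json ($data | ConvertTo-Json -Compress)
```

Since ASCII characters are left untouched, structural JSON characters and existing escapes pass through unchanged.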

One extreme option would be to write your own JSON serializer that escapes the appropriate characters. I'm not saying that's a good idea, but it would potentially work. If you're thinking about going down that route, here's a raw PowerShell serializer I wrote a while ago for a very niche use case; you could use it as a starting point and tweak the string serialization code to escape the appropriate characters: ConvertTo-OctopusJson.ps1
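For the serializer route, the part that would need changing is the string-literal writer. As a rough illustration only (this is not the code from ConvertTo-OctopusJson.ps1, and the function name is hypothetical), a writer that escapes quotes, backslashes, control characters and everything outside printable ASCII might look like this:

```powershell
# Hypothetical string-literal writer for a hand-rolled JSON serializer.
function ConvertTo-JsonStringLiteral {
    param(
        [Parameter(Mandatory)]
        [AllowEmptyString()]
        [string] $Value
    )
    $sb = [System.Text.StringBuilder]::new()
    [void] $sb.Append('"')
    foreach ($c in $Value.ToCharArray()) {
        $code = [int] $c
        if ($c -eq [char] '"') {
            [void] $sb.Append('\"')    # escape double quote
        }
        elseif ($c -eq [char] '\') {
            [void] $sb.Append('\\')    # escape backslash
        }
        elseif ($code -lt 0x20 -or $code -gt 0x7E) {
            # control characters, DEL and every non-ASCII UTF-16 code unit
            [void] $sb.Append(('\u{0:X4}' -f $code))
        }
        else {
            [void] $sb.Append($c)      # printable ASCII passes through
        }
    }
    [void] $sb.Append('"')
    $sb.ToString()
}
```

Escaping per UTF-16 code unit keeps the writer simple while still producing valid JSON, because JSON represents characters outside the BMP as surrogate-pair escapes anyway.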

Hopefully that's enough to unblock you, or at least gives you some options to think about...

More Info

For anyone else investigating this issue, here are a few pointers:

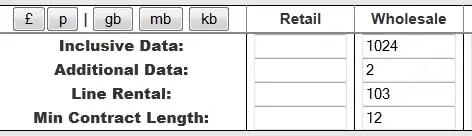

- You can get az to send requests via Fiddler if you set the following environment variables:

```bat
:: https://stackoverflow.com/questions/20500613/how-to-set-a-proxy-for-the-azure-cli-command-line-tool
set HTTPS_PROXY=https://127.0.0.1:8888

:: https://stackoverflow.com/questions/55463706/ssl-handshake-error-with-some-azure-cli-commands
set ADAL_PYTHON_SSL_NO_VERIFY=1
set AZURE_CLI_DISABLE_CONNECTION_VERIFICATION=1
```

- It looks like the

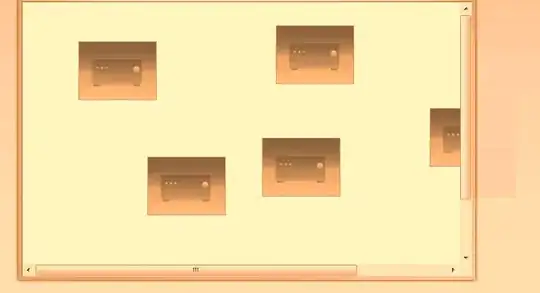

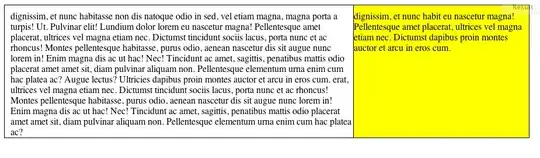

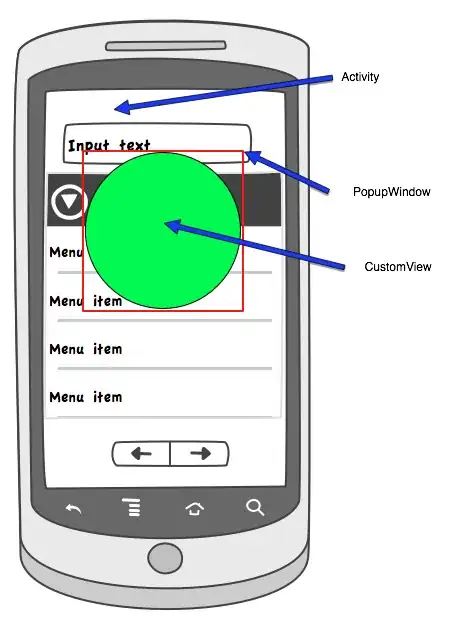

--body @$tmpfile parameter doesn't send the file as a binary blob. Instead, it reads the file as text and re-encodes it as ISO-8859-1 (the default encoding for HTTP). For example, if the file is UTF8-encoded with ü represented as C3 BC:

the file content still gets converted to in ISO-8859-1 encoding with ü represented as FC when az sends the HTTP request:

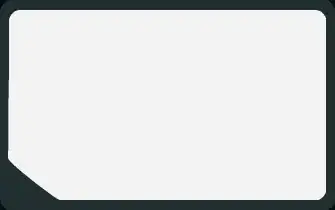

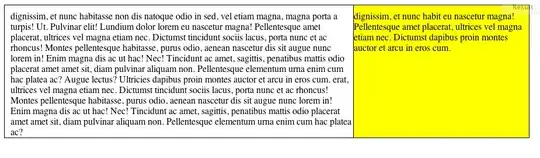

- The response comes back as this:

where � (EF BF BD) is the UTF-8 encoding of U+FFFD, the Unicode "REPLACEMENT CHARACTER":

- My guess is that the client side is sending the body as ISO-8859-1 but the server side is treating it as UTF-8. The server fails to decode the ISO-8859-1 byte FC as ü (0xFC is never a valid byte in UTF-8), replaces it with the "REPLACEMENT CHARACTER" instead, and finally encodes the response as UTF-8.

This can be simulated as follows:

```powershell
# client sends request encoded with iso-8859-1
$requestText = "Müstermann";
$iso88591 = [System.Text.Encoding]::GetEncoding("iso-8859-1");
$requestBytes = $iso88591.GetBytes($requestText);
Write-Host "iso-8859-1 bytes = '$($requestBytes | % { $_.ToString("X2") })'";
# iso-8859-1 bytes = '4D FC 73 74 65 72 6D 61 6E 6E'

# server decodes request with utf8 instead
$utf8 = [System.Text.Encoding]::UTF8;
$mangledText = $utf8.GetString($requestBytes);
Write-Host "mangled text = '$mangledText'";
# mangled text = 'M�stermann'

# server encodes the response as utf8
$responseBytes = $utf8.GetBytes($mangledText);
Write-Host "response bytes = '$($responseBytes | % { $_.ToString("X2") })'";
# response bytes = '4D EF BF BD 73 74 65 72 6D 61 6E 6E'
```

which seems to match up with the bytes in the screenshots, so it looks like it might be a bug in the Azure API Management API.
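Extending the same simulation suggests why the \u00FC workaround gets through: once the non-ASCII character is escaped, the JSON body is pure ASCII, and ASCII bytes are identical in ISO-8859-1 and UTF-8, so it shouldn't matter which encoding each side picks. This sketch assumes the same mismatch as the simulation (client encodes as ISO-8859-1, server decodes as UTF-8), with ConvertFrom-Json standing in for whatever JSON parser the server actually uses.

```powershell
# Assumed mismatch: client encodes as ISO-8859-1, server decodes as UTF-8.
$iso88591 = [System.Text.Encoding]::GetEncoding("iso-8859-1")
$utf8 = [System.Text.Encoding]::UTF8

# body after the workaround: ü has been replaced by the escape \u00FC
$escapedJson = '{"lastName":"M\u00FCstermann"}'

$requestBytes = $iso88591.GetBytes($escapedJson)
$serverText = $utf8.GetString($requestBytes)

# pure ASCII, so both encodings produce the same bytes and the text survives
Write-Host "survives mismatch = $($serverText -ceq $escapedJson)"
# survives mismatch = True

# a JSON parser (ConvertFrom-Json here) turns the escape back into ü
$lastName = ($serverText | ConvertFrom-Json).lastName
Write-Host "code point = $('{0:X4}' -f [int]$lastName[1])"
# code point = 00FC
```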