Overview

By using Scikit-Learn library, one can consider different Decision Trees to forecast data. In this example, we'll be using an AdaBoostRegressor, but alternatively, one can switch to RandomForestRegressor or any other tree available. Thus, by choosing trees one should we aware of removing the trend to the data, in this way, we illustrate the example of controlling the mean and variance of the time series by differencing and applying logarithm transform respectively to the data.

Preparing the data

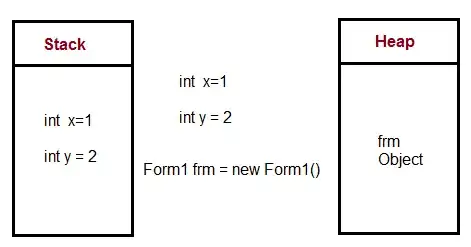

A time series has two basic components, it's mean and it's variance. Ideally, we would like to control this components, for the variability, we can simply apply a logarithm transformation on the data, and for the trend we can differentiate it, we will see this later.

Additionally, for this approach, we're considering that the actual value y_t can be explained by the two prior value y_t-1 and y_t-2. You can play around with these lags values by modifying the input to the range function.

# Load data

tsdf = pd.read_csv('tsdf.csv', sep="\t")

# For simplicity I just take the target variable and the date

tsdf = tsdf[['sales_index', 'dates']]

# Log value

tsdf['log_sales_index'] = np.log(tsdf.sales_index)

# Add previous n-values

for i in range(3):

tsdf[f'sales_index_lag_{i+1}'] = tsdf.sales_index.shift(i+1)

# For simplicity we drop the null values

tsdf.dropna(inplace=True)

tsdf[f'log_sales_index_lag_{i+1}'] = np.log(tsdf[f'sales_index_lag_{i+1}'])

tsdf[f'log_difference_{i+1}'] = tsdf.log_sales_index - tsdf[f'log_sales_index_lag_{i+1}']

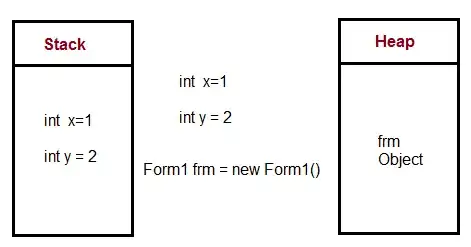

Once our data is ready, we get to a result similar to the one on the image below.

Is data stationary?

To control the mean component of the time series, we should perform some differencing on the data. In order to determine whether this step is required we can perform a unit root test. There are several test for this that make different assumptions, a list of some unit root tests can be found here.

For simplicity, we're going to consider the KPSS test, by this we assumme as null hypothesis that the data is stationary, particularly, it assumess stationarity around a mean or a linear trend.

from arch.unitroot import KPSS

# Test for stationary

kpss_test = KPSS(tsdf.sales_index)

# Test summary

print(kpss_test.summary().as_text())

We see that the P-value = .280 is greater than the usual convention of 0.05. Therefore, we would need to apply a first difference to the data. As a side note, one can iteratively perform this test to know how many differences should be applied to the data.

In the graph below, we see a comparison of the original data vs the log first difference of it, notice there is a sudden change in these last values of the time series, this appears to be a structural change but we are not going to deep dive on it. If you want to dig more on this topic these slides from Bruce Hansen are useful.

plt.figure(figsize=(12, 6))

plt.subplot(1,2,1)

plt.plot(tsdf.sales_index)

plt.title('Original Time Series')

plt.subplot(1,2,2)

plt.plot(tsdf.log_difference_1)

plt.title('Log first difference Time Series')

Decision Tree Model

As we've previously said, we're considering Decision Tree Models, when using them one should be aware of removing the trend from the time series. For example, if you have an upward trend, tress are not good at predicting a downward trend. In the code example below, I've choose the AdaBoostRegressor, but you're free to choose other tree model. Additionnaly, notice that log_difference_1 is consider to be explained by log_difference_2 and log_difference_3.

Note. Your dataset has other covariates as aus_avg_rain or slg_adt_ctl, so to consider them for predicting you could apply as well lag values on them.

from sklearn.model_selection import train_test_split

from sklearn.ensemble import AdaBoostRegressor

# Forecast difference of log values

X, Y = tsdf[['log_difference_2', 'log_difference_3']], tsdf['log_difference_1']

# Split in train-test

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2, shuffle=False, random_state=0)

# Initialize the estimator

mdl_adaboost = AdaBoostRegressor(n_estimators=500, learning_rate=0.05)

# Fit the data

mdl_adaboost.fit(X_train, Y_train)

# Make predictions

pred = mdl_adaboost.predict(X_test)

test_size = X_test.shape[0]

Evaluating the prediction

test_size = X_test.shape[0]

plt.plot(list(range(test_size)), np.exp(tsdf.tail(test_size).log_sales_index_lag_1 + pred), label='predicted', color='red')

plt.plot(list(range(test_size)), tsdf.tail(test_size).sales_index, label='real', color='blue')

plt.legend(loc='best')

plt.title('Predicted vs Real with log difference values')

It seems that Decision Tree model is accurately predicting the real values. However, to evaluate the model performance we should consider an evaluation metric, a good intro to this topic can be found on this article, feel free to pick the one that is more convenient to your approach. I'm just going to go with the TimeSeriesSplit function from scikit-learn to assess model's error through the mean absolute error.

from sklearn.model_selection import TimeSeriesSplit

from sklearn.metrics import mean_absolute_error

X, Y = np.array(tsdf[['log_difference_2', 'log_difference_3']]), np.array(tsdf['log_difference_1'])

# Initialize a TimeSeriesSplitter

tscv = TimeSeriesSplit(n_splits=5)

# Retrieve log_sales_index and sales_index to unstransform data

tsdf_log_sales_index = np.array(tsdf.copy().reset_index().log_sales_index_lag_1)

tsdf_sales_index = np.array(tsdf.copy().reset_index().sales_index_lag_1)

# Dict to store metric value at every iteration

metric_iter = {}

for idx, val in enumerate(tscv.split(X)):

train_i, test_i = val

X_train, X_test = X[train_i], X[test_i]

Y_train, Y_test = Y[train_i], Y[test_i]

# Initialize the estimator

mdl_adaboost = AdaBoostRegressor(n_estimators=500, learning_rate=0.05)

# Fit the data

mdl_adaboost.fit(X_train, Y_train)

# Make predictions

pred = mdl_adaboost.predict(X_test)

# Unstransform predictions

pred_untransform = [np.exp(val_test + val_pred) for val_test, val_pred in zip(tsdf_log_sales_index[test_i], pred)]

# Real value

real = tsdf_sales_index[test_i]

# Store metric

metric_iter[f'iter_{idx + 1}'] = mean_absolute_error(real, pred_untransform)

Now we see that the average MAE error is quite low.

print(f'Average MAE error: {np.mean(list(metric_iter.values()))}')

>>> Average MAE error: 17.631090959806535