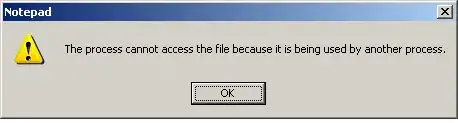

I'm trying to fit an exponential function using scipy.optimize.curve_fit() (example data and code below), but it always raises a RuntimeError like this: RuntimeError: Optimal parameters not found: Number of calls to function has reached maxfev = 5000. I'm not sure where I'm going wrong.

import numpy as np

from scipy.optimize import curve_fit

x = np.arange(-1, 1, .01)

param1 = [-1, 2, 10, 100]

fit_func = lambda x, a, b, c, d: a * np.exp(b * x + c) + d

y = fit_func(x, *param1)

popt, _ = curve_fit(fit_func, x, y, maxfev=5000)
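A possible explanation, offered as a sketch rather than a confirmed diagnosis: in `a * np.exp(b * x + c) + d` the parameters `a` and `c` are redundant, since `a * exp(c)` acts as a single amplitude, and `curve_fit` starts every parameter at 1 when no `p0` is given, which is far from an amplitude of about `-exp(10)`. The variant below assumes a reduced three-parameter model and a data-derived starting guess; the names `model`, `A`, and `p0` are illustrative and not part of the original code.

```python
import numpy as np
from scipy.optimize import curve_fit

x = np.arange(-1, 1, .01)
y = -1 * np.exp(2 * x + 10) + 100  # same data as in the question

# Hypothetical reduced model: A stands in for a * exp(c).
def model(x, A, b, d):
    return A * np.exp(b * x) + d

# Rough starting guess from a straight-line fit to log(-y).
# Assumes y < 0 everywhere (true for this data) and ignores d.
slope, intercept = np.polyfit(x, np.log(-y), 1)
p0 = [-np.exp(intercept), slope, 0.0]

popt, _ = curve_fit(model, x, y, p0=p0, maxfev=5000)
A_fit, b_fit, d_fit = popt
```

With this parametrization only the product `a * exp(c)` can be recovered (as `A_fit`), not `a` and `c` separately.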