I am practicing vectorization with Pandas, and I discovered a counter-intuitive case where a chain of built-in vectorized methods is slower than applying a naive Python function (to extract the first digit of every number in a Series):

    import sys
    import numpy as np
    import pandas as pd

    s = pd.Series(np.arange(100_000))

    def first_digit(x):
        # Convert to text, take the leading character, convert back to int
        return int(str(x)[0])

    # np.str / np.int were aliases of the builtins and are removed in recent NumPy
    s.astype(str).str[0].astype(int)  # 218ms "built-in"
    s.apply(first_digit)              # 104ms "apply"
    s.map(first_digit)                # 104ms "map"
    np.vectorize(first_digit)(s)      # 78ms "vectorized"

All 4 implementations produce the same values (strictly speaking, the `np.vectorize` call returns a NumPy array rather than a Pandas Series), and I completely understand that the vectorized function call might be faster than the per-element apply/map.
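To back up the claim that the four variants agree, here is a minimal sketch of an equality check. It assumes a recent NumPy, so it uses the builtins `str` and `int` in place of `np.str` and `np.int`; the variable names (`builtin`, `applied`, `mapped`, `vectorized`, `results_match`) are only illustrative.

```python
import numpy as np
import pandas as pd

s = pd.Series(np.arange(100_000))

def first_digit(x):
    return int(str(x)[0])

builtin = s.astype(str).str[0].astype(int)
applied = s.apply(first_digit)
mapped = s.map(first_digit)
vectorized = np.vectorize(first_digit)(s)

# Compare the underlying values only, ignoring container type and index.
results_match = all(
    np.array_equal(builtin.to_numpy(), np.asarray(other))
    for other in (applied, mapped, vectorized)
)
print(results_match)
```

Comparing through `np.asarray` sidesteps the difference between a Series and a plain array, so the check is only about the computed digits.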

However, I am puzzled why using the built-in methods is slower. While I would be interested in an actual answer too, I am more interested in the smallest set of tools I have to learn to be able to evaluate my hypothesis about the performance.

My hypothesis is that the chain of method calls creates 2 extra intermediate Pandas Series, and that the values of those Series are evaluated greedily, causing CPU cache misses (having to load the intermediate Series from RAM).

I have no idea how to confirm or falsify the following steps in that hypothesis:

- are the intermediate Series / numpy arrays evaluated greedily or lazily?

- would it cause CPU cache misses?

- what other explanations do I need to consider?
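One possible starting point for the first question is to split the chain into its links and time each one separately with the standard-library `timeit` module. This is only a sketch: the names `as_str`, `first_char`, `as_int`, `stages` and `timings` are made up for illustration, and the builtins `str` and `int` stand in for `np.str` and `np.int`. It shows where the time goes, but it does not measure cache misses; that would need a hardware-counter profiler.

```python
import timeit

import numpy as np
import pandas as pd

s = pd.Series(np.arange(100_000))

# Pandas evaluates eagerly: each step returns a fully materialized Series.
as_str = s.astype(str)
first_char = as_str.str[0]
as_int = first_char.astype(int)
print(type(as_str).__name__, as_str.dtype, len(as_str))

# Time each link of the chain on its own, reusing the intermediates above.
stages = {
    "astype(str)": lambda: s.astype(str),
    ".str[0]": lambda: as_str.str[0],
    "astype(int)": lambda: first_char.astype(int),
}
timings = {
    name: min(timeit.repeat(fn, number=1, repeat=5))
    for name, fn in stages.items()
}
for name, seconds in timings.items():
    print(f"{name:>12}: {seconds * 1e3:.1f} ms")
```

Taking the minimum over a few repeats reduces noise from other processes. If one stage dominates, that is where a line-level profiler would be worth pointing next.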

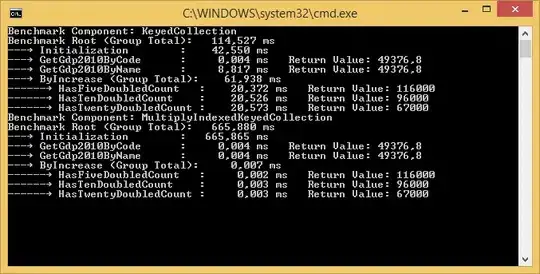

Screenshot of my measurements: