I have data with an id and a value, like this:

df = pd.DataFrame({

"id": [1,1,1,1,2,2,2,3,3],

"value": ["a", "a", "a", "b", "a", "b", "c", "c", "c"]

})

df

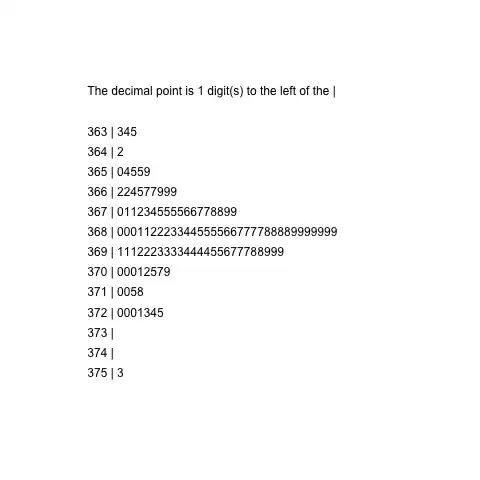

As seen above, the id can occure multiple times. I want to aggregate the data from each id into one row, to get something like this:

I have code that accomplishes that in two steps. First I add the count to each row:

counts = df.groupby("id")["value"].value_counts()

def get_counts(x, instance):

try:

return counts.loc[x["id"], instance]

except:

return 0

for i in df["value"].unique():

df["value_count_" + i] = df.apply(lambda x: get_counts(x, i), axis=1)

df

Then I drop the value column and then the duplicates:

df.drop(columns="value").drop_duplicates()

However, although this works, it takes a lot of time for larger datasets and I'm sure there is a more efficient way to get the counts in the first step.

Any ideas?

Thanks in advance!