Note: When you install libraries on a cluster via Jars, Maven, or PyPI, they are stored under the DBFS folder dbfs:/FileStore.

- For interactive clusters, jars are located at: dbfs:/FileStore/jars
- For automated (job) clusters, jars are located at: dbfs:/FileStore/job-jars

There are a couple of ways to download an installed jar file from DBFS in your Databricks workspace to your local machine.

GUI Method: You can use DBFS Explorer

DBFS Explorer was created as a quick way to upload files to and download files from the Databricks File System (DBFS). It works with both AWS and Azure instances of Databricks.

To connect, you need to create a bearer token in the Databricks web interface.

Step 1: Download DBFS Explorer from https://datathirst.net/projects/dbfs-explorer and install it.

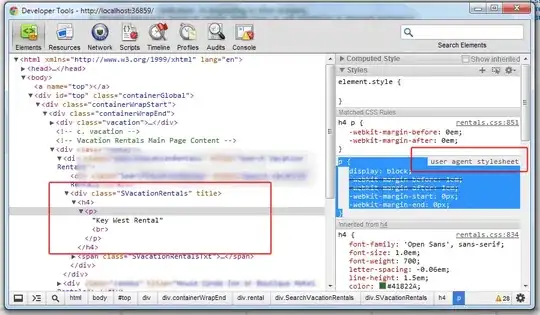

Step 2: Create a bearer token:

- Click the user profile icon in the upper-right corner of your Databricks workspace.
- Click User Settings.
- Go to the Access Tokens tab.
- Click the Generate New Token button.

Note: Copy the generated token and store it in a secure location; Databricks displays it only once.

Step 3: Open DBFS Explorer for Databricks, enter your workspace host URL and the bearer token, and continue.

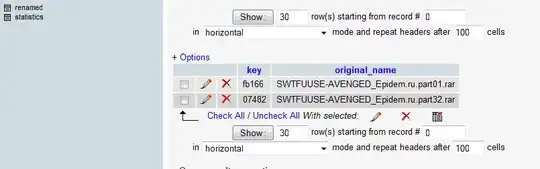
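If you would rather script this than use the GUI, the same host URL and bearer token can be sent to the DBFS REST API. The sketch below is a minimal example, assuming the DBFS API 2.0 `list` endpoint (`GET /api/2.0/dbfs/list?path=...`), which returns a `files` array whose entries carry `path` and `is_dir`. The helper names `dbfs_get` and `jar_paths` are hypothetical, and the host and token in the usage comment are placeholders, not real values.

```python
import json
import urllib.parse
import urllib.request


def dbfs_get(host, token, endpoint, params):
    """Call GET {host}/api/2.0/dbfs/{endpoint} with a bearer token; return parsed JSON.

    Hypothetical helper: assumes the DBFS REST API 2.0 endpoint layout.
    """
    url = f"{host.rstrip('/')}/api/2.0/dbfs/{endpoint}?{urllib.parse.urlencode(params)}"
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def jar_paths(list_response):
    """Return the paths of .jar files in a dbfs/list response, skipping directories.

    An empty folder may come back without a "files" key, so default to [].
    """
    return [
        entry["path"]
        for entry in list_response.get("files", [])
        if not entry.get("is_dir") and entry["path"].endswith(".jar")
    ]


# Example usage (placeholders; use your own workspace URL and the token from Step 2):
# listing = dbfs_get("https://<your-workspace-url>", "<your-bearer-token>",
#                    "list", {"path": "/FileStore/jars"})
# print(jar_paths(listing))
```

Use "/FileStore/job-jars" as the path to list jars installed on automated clusters.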

Step 4: Navigate to the DBFS folder FileStore => jars (or FileStore => job-jars for jars installed on automated clusters), select the jar you want to download, click Download, and choose a destination folder on your local machine.
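The download itself can also be scripted with the bearer token. This is a minimal sketch, assuming the DBFS API 2.0 `read` endpoint (`GET /api/2.0/dbfs/read`), which returns at most 1 MB per call as base64 in `data` together with a `bytes_read` count, so a jar is fetched in chunks by advancing `offset`. The helpers `make_fetch`, `read_all`, and `download` are hypothetical, and the host, token, and jar name in the usage comment are placeholders.

```python
import base64
import json
import urllib.parse
import urllib.request

CHUNK = 1024 * 1024  # assumed per-call limit of the dbfs/read endpoint (1 MB)


def make_fetch(host, token):
    """Return fetch(path, offset) that calls GET /api/2.0/dbfs/read with a bearer token."""
    def fetch(path, offset):
        query = urllib.parse.urlencode({"path": path, "offset": offset, "length": CHUNK})
        req = urllib.request.Request(
            f"{host.rstrip('/')}/api/2.0/dbfs/read?{query}",
            headers={"Authorization": f"Bearer {token}"},
        )
        with urllib.request.urlopen(req) as resp:
            return json.load(resp)
    return fetch


def read_all(path, fetch):
    """Read a DBFS file chunk by chunk via fetch and return its bytes."""
    parts, offset = [], 0
    while True:
        resp = fetch(path, offset)
        n = resp.get("bytes_read", 0)
        if n == 0:  # nothing left to read
            break
        parts.append(base64.b64decode(resp["data"]))
        offset += n
        if n < CHUNK:  # a short read means the end of the file was reached
            break
    return b"".join(parts)


def download(path, local_file, fetch):
    """Write the DBFS file at path to local_file; return the number of bytes written."""
    data = read_all(path, fetch)
    with open(local_file, "wb") as out:
        out.write(data)
    return len(data)


# Example usage (placeholders):
# fetch = make_fetch("https://<your-workspace-url>", "<your-bearer-token>")
# download("/FileStore/jars/<jar-name>.jar", "<jar-name>.jar", fetch)
```

Passing `fetch` in as a parameter keeps the chunking logic separate from the HTTP call, so it can be checked with a fake that serves bytes from memory.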

CLI Method: You can use the Databricks CLI

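Step 1: Install the Databricks CLI and configure it with your Databricks credentials (the workspace host URL and a bearer token). With the legacy CLI, for example, run `pip install databricks-cli` and then `databricks configure --token`.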

Step 2: Use the CLI command `dbfs cp`, which copies files to and from DBFS.

Syntax: dbfs cp <SOURCE> <DESTINATION>

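Example: dbfs cp "dbfs:/FileStore/azure.txt" "C:\Users\Name\Downloads"

Do not end a quoted Windows path with a backslash: `\"` is parsed as an escaped quote, so "C:\Users\Name\Downloads\" would reach the CLI as C:\Users\Name\Downloads" instead. To download a jar, use its path under dbfs:/FileStore/jars (or dbfs:/FileStore/job-jars) as the source.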