I'm setting up a Hadoop cluster with YARN on two VirtualBox VMs. When I run start-all.sh (start-dfs.sh and start-yarn.sh), jps shows the daemons running on both the master and the slave, but when I open master-ip:9870 in a browser, no DataNode has started.

core-site.xml:

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://hadoop-master:9000</value>
      </property>
    </configuration>

hdfs-site.xml:

    <configuration>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/home/hadoopuser/hadoop/data/nameNode</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/home/hadoopuser/hadoop/data/dataNode</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
      </property>
    </configuration>

mapred-site.xml:

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>yarn.app.mapreduce.am.env</name>
        <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
      </property>
      <property>
        <name>mapreduce.map.env</name>
        <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
      </property>
      <property>
        <name>mapreduce.reduce.env</name>
        <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
      </property>
    </configuration>

yarn-site.xml:

    <configuration>
      <property>
        <name>yarn.acl.enable</name>
        <value>0</value>
      </property>
      <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>hadoop-master</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
    </configuration>

workers:

    hadoop-slave1

/etc/hosts:

    master-ip hadoop-master
    slave-ip hadoop-slave1

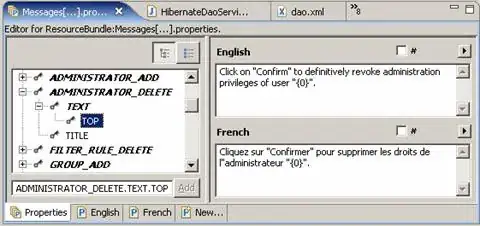
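
To rule out a name-resolution or connectivity problem, a check along these lines could be run on each VM. This is only a sketch: the function names `is_loopback` and `port_open` are made up for illustration, the hostnames come from /etc/hosts above, and port 9000 is the one in fs.defaultFS. I have not confirmed that this is the cause.

```python
import ipaddress
import socket


def is_loopback(ip: str) -> bool:
    """Return True if ip is a loopback address such as 127.0.0.1 or 127.0.1.1."""
    return ipaddress.ip_address(ip).is_loopback


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Hostnames as written in /etc/hosts on both machines.
    for host in ("hadoop-master", "hadoop-slave1"):
        try:
            ip = socket.gethostbyname(host)
        except socket.gaierror as exc:
            print(f"{host}: does not resolve ({exc})")
            continue
        note = " (loopback: other machines cannot reach this)" if is_loopback(ip) else ""
        print(f"{host} -> {ip}{note}")
    # 9000 is the NameNode RPC port from fs.defaultFS in core-site.xml.
    print("NameNode RPC reachable:", port_open("hadoop-master", 9000))
```

If hadoop-master resolves to a loopback address on the master itself, or the port is not reachable from the slave, the DataNode would have no way to register with the NameNode even though its process shows up in jps.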

The configuration above is the same on both the master and the slave machine.
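
To double-check that the files really are identical on both machines, the properties could be dumped on each VM and the two outputs compared. A minimal sketch, assuming the standard `<configuration>/<property>/<name>,<value>` layout shown above; `site_properties` is a hypothetical helper name, and the file paths are whatever is passed on the command line.

```python
import sys
import xml.etree.ElementTree as ET


def site_properties(root: ET.Element) -> dict:
    """Map each <property>'s <name> to its <value> under a <configuration> root."""
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


if __name__ == "__main__":
    # Pass the paths of the *-site.xml files to inspect as arguments.
    for path in sys.argv[1:]:
        root = ET.parse(path).getroot()
        for name, value in sorted(site_properties(root).items()):
            print(f"{path}\t{name}={value}")
```

Running this on the master and on the slave and diffing the output would show any property that differs between the two.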

I also have JAVA_HOME, HADOOP_HOME and PDSH_RCMD_TYPE set in my .bashrc. I created an SSH key on the master and added it to the slave's authorized keys to allow SSH connections.

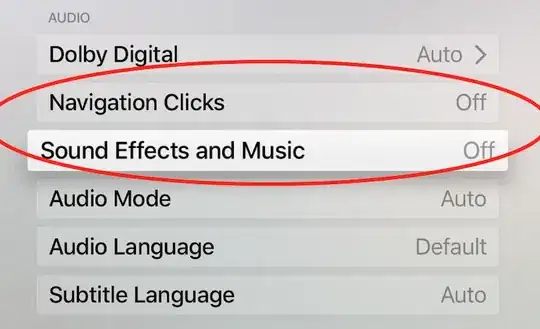

On the master machine I get this output:

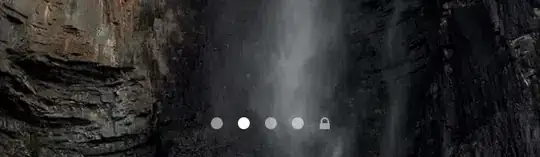

On my slave machine:

I have 0 nodes in the HDFS web UI:

But I can see the slave node in YARN:

I deleted the Hadoop tmp files and the DataNode folders before formatting HDFS on the master and starting all processes. I'm using Hadoop 3.2.1.
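
Because the storage directories were wiped and HDFS was reformatted, one thing that could be compared is the clusterID that HDFS records in `current/VERSION` under each storage directory; a DataNode whose clusterID differs from the NameNode's is rejected. The sketch below only reads that file. `read_version` is a hypothetical helper name, and the directories to pass in are the ones from hdfs-site.xml (the nameNode directory on the master, the dataNode directory on the slave).

```python
import sys
from pathlib import Path


def read_version(path) -> dict:
    """Parse a Hadoop storage VERSION file (key=value lines) into a dict."""
    props = {}
    for line in Path(path).read_text().splitlines():
        line = line.strip()
        # Skip blank lines and the leading '#' timestamp comment.
        if line and not line.startswith("#") and "=" in line:
            key, _, value = line.partition("=")
            props[key.strip()] = value.strip()
    return props


if __name__ == "__main__":
    # Example argument: /home/hadoopuser/hadoop/data/nameNode
    for storage_dir in sys.argv[1:]:
        version = Path(storage_dir) / "current" / "VERSION"
        if version.exists():
            print(storage_dir, read_version(version).get("clusterID"))
        else:
            print(storage_dir, "has no current/VERSION")
```

If the two clusterID values match, or the DataNode directory has no VERSION file at all, this is not the problem and the DataNode log on the slave would be the next place to look.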