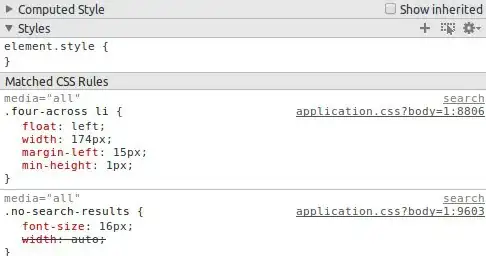

One approach is to use template matching.

If you use the first image as a template:

Source image:

Result will be:

Steps:

- Convert both the template and source images to grayscale and apply Canny edge detection.

template = cv2.imread("template_resized.jpg")

template = cv2.cvtColor(template, cv2.COLOR_BGR2GRAY)

template = cv2.Canny(template, 50, 200)


source = cv2.imread("source_resized.jpg")

source = cv2.cvtColor(source, cv2.COLOR_BGR2GRAY)

source = cv2.Canny(source, 50, 200)

- Check whether the template matches the source image

result = cv2.matchTemplate(source, template, cv2.TM_CCOEFF)

We need the maximum value and its location from the result:

(_, maxVal, _, maxLoc) = cv2.minMaxLoc(result)


- Get the coordinates and draw the rectangle

(startX, startY) = (int(maxLoc[0] * r), int(maxLoc[1] * r))

(endX, endY) = (int((maxLoc[0] + w) * r), int((maxLoc[1] + h) * r))

Draw the rectangle:

cv2.rectangle(image, (startX, startY), (endX, endY), (0, 0, 255), 2)

Possible Question: Why didn't you use the original image size?

Answer: Template matching works better on small images; at the original size the result is not satisfactory. If I use the original image size, the result will be: link

Possible Question: Why did you use cv2.TM_CCOEFF?

Answer: It was just an example; you can experiment with the other parameters (matching methods).

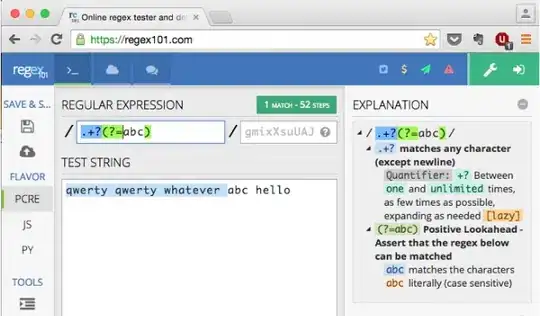

Possible Question: How do I calculate the similarity-percentage using template-matching?

Answer: Please look at this answer. As stated there, you can use the output of minMaxLoc to derive a similarity percentage.

For the full code, please look at the opencv-python tutorial.

The second photo is a photo of a sacred object (A) captured with a phone camera.
