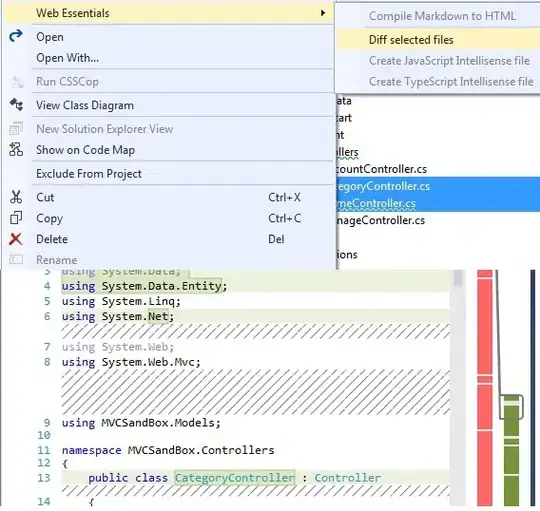

I'm fine-tuning a Longformer on a binary document classification task using the Hugging Face `Trainer` class, and I'm monitoring the metrics at several checkpoints with TensorBoard.

Although the F1 score and accuracy are quite high, I'm puzzled by the fluctuations in the training loss.

From what I've read online, possible causes include:

- a learning rate that is too high, but I tried three values (1e-4, 1e-5 and 1e-6) and all of them produced the same effect

- a small batch size. I'm using a SageMaker p2.8xlarge notebook instance, which has 8 K80 GPUs. The largest per-GPU batch size that avoids the CUDA out-of-memory error is 1, so the total batch size is 8. My intuition is that a batch size of 8 is too small for a dataset containing 57K examples (about 7K steps per epoch). Unfortunately, it's the highest value I can use.
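As a sanity check on the numbers above, the total batch size and the steps per epoch can be worked out with a few lines of plain Python. This is only a sketch: the function names are made up for illustration, and `grad_accum_steps` is a hypothetical knob (analogous to the `gradient_accumulation_steps` option of `TrainingArguments`) that was not used in the runs described here, so it defaults to 1.

```python
import math


def effective_batch_size(per_device_batch, n_devices, grad_accum_steps=1):
    """Examples that contribute to one optimizer update.

    grad_accum_steps is hypothetical here; the runs in this post use 1.
    """
    return per_device_batch * n_devices * grad_accum_steps


def batches_per_epoch(n_examples, per_device_batch, n_devices):
    """Forward/backward passes needed to see every example once."""
    global_batch = per_device_batch * n_devices
    return math.ceil(n_examples / global_batch)


# Setup from the post: batch size 1 per GPU, 8 GPUs, 57K training examples.
print(effective_batch_size(1, 8))        # 8
print(batches_per_epoch(57_000, 1, 8))   # 7125
```

So "7K steps per epoch" is 7,125 steps, each computed from only 8 examples.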

The image above shows the trend of F1, accuracy, loss and smoothed loss. The grey line is the run with a learning rate of 1e-6, while the pink one is the run with 1e-5.
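For context on what a smoothed loss curve represents, here is a minimal sketch of a bias-corrected exponential moving average, which is similar in spirit to the smoothing slider in TensorBoard. The function name `ema_smooth`, the default `weight`, and the sample loss values are assumptions for illustration, not values taken from these runs, and TensorBoard's exact implementation may differ.

```python
def ema_smooth(values, weight=0.6):
    """Bias-corrected exponential moving average of a sequence.

    weight must be in [0, 1): 0 means no smoothing, values close to 1
    mean heavy smoothing.
    """
    smoothed = []
    running = 0.0
    for i, v in enumerate(values, start=1):
        running = weight * running + (1.0 - weight) * v
        # Divide by (1 - weight**i) so early points are not biased toward 0.
        smoothed.append(running / (1.0 - weight ** i))
    return smoothed


# Made-up noisy per-step losses, as one might log with a tiny batch size.
raw_loss = [0.9, 0.2, 0.8, 0.1, 0.7, 0.15, 0.6, 0.1]
for raw, smooth in zip(raw_loss, ema_smooth(raw_loss, weight=0.9)):
    print(f"raw={raw:.2f}  smoothed={smooth:.3f}")
```

The smoothed series follows the overall trend while damping the step-to-step jumps, which is why the raw and smoothed loss panels can look so different.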

To summarize my training setup:

- batch size: 1 per GPU x 8 GPUs = 8

- learning rate: 1e-4, 1e-5, 1e-6 (all tested, with no improvement in the loss behaviour)

- model: Longformer

- dataset:

  - training set: 57K examples

  - dev set: 12K examples

  - test set: 12K examples

What could be the reason? Should this be considered a problem despite the fairly good F1 and accuracy results?