The method pandas.read_excel does not support using wasbs or abfss scheme URL to access the file. For more details, please refer to here

So if you want to access the file with pandas, I suggest you create a sas token and use https scheme with sas token to access the file or download the file as stream then read it with pandas. Meanwhile, you also mount the storage account as filesystem then access file as @CHEEKATLAPRADEEP-MSFT said.

For example

create sas token via Azure portal

Code

pdf=pd.read_excel('https://<account name>.dfs.core.windows.net/<file system>/<path>?<sas token>')

print(pdf)

- Download the file as stream and read the file

Install package azure-storage-file-datalake and xlrd with pip in databricks

Code

import io

import pandas as pd

from azure.storage.filedatalake import BlobServiceClient

from azure.storage.filedatalake import DataLakeServiceClient

blob_service_client = DataLakeServiceClient(account_url='https://<account name>.dfs.core.windows.net/', credential='<account key>')

file_client = blob_service_client.get_file_client(file_system='test', file_path='data/sample.xlsx')

with io.BytesIO() as f:

downloader =file_client.download_file()

b=downloader.readinto(f)

print(b)

df=pd.read_excel(f)

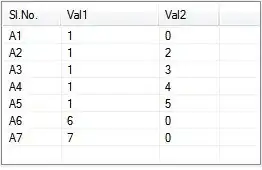

print(df)

Besides we also can use pyspark to read excel file. But we need to add jar com.crealytics:spark-excel in our environment. For more details, please refer to here and here

For example

Add package com.crealytics:spark-excel_2.12:0.13.1 via maven. Besides, please note that if you use scala 2.11, please add package com.crealytics:spark-excel_2.11:0.13.1

Code

spark._jsc.hadoopConfiguration().set("fs.azure.account.key.<account name>.dfs.core.windows.net",'<account key>')

print("use spark")

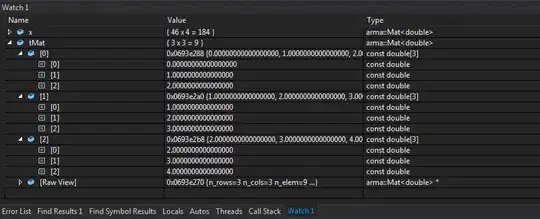

df=sqlContext.read.format("com.crealytics.spark.excel") \

.option("header", "true") \

.load('abfss://test@testadls05.dfs.core.windows.net/data/sample.xlsx')

df.show()