I've implemented VGG16 from scratch in both Keras and PyTorch, trying to make the two implementations exactly the same. I'm training both on CIFAR-10, but the results are very different.

Here's my implementation in Keras:

```python
import keras
from keras.datasets import cifar10
from keras.layers import Conv2D, Dense, Flatten, MaxPool2D, ReLU
from keras.models import Sequential
from keras.optimizers import Adam
from keras.utils import to_categorical

# Load CIFAR-10 and scale pixel values to [0, 1]
(x_train, y_train), (x_test, y_test) = cifar10.load_data()
x_train = x_train / 255
x_test = x_test / 255

# One-hot encode the labels for categorical_crossentropy
y_train = to_categorical(y_train, 10)
y_test = to_categorical(y_test, 10)

model = Sequential()

# Block 1
model.add(Conv2D(input_shape=(32, 32, 3), filters=64, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=64, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

# Block 2
model.add(Conv2D(filters=128, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=128, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

# Block 3
model.add(Conv2D(filters=256, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=256, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=256, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

# Block 4
model.add(Conv2D(filters=512, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=512, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=512, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

# Block 5
model.add(Conv2D(filters=512, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=512, kernel_size=(3, 3), padding="same"))
model.add(ReLU())
model.add(Conv2D(filters=512, kernel_size=(3, 3), padding="same"))
# (no ReLU after this conv, unlike every other conv here and layer5 in the PyTorch version)
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))

# Classifier
model.add(Flatten())
model.add(Dense(units=4096, activation="relu"))
model.add(Dense(units=4096, activation="relu"))
model.add(Dense(units=10, activation="softmax"))

# Adam with default betas (0.9, 0.999); `learning_rate` replaces the deprecated `lr`
opt = Adam(learning_rate=0.0001, epsilon=1e-06)
model.compile(optimizer=opt, loss=keras.losses.categorical_crossentropy, metrics=["accuracy"])

hist = model.fit(x_train, y_train,
                 epochs=50,
                 batch_size=100,
                 validation_data=(x_test, y_test))
```

And the same in PyTorch:

```python
import torch
import torch.nn as nn
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader

num_epochs = 50
num_classes = 10
batch_size = 100
learning_rate = 0.0001

# ToTensor() turns HWC uint8 images in [0, 255] into CHW float tensors in [0, 1]
trans = transforms.ToTensor()
train_dataset = torchvision.datasets.CIFAR10(root="./dataset_pytorch", train=True, download=True, transform=trans)
test_dataset = torchvision.datasets.CIFAR10(root="./dataset_pytorch", train=False, download=True, transform=trans)
train_loader = DataLoader(dataset=train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(dataset=test_dataset, batch_size=batch_size, shuffle=False)


class ConvNet(nn.Module):
    def __init__(self):
        super(ConvNet, self).__init__()
        self.layer1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2))
        self.layer2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2))
        self.layer3 = nn.Sequential(
            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2))
        self.layer4 = nn.Sequential(
            nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2))
        self.layer5 = nn.Sequential(
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2))
        # Five 2x2 poolings shrink 32x32 to 1x1, so the flattened size is 512
        self.fc1 = nn.Sequential(
            nn.Linear(512, 4096),
            nn.ReLU())
        self.fc2 = nn.Sequential(
            nn.Linear(4096, 4096),
            nn.ReLU())
        # Softmax output, mirroring Dense(10, activation="softmax") in Keras
        self.fcout = nn.Sequential(
            nn.Linear(4096, 10),
            nn.Softmax(dim=1))

    def forward(self, x):
        out = self.layer1(x)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = self.layer5(out)
        out = out.reshape(out.size(0), -1)
        out = self.fc1(out)
        out = self.fc2(out)
        out = self.fcout(out)
        return out


# Glorot (Xavier) uniform weights and zero biases, like the Keras layer defaults
def weights_init(m):
    if isinstance(m, nn.Conv2d) or isinstance(m, nn.Linear):
        nn.init.xavier_uniform_(m.weight.data)
        nn.init.zeros_(m.bias.data)


model = ConvNet()
model.apply(weights_init)

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)

# Loss and optimizer (nn.CrossEntropyLoss applies log-softmax to its input internally)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate, eps=1e-6)

# Train the model
total_step = len(train_loader)
for epoch in range(num_epochs):
    total = 0
    correct = 0
    for i, (images, labels) in enumerate(train_loader):
        images = images.to(device)
        labels = labels.to(device)
        optimizer.zero_grad()

        # Run the forward pass
        outputs = model(images)
        loss = criterion(outputs, labels)

        # Backprop and perform Adam optimisation
        loss.backward()
        optimizer.step()

        # Track the accuracy
        total += labels.size(0)
        _, predicted = torch.max(outputs.data, 1)
        correct += (predicted == labels).sum().item()

    print("Train")
    print('Epoch [{}/{}], Accuracy: {:.2f}%'
          .format(epoch + 1, num_epochs, (correct / total) * 100))

    # Evaluate on the test set; no gradients are needed here
    total_test = 0
    correct_test = 0
    with torch.no_grad():
        for i, (images, labels) in enumerate(test_loader):
            images = images.to(device)
            labels = labels.to(device)

            # Run the forward pass
            outputs = model(images)

            # Track the accuracy
            total_test += labels.size(0)
            _, predicted = torch.max(outputs.data, 1)
            correct_test += (predicted == labels).sum().item()

    print("Test")
    print('Epoch [{}/{}], Accuracy: {:.2f}%'
          .format(epoch + 1, num_epochs, (correct_test / total_test) * 100))
```
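The `weights_init` function above is meant to reproduce what the Keras layers get by default (`kernel_initializer="glorot_uniform"`, `bias_initializer="zeros"`). One detail worth checking is that the two frameworks store kernels in different layouts: Keras uses `(kh, kw, in, out)` for `Conv2D` and `(in, out)` for `Dense`, while PyTorch uses `(out, in, kh, kw)` for `Conv2d` and `(out, in)` for `Linear`. The pure-Python sketch below computes fan-in and fan-out for both layouts and the Glorot/Xavier uniform bound `sqrt(6 / (fan_in + fan_out))`. The helper names (`fans_keras`, `fans_torch`, `glorot_limit`) are hypothetical, written only to illustrate the formula; they are not part of either library.

```python
import math
import random


def fans_keras(shape):
    """fan_in/fan_out for a Keras kernel: Conv2D (kh, kw, in, out) or Dense (in, out)."""
    if len(shape) == 2:
        return shape[0], shape[1]
    kh, kw, cin, cout = shape
    return cin * kh * kw, cout * kh * kw


def fans_torch(shape):
    """fan_in/fan_out for a PyTorch weight: Conv2d (out, in, kh, kw) or Linear (out, in)."""
    if len(shape) == 2:
        return shape[1], shape[0]
    cout, cin, kh, kw = shape
    return cin * kh * kw, cout * kh * kw


def glorot_limit(fan_in, fan_out):
    """Bound a of U(-a, a) for Glorot/Xavier uniform initialization with gain 1."""
    return math.sqrt(6.0 / (fan_in + fan_out))


# First conv (3 -> 64, 3x3) and first dense layer (512 -> 4096) of the VGG16 above
conv_keras, conv_torch = (3, 3, 3, 64), (64, 3, 3, 3)
fc_keras, fc_torch = (512, 4096), (4096, 512)

conv_limit = glorot_limit(*fans_keras(conv_keras))
fc_limit = glorot_limit(*fans_keras(fc_keras))
print(conv_limit, glorot_limit(*fans_torch(conv_torch)))
print(fc_limit, glorot_limit(*fans_torch(fc_torch)))

# Sample the first conv kernel with that bound (flattened, seeded for repeatability)
rng = random.Random(0)
kernel = [rng.uniform(-conv_limit, conv_limit) for _ in range(3 * 3 * 3 * 64)]
```

Because both layouts yield the same fans, the bound is the same in both frameworks; only the random draws differ, so the two networks start from different (but identically distributed) weights.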

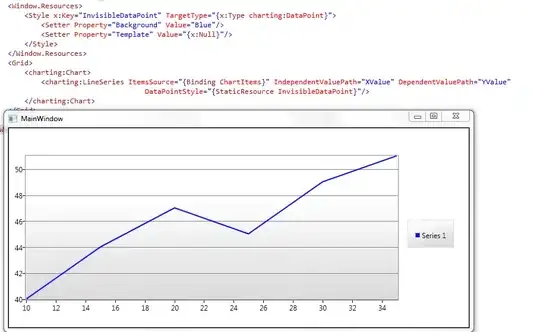

As you can see, I've adjusted it to use the same initializers in the network and parameters in the optimizer. However, the results are too different, as you can see in the following image.
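One place where the two loss setups are not equivalent: in Keras, the final `softmax` feeds `categorical_crossentropy`, which (with its default `from_logits=False`) expects probabilities. In PyTorch, `nn.CrossEntropyLoss` is documented as combining `LogSoftmax` and `NLLLoss`, so it expects unnormalized logits, while the `ConvNet` above already ends in `nn.Softmax`. The pure-Python sketch below re-implements the single-sample loss formula (it is not PyTorch code) to show one consequence: when the input is already a probability vector, the loss cannot drop below `log(e + 9) - 1` (about 1.46) for 10 classes, even for a perfectly confident, correct prediction. Whether this accounts for the whole gap in the plots is not something this sketch can show.

```python
import math


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_loss(logits, target):
    """Single-sample version of the CrossEntropyLoss formula: -log_softmax(logits)[target]."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[target]


num_classes = 10
target = 3
# A very confident, correct prediction
logits = [-20.0] * num_classes
logits[target] = 20.0

# Loss on raw logits (the input the loss is designed for)
loss_on_logits = cross_entropy_loss(logits, target)
# Loss on softmax outputs, as in the ConvNet above: softmax is effectively applied twice
loss_on_probs = cross_entropy_loss(softmax(logits), target)
# Lowest value reachable with probability inputs (one-hot input)
loss_floor = math.log(math.e + num_classes - 1) - 1.0
print(loss_on_logits, loss_on_probs, loss_floor)
```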

Here's another run of the same two networks:

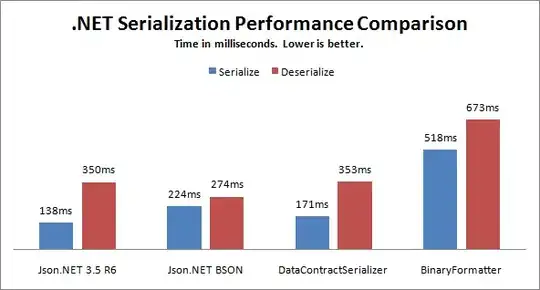
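On the optimizer side, setting `epsilon=1e-06` in Keras and `eps=1e-6` in PyTorch does not make the two Adam updates exactly identical. The TensorFlow/Keras `Adam` documentation notes that its epsilon is the "epsilon hat" of the Kingma & Ba paper: the bias correction is folded into the step size and epsilon is added to `sqrt(v)`. `torch.optim.Adam` instead bias-corrects `m` and `v` first and adds `eps` to `sqrt(v_hat)`. The pure-Python sketch below writes out both single-parameter update rules as I understand them from the two libraries' documentation; the gradient value `g` is hypothetical, chosen small so the difference is visible.

```python
import math


def keras_adam_step(m_t, v_t, t, lr, beta1, beta2, eps):
    """Update size in Keras: bias correction folded into the step size,
    eps added to sqrt(v_t) ("epsilon hat")."""
    lr_t = lr * math.sqrt(1 - beta2 ** t) / (1 - beta1 ** t)
    return lr_t * m_t / (math.sqrt(v_t) + eps)


def torch_adam_step(m_t, v_t, t, lr, beta1, beta2, eps):
    """Update size in torch.optim.Adam: m and v bias-corrected first,
    eps added to sqrt(v_hat)."""
    m_hat = m_t / (1 - beta1 ** t)
    v_hat = v_t / (1 - beta2 ** t)
    return lr * m_hat / (math.sqrt(v_hat) + eps)


lr, beta1, beta2, eps = 1e-4, 0.9, 0.999, 1e-6  # values used in the question
t = 1                                          # first optimizer step
g = 1e-6                                       # hypothetical small gradient
m_t = (1 - beta1) * g                          # first moment after one step
v_t = (1 - beta2) * g * g                      # second moment after one step

keras_step = keras_adam_step(m_t, v_t, t, lr, beta1, beta2, eps)
torch_step = torch_adam_step(m_t, v_t, t, lr, beta1, beta2, eps)
# PyTorch matches the Keras step only with a step-dependent, rescaled eps
torch_matched = torch_adam_step(m_t, v_t, t, lr, beta1, beta2,
                                eps / math.sqrt(1 - beta2 ** t))
print(keras_step / lr, torch_step / lr, torch_matched / lr)
```

Since `1 - beta2**t` approaches 1 as training proceeds (at 500 steps per epoch, within roughly the first ten epochs), this difference probably only matters early on and for parameters with very small gradients.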

I've also tried adding batch normalization after every layer (between the layer and its activation). That partly solves the problem, but not completely. Here's the plot:

Is there a reasonable explanation for this behavior, or have I made a mistake in the code?