This is a clarification/restatement of a recent question/answer I posted. I'm wondering if my solution is the simplest or most efficient option.

Q: Separate column containing some missing values

I have a dataframe with three columns: df.location with comma-separated longitude-latitude coordinates in string form, df.target, a target variable with integers between 1 and 5 in currently formatted as floats, and df.null, a column that's mostly nan but also has a mix of latitude-longitude coordinates and floats between 1 and 5.

Here's an example df:

df = pd.DataFrame(

{'target': {0: nan, 1: nan, 2: nan, 3: nan, 4: nan, 5: 4.0, 6: 5.0, 7: 4.0, 8: 4.0, 9: 4.0},

'location': {0: nan, 1: nan, 2: nan, 3: nan, 4: nan, 5: '41.69230795,-72.26691314', 6: '41.70631764,-70.2868794', 7: '41.70687995,-70.28684036', 8: '41.70598417,-70.28671793', 9: '41.69220757,-70.26687248'},

'null': {0: '41.70477575,-70.28844073', 1: '2', 2: '41.70637091,-70.28704334', 3: '4', 4: '3', 5: nan, 6: nan, 7: nan, 8: nan, 9: nan}

}

)

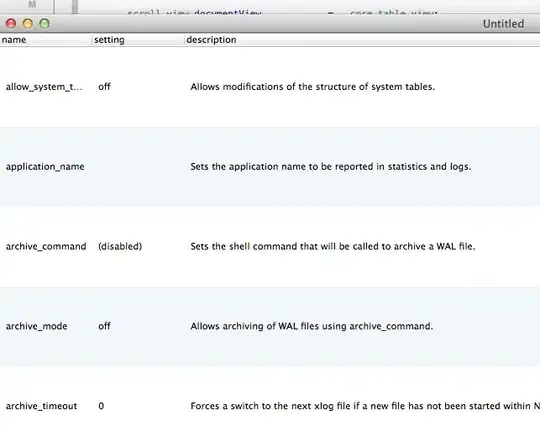

For every row where there's a non-missing value in df.null, the values in df.target and df.location are missing. (I have no idea how this came about, but I check the raw JSON I read into the Pandas Dataframe, and sure enough this null key pops up frequently when location and target are missing.) Here's a screenshot of a Seaborn heatmap from my Jupyter Notebook to illustrate:

Is it safe to assume some or all of the missing values in df.location and df.target are in df.null? If so, how to do move these values into the appropriate column based on whether they're the lat-lon strings or target floats?

A: Handling with fillna() and str.contains()

Here's my best answer so far — let me know what you think. Basically I just used fillna(value=df.null) to fill all the missing values in df.location and df.target:

df.target.fillna(

value=df.null,

inplace=True

)

df.location.fillna(

value=df.null,

inplace=True

)

Then I used regex to boolean filter through df.target and df.location and set all the inappropriate values to np.nan:

# Converting columns to type str so string methods work

df = df.astype(str)

# Using regex to change values that don't belong in column to NaN

regex = '[,]'

df.loc[df.target.str.contains(regex), 'target'] = np.nan

regex = '^\d\.?0?$'

df.loc[df.location.str.contains(regex), 'location'] = np.nan

# Returning `df.level` to float datatype (str is the correct

# datatype for `df.location`

df.target.astype(float)

Is there a better way to do this?

Edit: Changed fillna() cell code so that it works.