I'm scraping some products from StockX. Each product page has a "Sales History" popup: I click the "View All Sales" text link to open it, then keep clicking the "Load More" button until the full sales history is loaded.

My problem is that this works fine for most of the URLs I loop through, but occasionally the scraper hangs for a very long time. The "Load More" button is present but not clickable, and the bottom of the history has not been reached either, so I believe the code just stays in the `while True` loop. Any help with breaking out of this loop, or a workaround in Selenium, would be awesome. Thank you!
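A minimal, browser-free sketch of one way to make this kind of loop always terminate: cap the total number of clicks, and stop once several consecutive clicks add no new rows. The function `click_until_exhausted` and its `max_clicks`/`patience` parameters are hypothetical. The two callables stand in for whatever clicks the "Load More" button and counts the loaded rows, so the stopping logic can be checked without Selenium.

```python
from typing import Callable


def click_until_exhausted(
    click_load_more: Callable[[], bool],
    count_rows: Callable[[], int],
    max_clicks: int = 100,
    patience: int = 3,
) -> int:
    """Click 'Load More' until it disappears, stops adding rows, or a cap is hit.

    click_load_more: performs one click; returns False if the button is gone.
    count_rows: returns how many rows are currently loaded in the table.
    max_clicks: hard upper bound, so the loop can never run forever.
    patience: consecutive clicks allowed without new rows before giving up.
    Returns the number of clicks performed.
    """
    clicks = 0
    stalled = 0
    last_count = count_rows()
    while clicks < max_clicks and stalled < patience:
        if not click_load_more():
            break  # button no longer present: reached the bottom
        clicks += 1
        current = count_rows()
        if current > last_count:
            stalled = 0  # new rows arrived, reset the stall counter
            last_count = current
        else:
            stalled += 1  # the click went through but nothing new loaded
    return clicks
```

With Selenium, `click_load_more` might look the button up with `driver.find_elements(By.XPATH, ...)`, return `False` when the list is empty, and otherwise click the first match and return `True`; `count_rows` might return the length of a similar `find_elements` lookup for the table cells.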

This is the function that I use to open the sales history information:

url = "https://stockx.com/adidas-ultra-boost-royal-blue"

driver = webdriver.Firefox()

driver.get(url)

content = driver.page_source

soup = BeautifulSoup(content, 'lxml')

def get_sales_history():

""" get sales history data from sales history table interaction """

sales_hist_data = []

try:

# click 'View All Sales' text link

View_all_sales_button = driver.find_element_by_xpath(".//div[@class='market-history-sales']/a[@class='all']")

View_all_sales_button.click()

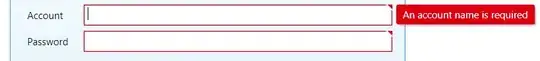

# log in

login_button = driver.find_element_by_id("nav-signup")

login_button.click

# add username

username = driver.find_element_by_id("email-login")

username.clear()

username.send_keys("email@email.com")

# add password

password = driver.find_element_by_name("password-login")

password.clear()

password.send_keys("password")

except:

pass

while True:

try:

# If 'Load More' Appears Click Button

sales_hist_load_more_button = driver.find_element_by_xpath(

".//div[@class='latest-sales-container']/button[@class='button button-block button-white']")

sales_hist_load_more_button.click()

except:

#print("Reached bottom of page")

break

content = driver.page_source

soup = BeautifulSoup(content, 'lxml')

div = soup.find('div', class_='latest-sales-container')

for td in div.find_all('td'):

sales_hist_data.append(td.text)

return sales_hist_data