One of the biggest challenges in tesseract OCR text recognition is the uneven illumination of images. I need an algorithm that can decide the image is containing uneven illuminations or not.

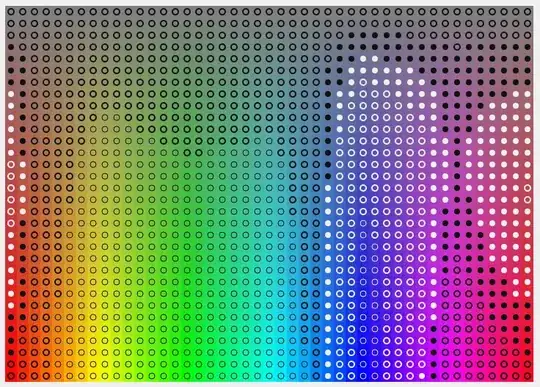

Test Images

I Attached the images of no illumination image, glare image( white-spotted image) and shadow containing image.

If we give an image to the algorithm, the algorithm should divide into two class like

- No uneven illumination - our

no illumination imagewill fall into this category. - Uneven illumination - Our

glare image( white-spotted image),shadow containing imagewill fall in this category.

No Illumination Image - Category A

UnEven Illumination Image (glare image( white-spotted image)) Category B

Uneven Illumination Image (shadow containing an image) Category B

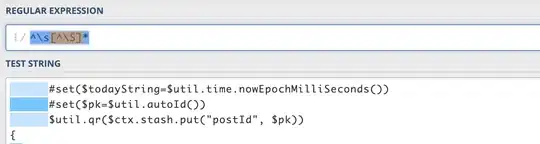

Initial Approach

Change colour space to HSV

Histogram analysis of the value channel of HSV to identify the uneven illumination.

Instead of the first two steps, we can use the perceived brightness channel instead of the value channel of HSV

Set a low threshold value to get the number of pixels which are less than the low threshold

Set a high threshold value to get the number of pixels which are higher than the high threshold

percentage of low pixels values and percentage of high pixel values to detect uneven lightning condition (The setting threshold for percentage as well )

But I could not find big similarities between uneven illumination images. I just found there are some pixels that have low value and some pixels have high value with histogram analysis.

Basically what I feel is if setting some threshold values in the low and to find how many pixels are less than the low threshold and setting some high threshold value to find how many pixels are greater than that threshold. with the pixels counts can we come to a conclusion to detect uneven lightning conditions in images? Here we need to finalize two threshold values and the percentage of the number of pixels to come to the conclusion.

def show_hist_v(img_path):

img = cv2.imread(img_path)

hsv_img = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)

h,s,v = cv2.split(hsv_img)

histr =cv2.calcHist(v, [0], None, [255],[0,255])

plt.plot(histr)

plt.show()

low_threshold =np.count_nonzero(v < 50)

high_threshold =np.count_nonzero(v >200)

total_pixels = img.shape[0]* img.shape[1]

percenet_low =low_threshold/total_pixels*100

percenet_high =high_threshold/total_pixels*100

print("Total Pixels - {}\n Pixels More than 200 - {} \n Pixels Less than 50 - {} \n Pixels percentage more than 200 - {} \n Pixel spercentage less than 50 - {} \n".format(total_pixels,high_threshold,low_threshold,percenet_low,percenet_high))

return total_pixels,high_threshold,low_threshold,percenet_low,percenet_high

So can someone improve my initial approach or give better than this approach to detect uneven illumination in images for general cases?

Also, I tried perceived brightness instead of the value channel since the value channel takes the maximum of (b,g,r) values the perceive brightness is a good choice as I think

def get_perceive_brightness( float_img):

float_img = np.float64(float_img) # unit8 will make overflow

b, g, r = cv2.split(float_img)

float_brightness = np.sqrt(

(0.241 * (r ** 2)) + (0.691 * (g ** 2)) + (0.068 * (b ** 2)))

brightness_channel = np.uint8(np.absolute(float_brightness))

return brightness_channel

def show_hist_v(img_path):

img = cv2.imread(img_path)

v = get_perceive_brightness(img)

histr =cv2.calcHist(v, [0], None, [255],[0,255])

plt.plot(histr)

plt.show()

low_threshold =np.count_nonzero(v < 50)

high_threshold =np.count_nonzero(v >200)

total_pixels = img.shape[0]* img.shape[1]

percenet_low =low_threshold/total_pixels*100

percenet_high =high_threshold/total_pixels*100

print("Total Pixels - {}\n Pixels More than 200 - {} \n Pixels Less than 50 - {} \n Pixels percentage more than 200 - {} \n Pixel spercentage less than 50 - {} \n".format(total_pixels,high_threshold,low_threshold,percenet_low,percenet_high))

return total_pixels,high_threshold,low_threshold,percenet_low,percenet_high

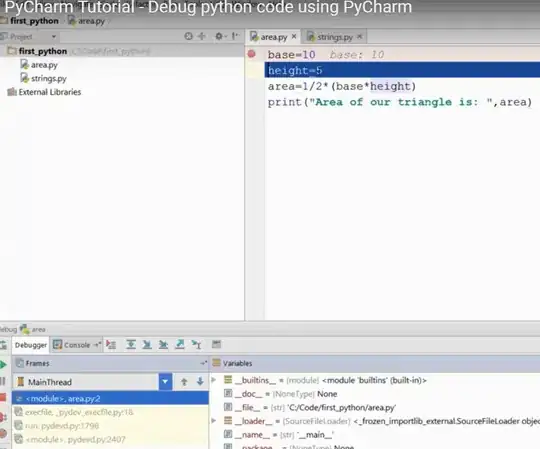

Histogram analysis of perceived brightness channel

As Ahmet suggested.

def get_percentage_of_binary_pixels(img=None, img_path=None):

if img is None:

if img_path is not None:

gray_img = cv2.imread(img_path, 0)

else:

return "No img or img_path"

else:

print(img.shape)

if len(img.shape) > 2:

gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

else:

gray_img = img

h, w = gray_img.shape

guassian_blur = cv2.GaussianBlur(gray_img, (5, 5), 0)

thresh_value, otsu_img = cv2.threshold(guassian_blur, 0, 255,

cv2.THRESH_BINARY + cv2.THRESH_OTSU)

cv2.imwrite("binary/{}".format(img_path.split('/')[-1]), otsu_img)

black_pixels = np.count_nonzero(otsu_img == 0)

# white_pixels = np.count_nonzero(otsu_img == 255)

black_pixels_percentage = black_pixels / (h * w) * 100

# white_pixels_percentage = white_pixels / (h * w) * 100

return black_pixels_percentage

when we get more than 35% of black_ pixels percentage with otsu binarization, we can detect the uneven illumination images around 80 percentage. When the illumination occurred in a small region of the image, the detection fails.

Thanks in advance