I have a bunch of aerial images and I want to stitch them together (I don't want to rely on orthomap software such as ODM).

I am following this methodology: Stitch multiple images using OpenCV (Python)

To find the matching points between two close images (based on GPS), I use SIFT features:

- cv2.SIFT_create()
- detector.detectAndCompute()
- cv2.FlannBasedMatcher()
- matcher.knnMatch()
- https://docs.opencv.org/3.4/d5/d6f/tutorial_feature_flann_matcher.html

Once I have the set of points matching the two images, I find a transform with cv2.findHomography() and I apply this transform with cv2.warpPerspective().

The problem is that as I add more and more images, the perspective distortion gets progressively worse. Are there functions similar to findHomography and warpPerspective that do not use a perspective model?

I saw affineTransform, but I'm not sure how to use it or whether it's appropriate here.

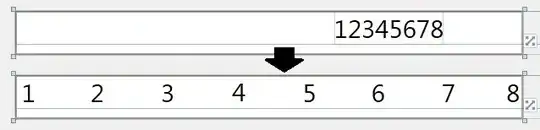

Here is the result I get at the moment: