I'm reading a Parquet file generated from a MySQL table by the AWS DMS service. The table has a field of type Point (WKB). When I read this Parquet file, Spark recognizes that field as a binary type, as the code below shows:

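```python
# Note: 'encoding' and 'mode' are CSV/JSON reader options; the Parquet reader ignores them
file_dataframe = sparkSession.read.format('parquet')\
    .option('encoding', 'UTF-8')\
    .option('mode', 'FAILFAST')\
    .load(file_path)

file_dataframe.schema
```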

And this is the result:

```
StructType(List(StructField(id,LongType,true),...,StructField(coordinate,BinaryType,true),...))
```
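Spark reports `BinaryType` because DMS writes the geometry as raw bytes. For reference, a 2D Point in WKB is a fixed 21-byte record (a byte-order flag, a uint32 geometry type where 1 means Point, then X and Y as IEEE 754 doubles), and MySQL's internal geometry format prepends a 4-byte SRID to that WKB. Whether DMS copies MySQL's internal format verbatim is an assumption to check against the real data. The sketch below only builds both layouts with Python's `struct` module so their sizes and headers can be compared; `wkb_point` and `mysql_internal_point` are hypothetical helper names.

```python
import struct

WKB_POINT = 1  # OGC WKB geometry type code for Point


def wkb_point(x: float, y: float) -> bytes:
    """Plain WKB Point: byte-order flag, uint32 type code, then X and Y as doubles (21 bytes)."""
    # '<' = little-endian with no padding, B = 1 byte, I = uint32, d = float64
    return struct.pack("<BIdd", 1, WKB_POINT, x, y)


def mysql_internal_point(x: float, y: float, srid: int = 0) -> bytes:
    """Assumed MySQL internal layout: 4-byte little-endian SRID followed by the WKB (25 bytes)."""
    return struct.pack("<I", srid) + wkb_point(x, y)


plain = wkb_point(-84.1370849609375, 9.982019424438477)
internal = mysql_internal_point(-84.1370849609375, 9.982019424438477)
print(len(plain), len(internal))  # 21 25
print(internal.hex())  # SRID, byte-order flag and type code, then the two doubles
```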

I tried casting the column to string, but this is what I get:

```python
file_dataframe = file_dataframe.withColumn('coordinates_str', file_dataframe.coordinate.astype("string"))

file_dataframe.select('coordinates_str').show()
```

```
+--------------------+
|     coordinates_str|
+--------------------+
|U�...|
|U�...|
|U�...|
|U�...|
|@G
U�...|
|@G
U�...|
|@G
U�...|
| G
U�...|
| G
U�...|
| G
U�...|
| G
U�...|
```
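The garbled output is roughly what a string cast of WKB should produce: the cast interprets the bytes as UTF-8 text, the header bytes are mostly unprintable control characters, and the coordinate doubles contain byte sequences that are not valid UTF-8, which show up as `�`. The pure-Python sketch below reproduces the effect on a hand-built point, assuming the MySQL internal layout (4-byte SRID 0 followed by little-endian WKB), and shows that a hex dump is a more useful way to inspect the column. In Spark, `pyspark.sql.functions.hex` gives the same hex view of a binary column.

```python
import struct

# Hand-built sample, assuming MySQL's internal layout: SRID 0 + little-endian WKB Point
raw = struct.pack("<IBIdd", 0, 1, 1, -84.1370849609375, 9.982019424438477)

# Roughly what a string cast does: interpret the bytes as UTF-8,
# substituting U+FFFD for byte sequences that are not valid UTF-8
as_text = raw.decode("utf-8", errors="replace")
print(repr(as_text))

# A hex dump keeps every byte visible: SRID, byte-order flag, type code, then two doubles
print(raw.hex())
```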

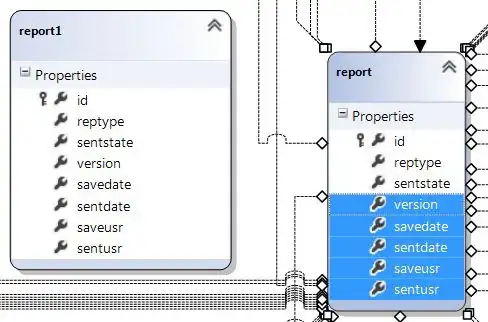

The image above shows how this field looks in MySQL. If I right-click the BLOB, I can see its value in a pop-up window.

What I'd like is to get the `POINT (-84.1370849609375 9.982019424438477)` value that I see in the MySQL viewer as a string column in a Spark DataFrame. Is this possible? I've been searching for this, but haven't found anything that puts me on the right track.
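One possible approach, sketched under assumptions: decode the bytes yourself in a Python UDF. The decoder below accepts either a plain 21-byte WKB Point or a 25-byte value that it assumes is MySQL's internal format (4-byte SRID, then WKB), reads the WKB byte-order flag, checks the type code, and formats X and Y as `POINT (x y)`. `point_blob_to_wkt` and `add_wkt_column` are hypothetical names, and the 25-byte rule should be verified against real DMS output before relying on it. PySpark passes `BinaryType` values to Python UDFs as `bytearray`, which `struct.unpack_from` handles directly.

```python
import struct
from typing import Optional, Union


def point_blob_to_wkt(blob: Optional[Union[bytes, bytearray]]) -> Optional[str]:
    """Decode a Point stored as plain WKB (21 bytes) or assumed MySQL internal format (25 bytes)."""
    if blob is None:
        return None
    data = bytes(blob)
    if len(data) == 25:
        data = data[4:]  # assumption: drop MySQL's 4-byte SRID prefix
    if len(data) != 21:
        raise ValueError(f"expected a 21-byte WKB Point, got {len(data)} bytes")
    # First WKB byte is the byte-order flag: 1 = little-endian (NDR), 0 = big-endian (XDR)
    if data[0] not in (0, 1):
        raise ValueError(f"invalid WKB byte-order flag {data[0]}")
    order = "<" if data[0] == 1 else ">"
    (geom_type,) = struct.unpack_from(order + "I", data, 1)
    if geom_type != 1:
        raise ValueError(f"not a WKB Point (type code {geom_type})")
    x, y = struct.unpack_from(order + "dd", data, 5)
    # repr() gives the shortest text that round-trips to the same double
    return f"POINT ({x!r} {y!r})"


def add_wkt_column(df, src_col: str, dst_col: str):
    """Hypothetical helper: attach the decoder to a Spark DataFrame as a Python UDF."""
    # Imported lazily so the decoder above can be used and tested without Spark
    from pyspark.sql import functions as F
    from pyspark.sql.types import StringType

    to_wkt = F.udf(point_blob_to_wkt, StringType())
    return df.withColumn(dst_col, to_wkt(F.col(src_col)))
```

Applied to the DataFrame from the question, `add_wkt_column(file_dataframe, 'coordinate', 'coordinates_str')` should yield text in the same `POINT (x y)` form as the MySQL viewer, provided the layout assumption holds. A Python UDF keeps the sketch dependency-free, but it moves every row through a Python worker, so a JVM-side spatial library may be faster on large tables.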